24/03/2024

VCL_hash

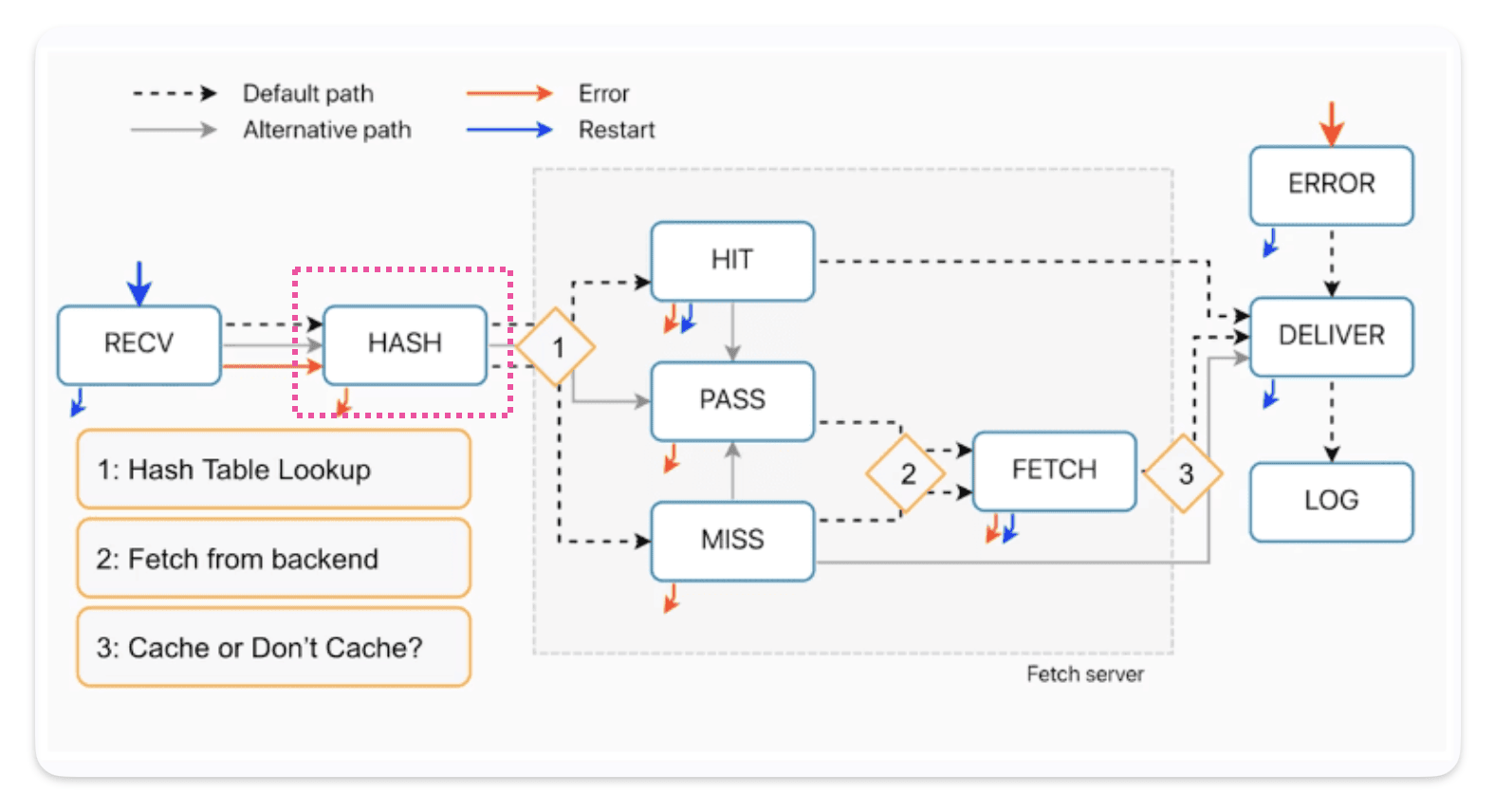

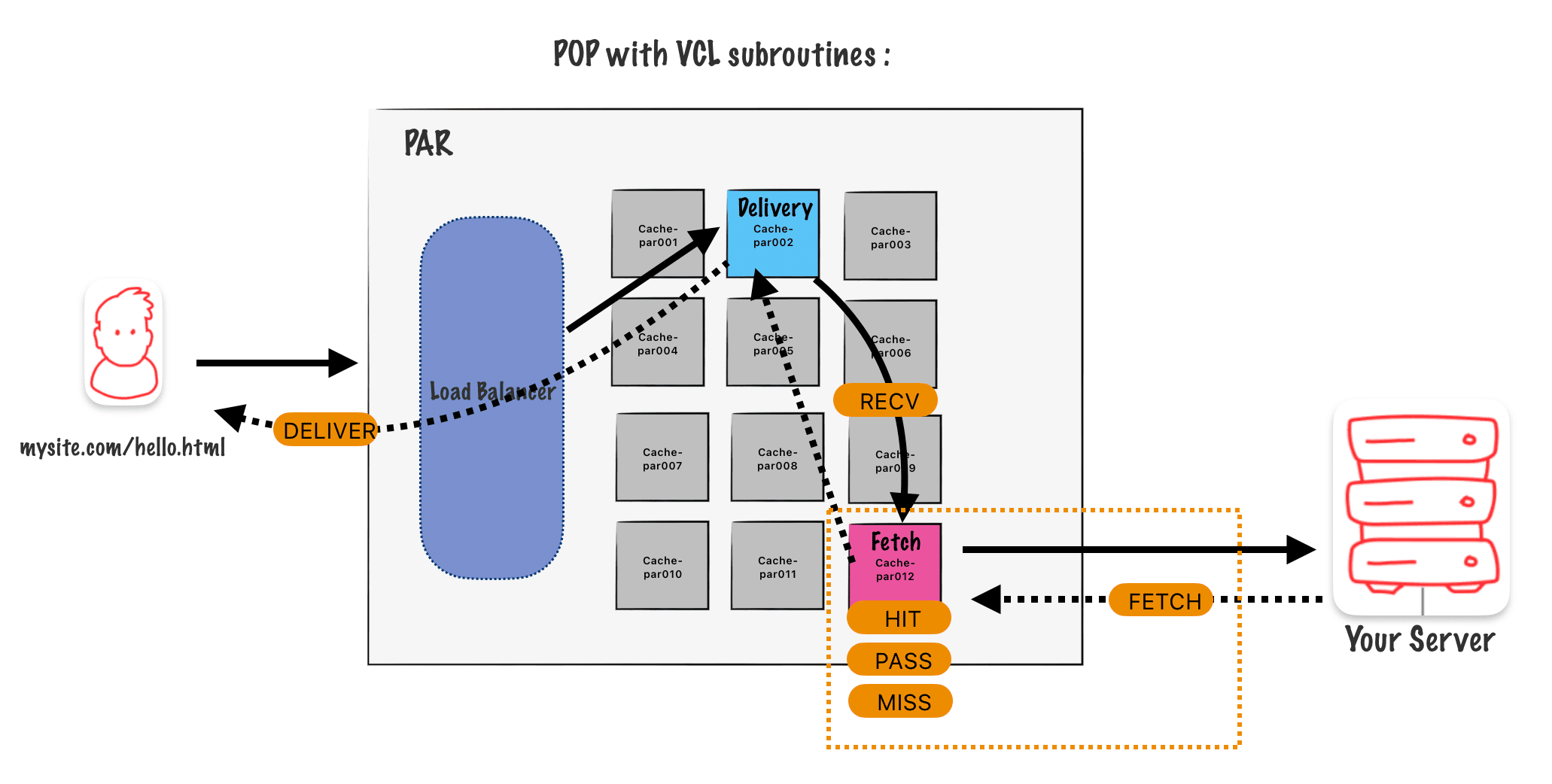

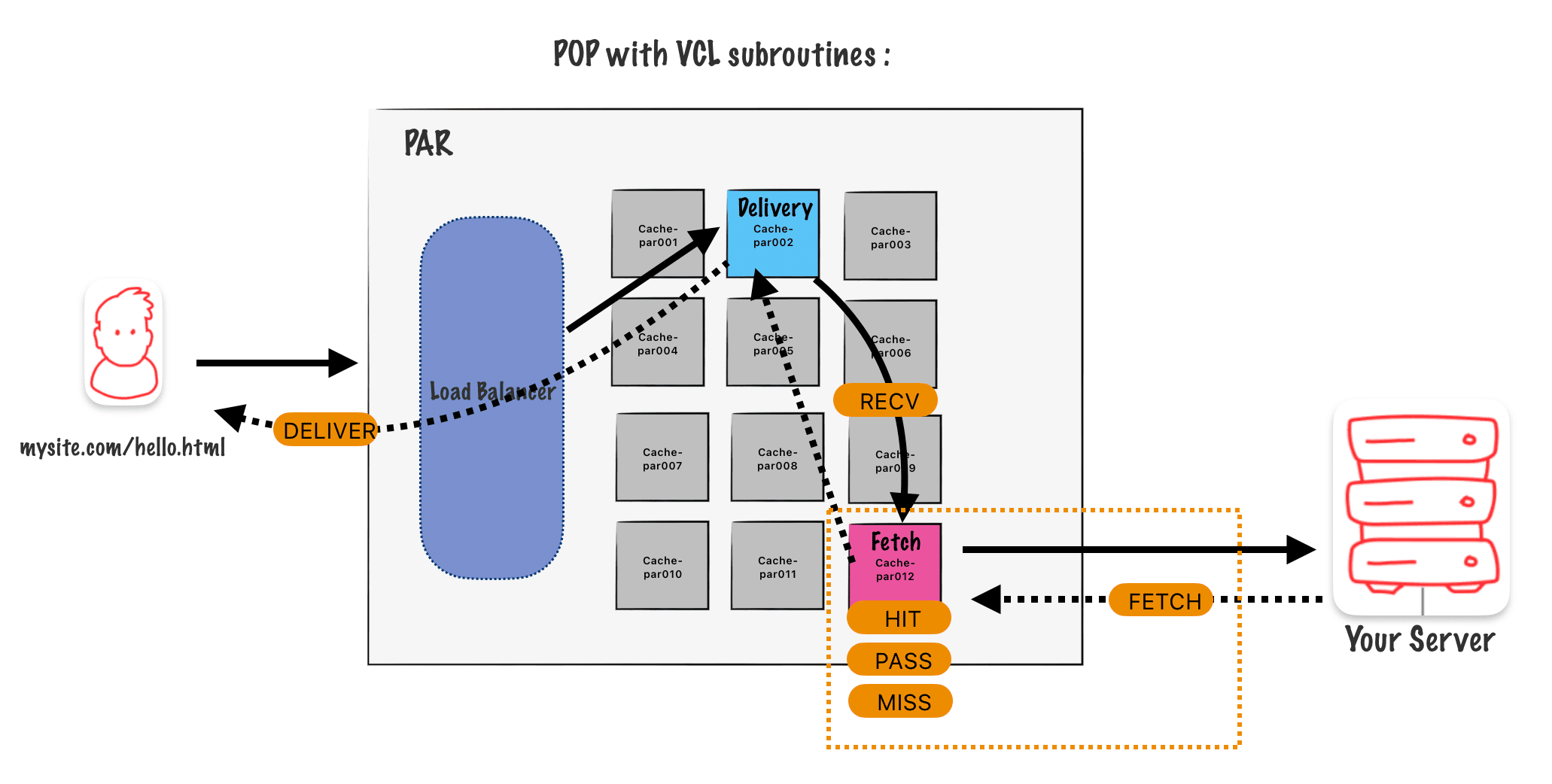

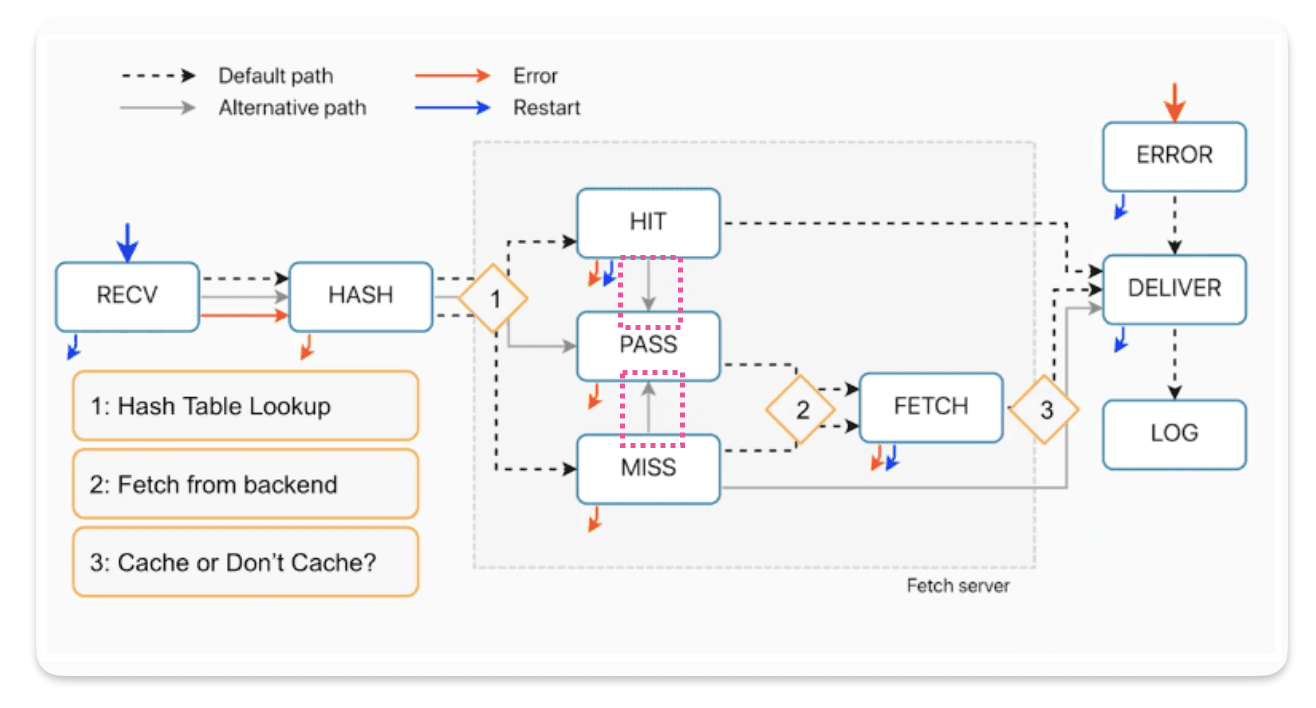

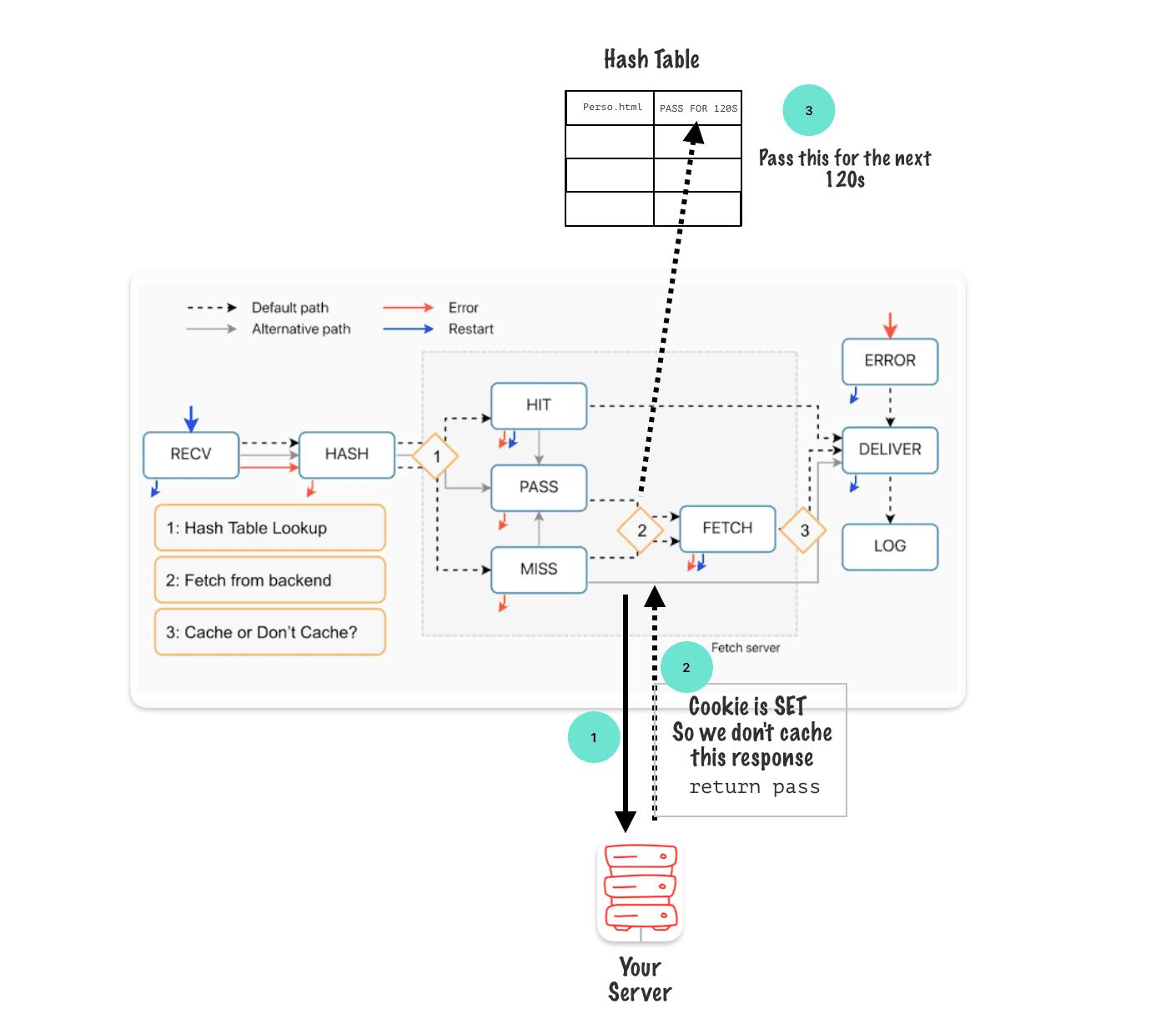

From this diagram we can see that vcl_hash runs after vcl_recv, and there is no way to skip it.

Indeed, the hash table lookup takes place right after vcl_hash.

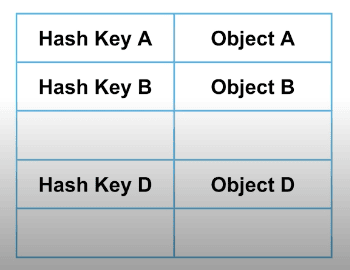

As a reminder each node has a hash table. This hash table tracks what’s in cache on that specific node.

Each request that comes through generates its own hash key.

Varnish tries to match that generated hash key with a cached response in the table.

Steps :

1) Request A arrives

2) Hash is generated from URL for request A

3) On the node we try to match this hash with an existing object mapped with this hash.

4) If this hash exists in the table, then it’s a HIT

5) If this hash does not exist in the table, then it’s a MISS

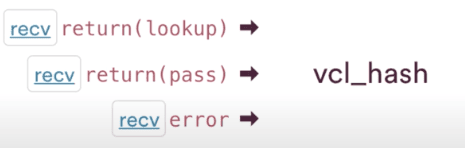

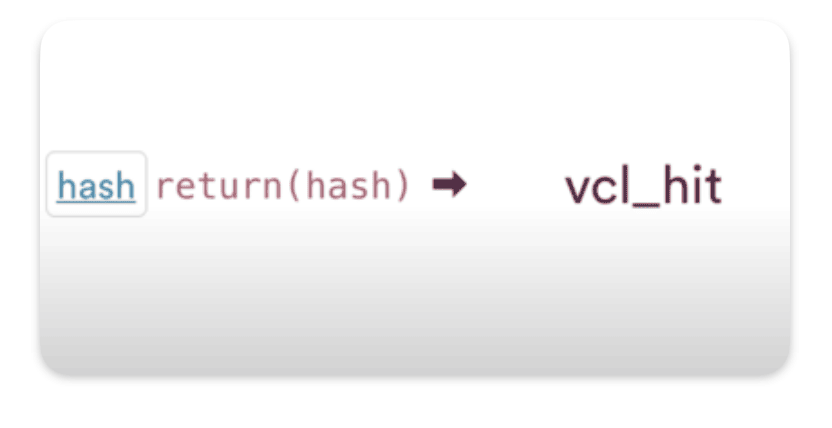

How do requests come into VCL_hash?

Everything comes from vcl_recv, and every request passes through vcl_hash no matter what. vcl_recv either asks for a lookup in the hash table, or for a pass if we want the request to go to the origin.
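To make the two entry paths concrete, here is a minimal sketch of a vcl_recv that chooses between them. The `/account/` path is a hypothetical rule invented for the illustration, and the `#FASTLY recv` line is the macro placeholder that custom VCL on Fastly expects in this subroutine.

```vcl
sub vcl_recv {
#FASTLY recv

  # Hypothetical rule: the account area is never cached,
  # so we ask for a pass and the request goes to the origin.
  if (req.url ~ "^/account/") {
    return(pass);
  }

  # Everything else asks for a lookup in the hash table.
  return(lookup);
}
```

In both cases the request still goes through vcl_hash before anything else happens.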

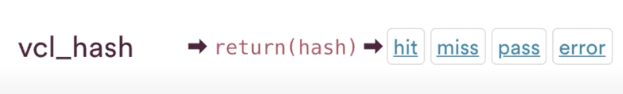

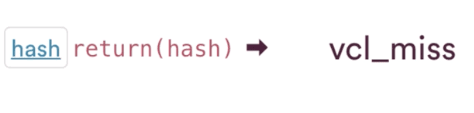

How do requests exit VCL_hash?

The only transition call out of vcl_hash is return(hash).

The lookup that follows can then go to either hit or miss (or to pass, if vcl_recv asked for one).

The VCL_hash subroutine in detail

sub vcl_hash {

#--FASTLY HASH BEGIN

#if unspecified fall back to normal

{

set req.hash += req.url;

set req.hash += req.http.host;

set req.hash += req.vcl.generation;

return (hash);

}

#--FASTLY HASH END

}

The vcl_hash routine is relatively short, and it demonstrates how the hash key is generated.

We have already discussed how it’s generated in the purge article, especially the last part regarding the generation number. This number is used for purging: bumping the generation number by one changes every hash key, which makes the previously cached objects unreachable.

// www.example.com/filepath1/?abc=123

"www.example.com" === req.http.host

"/filepath1/?abc=123" === req.url

Customize the hash key

It could be useful to customize the hash key, especially if the content varies, but the URL doesn’t.

For example, the content may vary based on the language.

{

set req.hash += req.url;

set req.hash += req.http.host;

set req.hash += req.vcl.generation;

set req.hash += req.http.Accept-Language;

return (hash);

}

⚠️ Warning : Adding an extra parameter to the hash will act as a purge-all at first, as all the requested data after this update will get a new hash.

⚠️ Warning : The Accept-Language header is a relatively safe use case since it doesn’t have much variability in it. If, on the other hand, you use a header like User-Agent, which lacks standardization and contains a wide range of inputs, your cache hit ratio will suffer significantly due to the large variance within that header.
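One way to limit that variance is to normalize the header before it enters the hash. The sketch below assumes a site that only serves French and English; the `X-Lang` header name is made up for the example. The hash subroutine follows the usual custom VCL layout, where the `#FASTLY hash` macro line is assumed to contribute the generation number shown in the listing above.

```vcl
sub vcl_recv {
#FASTLY recv

  # Collapse the raw header to a small, known set of values.
  # Assumption: only "fr" and "en" content exists.
  if (req.http.Accept-Language ~ "^fr") {
    set req.http.X-Lang = "fr";
  } else {
    set req.http.X-Lang = "en";
  }

  return(lookup);
}

sub vcl_hash {
  set req.hash += req.url;
  set req.hash += req.http.host;
  # Hash on the normalized value, not on the raw header.
  set req.hash += req.http.X-Lang;
#FASTLY hash
  return(hash);
}
```

With this layout there are at most two hash keys per URL, however many distinct Accept-Language strings the browsers send.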

👌 Good idea : Try the Vary header before tweaking the hash key. The Vary header will keep up to 200 variations or versions of the response object under one hash key.
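As an illustration of the Vary approach, the sketch below adds Accept-Language to the Vary header in vcl_fetch instead of touching the hash key. It is only a sketch: in many setups the origin sets Vary itself and no VCL is needed at all.

```vcl
sub vcl_fetch {
#FASTLY fetch

  if (!beresp.http.Vary) {
    # No Vary header from the origin: create one.
    set beresp.http.Vary = "Accept-Language";
  } else if (beresp.http.Vary !~ "Accept-Language") {
    # Append to the existing value rather than overwriting it.
    set beresp.http.Vary = beresp.http.Vary ", Accept-Language";
  }

  return(deliver);
}
```

The hash key stays the same here, so deploying this does not act as a purge-all the way a hash change does.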

VCL_hash recap :

• This is where the hash key is generated.

• Changing the Hash Key acts as a Purge All.

• See if the Vary header is a better solution before changing the hash key.

• Changing the hash inputs after vcl_hash can cause issues with shielding

VCL_hit

VCL_hit runs if we find a match in the hash table for the hash key. In other words, it means we found the object in the cache.

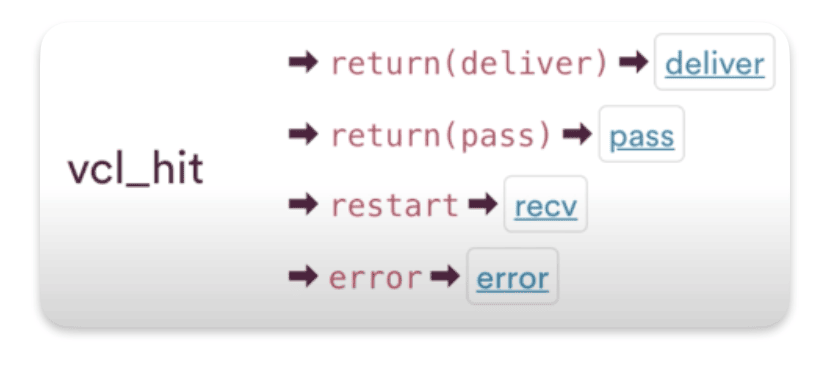

How do requests come into VCL_hit?

The only way requests reach vcl_hit is from vcl_hash. As I said earlier, if we end up in vcl_hit, there is a match.

How do requests exit VCL_hit?

The default exit call is return(deliver), but from vcl_hit you can still pass, restart, or even error.

The VCL_hit subroutine in detail

sub vcl_hit {

#--FASTLY HIT BEGIN

# we cannot reach obj.ttl and obj.grace in deliver, save them when we can in vcl_hit

set req.http.Fastly-Tmp-Obj-TTL = obj.ttl;

set req.http.Fastly-Tmp-Obj-Grace = obj.grace;

{

set req.http.Fastly-Cachetype = "HIT";

}

#--FASTLY HIT END

if (!obj.cacheable) {

return(pass);

}

return(deliver);

}

Code explanation :

# we cannot reach obj.ttl and obj.grace in deliver, save them when we can in vcl_hit

set req.http.Fastly-Tmp-Obj-TTL = obj.ttl;

set req.http.Fastly-Tmp-Obj-Grace = obj.grace;

As the comment says, obj.ttl and obj.grace are not available in vcl_deliver, so we have to copy those values into temporary variables. To transfer them from one subroutine to another, we can use headers.

The hit, pass, miss, and fetch subroutines run on the fetch server. Certain variables used there, like the obj variable, are not passed back to vcl_deliver.

Conversely, other variables, like the request headers, do get passed from hit or fetch back to deliver.
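The same header trick works for your own values. The sketch below copies the TTL into a request header in vcl_hit and reads it back in vcl_deliver. The header names `X-Tmp-Obj-TTL` and `X-Debug-Obj-TTL` are hypothetical, chosen only for this example.

```vcl
sub vcl_hit {
#FASTLY hit

  # obj.* is readable here, so copy what we need into a request header.
  set req.http.X-Tmp-Obj-TTL = obj.ttl;

  if (!obj.cacheable) {
    return(pass);
  }
  return(deliver);
}

sub vcl_deliver {
#FASTLY deliver

  # The request header made the trip back; expose it on the response.
  if (req.http.X-Tmp-Obj-TTL) {
    set resp.http.X-Debug-Obj-TTL = req.http.X-Tmp-Obj-TTL;
  }

  return(deliver);
}
```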

{

set req.http.Fastly-Cachetype = "HIT";

}

Here, we simply set an internal header to mark the request as a HIT.

if (!obj.cacheable) {

return(pass);

}

Here, if the object in cache is not cacheable, we call return(pass).

A possible use case: some late logic decides to pass even though we are already in vcl_hit. In that case we could set obj.cacheable = false.

return(deliver);

Then of course we finish with return(deliver), our default call to exit vcl_hit.

Takeaways

In vcl_hit it’s always possible to request a fresh version from the origin by calling return(pass). The cached object still remains in cache.
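A minimal sketch of that takeaway: pass from vcl_hit when a request explicitly asks for a fresh copy. The `X-Force-Fresh` header is a hypothetical trigger invented for the example; any condition available in vcl_hit would do.

```vcl
sub vcl_hit {
#FASTLY hit

  # Hypothetical trigger: the client asks for a fresh copy.
  # The cached object is left untouched by the pass.
  if (req.http.X-Force-Fresh == "1") {
    return(pass);
  }

  if (!obj.cacheable) {
    return(pass);
  }

  return(deliver);
}
```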

VCL_miss

VCL_miss, like VCL_hit, pass, and fetch, runs on the fetch server.

How do requests come into VCL_miss?

VCL_miss is triggered when :

- We don’t have an entry at all for that hash key

- We have a match for the hash key, but the object has expired ( Its TTL has run out )

NB : It’s always possible to call a PASS in MISS if you change your mind at the last minute.

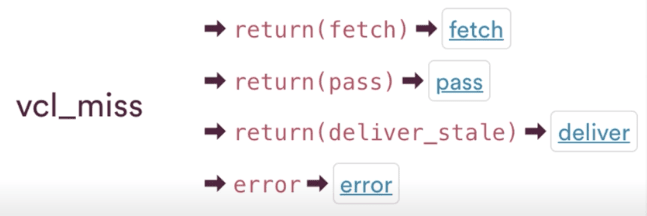

How do requests exit VCL_miss?

Our default exit call is return(fetch): we go and fetch a response from the backend.

We can call return(pass) to go to the pass subroutine instead.

We can call return(deliver_stale) when the TTL has expired but we have set rules that allow the delivery of a stale object, with the deliver stale option, stale-while-revalidate, or stale-if-error.

The last transition call is error, which takes us to vcl_error.

The VCL_miss subroutine in detail
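Three of these exits can be sketched in a few lines. Both conditions below are hypothetical rules made up for the illustration; return(deliver_stale) is left out because it depends on the stale settings of the service.

```vcl
sub vcl_miss {
#FASTLY miss

  # Hypothetical rule: stop the request before it reaches the backend.
  # This takes us to vcl_error.
  if (req.url ~ "^/internal/") {
    error 403 "Forbidden";
  }

  # Hypothetical rule: last-minute change of mind, do not cache this one.
  if (req.http.Cookie ~ "session=") {
    return(pass);
  }

  # Default exit: go and fetch the response from the backend.
  return(fetch);
}
```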

sub vcl_miss {

#--FASTLY MISS BEGIN

# this is not a hit after all, clean up these set in vcl_hit

unset req.http.Fastly-Tmp-Obj-TTL;

unset req.http.Fastly-Tmp-Obj-Grace;

{

if (req.http.Fastly-Check-SHA1) {

error 550 "Doesnt exist";

}

#--FASTLY BEREQ BEGIN

{

{

if (req.http.Fastly-FF) {

set bereq.http.Fastly-Client = "1";

}

}

{

# do not send this to the backend

unset bereq.http.Fastly-Original-Cookie;

unset bereq.http.Fastly-Original-URL;

unset bereq.http.Fastly-Vary-String;

unset bereq.http.X-Varnish-Client;

}

if (req.http.Fastly-Temp-XFF) {

if (req.http.Fastly-Temp-XFF == "") {

unset bereq.http.X-Forwarded-For;

} else {

set bereq.http.X-Forwarded-For = req.http.Fastly-Temp-XFF;

}

# unset bereq.http.Fastly-Temp-XFF;

}

}

#--FASTLY BEREQ END

#;

set req.http.Fastly-Cachetype = "MISS";

}

#--FASTLY MISS END

return(fetch);

}

Code explanation :

unset req.http.Fastly-Tmp-Obj-TTL;

unset req.http.Fastly-Tmp-Obj-Grace;

If our request went to hit, was restarted, and then ended up in miss, we clean up the headers that vcl_hit set.

if (req.http.Fastly-Check-SHA1) {

error 550 "Doesnt exist";

}

This condition checks for the Fastly-Check-SHA1 header and returns a 550 error. It is for Fastly’s internal testing only.

{

if (req.http.Fastly-FF) {

set bereq.http.Fastly-Client = "1";

}

}

Here we check whether the Fastly-FF (Fastly forwarded for) header is present. If it is, we set the Fastly-Client header on the backend request. This allows customers and developers to check whether a request is coming from Fastly.

# do not send this to the backend

unset bereq.http.Fastly-Original-Cookie;

unset bereq.http.Fastly-Original-URL;

unset bereq.http.Fastly-Vary-String;

unset bereq.http.X-Varnish-Client;

Those headers are specific to Fastly, and the idea here is to remove them before the request reaches the backend.

if (req.http.Fastly-Temp-XFF) {

if (req.http.Fastly-Temp-XFF == "") {

unset bereq.http.X-Forwarded-For;

} else {

set bereq.http.X-Forwarded-For = req.http.Fastly-Temp-XFF;

}

# unset bereq.http.Fastly-Temp-XFF;

}

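This block handles the Fastly-Temp-XFF header. If it is present but empty, X-Forwarded-For is removed from the backend request. Otherwise X-Forwarded-For is overwritten with its value.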

#--FASTLY BEREQ END

#;

set req.http.Fastly-Cachetype = "MISS";

}

#--FASTLY MISS END

return(fetch);

}

Again, we set the Fastly-Cachetype header, this time to MISS, for internal use.

Then we have the default transition call, return(fetch), which actually triggers the backend request.

Takeaways

Last Stop before the request goes to the backend

• Last minute changes to request.

• Last chance to PASS on the request.

• This is where the WAF lives (where we plug it in), just before the request goes to the backend.

VCL_pass

Pass is the subroutine we use for requests whose response we know we don’t want to cache.

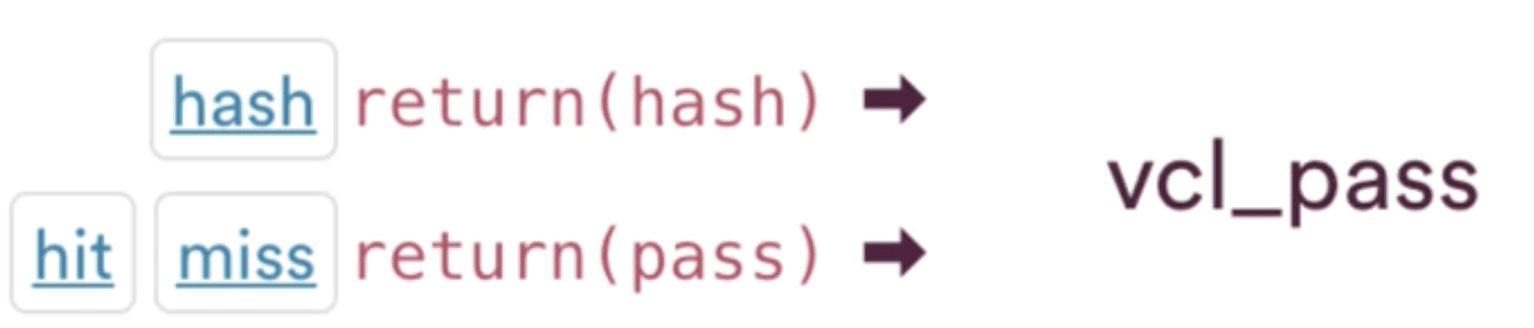

How do requests come into VCL_pass?

You can get to vcl_pass from vcl_recv by calling return(pass) there, something we already discussed in this article.

You can also end up in pass through miss or hit, as discussed in the sections above.

Hit for pass :

With the hit-for-pass behavior, we execute a return(pass) in vcl_fetch, and the hash table for that node gets an entry for that hash key that says, “Pass for the next 120 seconds” (120 seconds being the default). As explained here
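A minimal sketch of what triggers hit-for-pass: a return(pass) issued from vcl_fetch. The Set-Cookie condition is a hypothetical rule chosen for the example, not something taken from the Fastly boilerplate.

```vcl
sub vcl_fetch {
#FASTLY fetch

  # Hypothetical rule: a response that sets a cookie is user specific,
  # so we refuse to cache it. This return(pass) is what creates the
  # hit-for-pass entry for the hash key on this node.
  if (beresp.http.Set-Cookie) {
    return(pass);
  }

  return(deliver);
}
```

While that entry is alive, later requests for the same hash key are sent to vcl_pass instead of being treated as a miss.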

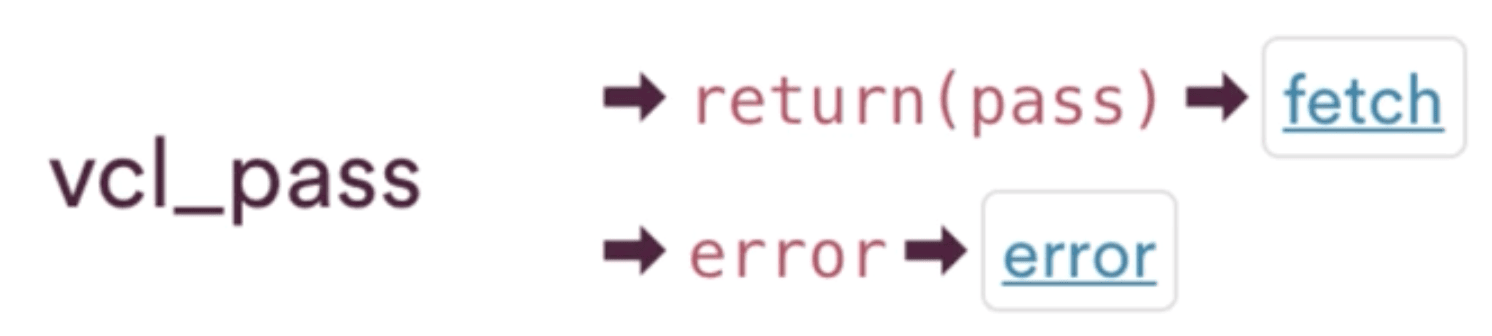

How do requests exit VCL_pass?

Our exit transition is return(pass). You can also error out of pass.

VCL_pass in detail

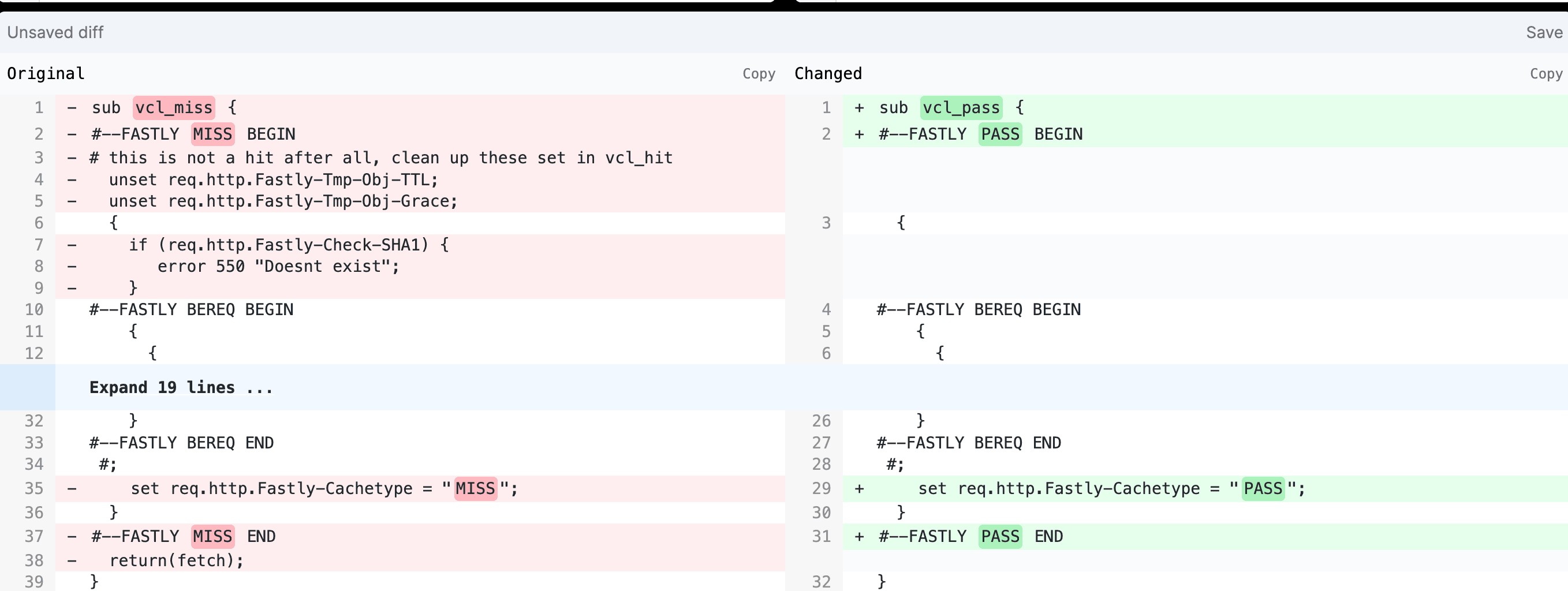

The code is very similar to vcl_miss. Looking at them in a diff checker, with vcl_miss on the left and vcl_pass on the right, we can see that just a couple of things have been removed for pass.

The temporary TTL and grace request headers are not unset in pass, so they may be passed to the origin. The Fastly-Check-SHA1 condition has also been removed. Finally, there is no explicit return(pass) at the end of vcl_pass, even though it is listed on our diagram. It doesn’t need to be there: for vcl_pass, the default transition call is applied in the background. Apart from error, return(pass) is the only way out of this subroutine.

Takeaways for VCL_pass

Last Stop before the request goes to the backend

• Last minute changes to request.

• Best Practice: Any change in MISS should also be made in PASS, and vice versa.

• This is where also WAF lives, before the request to the backend.
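The best practice above can be sketched as the same last-minute change applied in both subroutines. The `X-Edge-Token` header and its value are hypothetical; the point is only that the two blocks stay in sync.

```vcl
sub vcl_miss {
#FASTLY miss

  # Hypothetical last-minute change to the backend request.
  set bereq.http.X-Edge-Token = "example";

  return(fetch);
}

sub vcl_pass {
#FASTLY pass

  # Same change here, so passed requests reach the backend
  # with the same header as missed ones.
  set bereq.http.X-Edge-Token = "example";

  return(pass);
}
```

If the change lived only in vcl_miss, every passed request would reach the backend without it.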

Takeaways for the Hash, Hit, Miss and Pass Subroutines

• vcl_hash is for generating the hash key. Proceed with caution.

• Changing hash key inputs after vcl_hash, combined with shielding, affects purge.

• You can still PASS from vcl_hit & vcl_miss.

• Last-minute changes should happen in both vcl_miss & vcl_pass.