18/03/2024

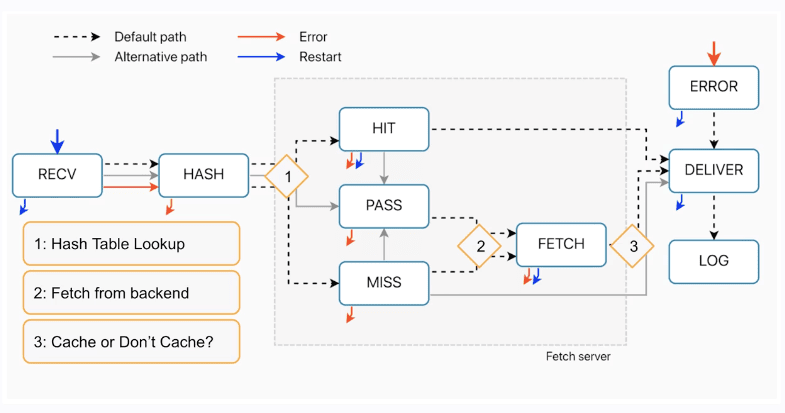

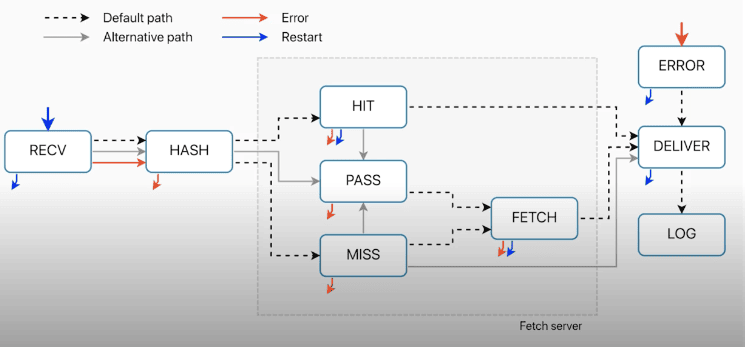

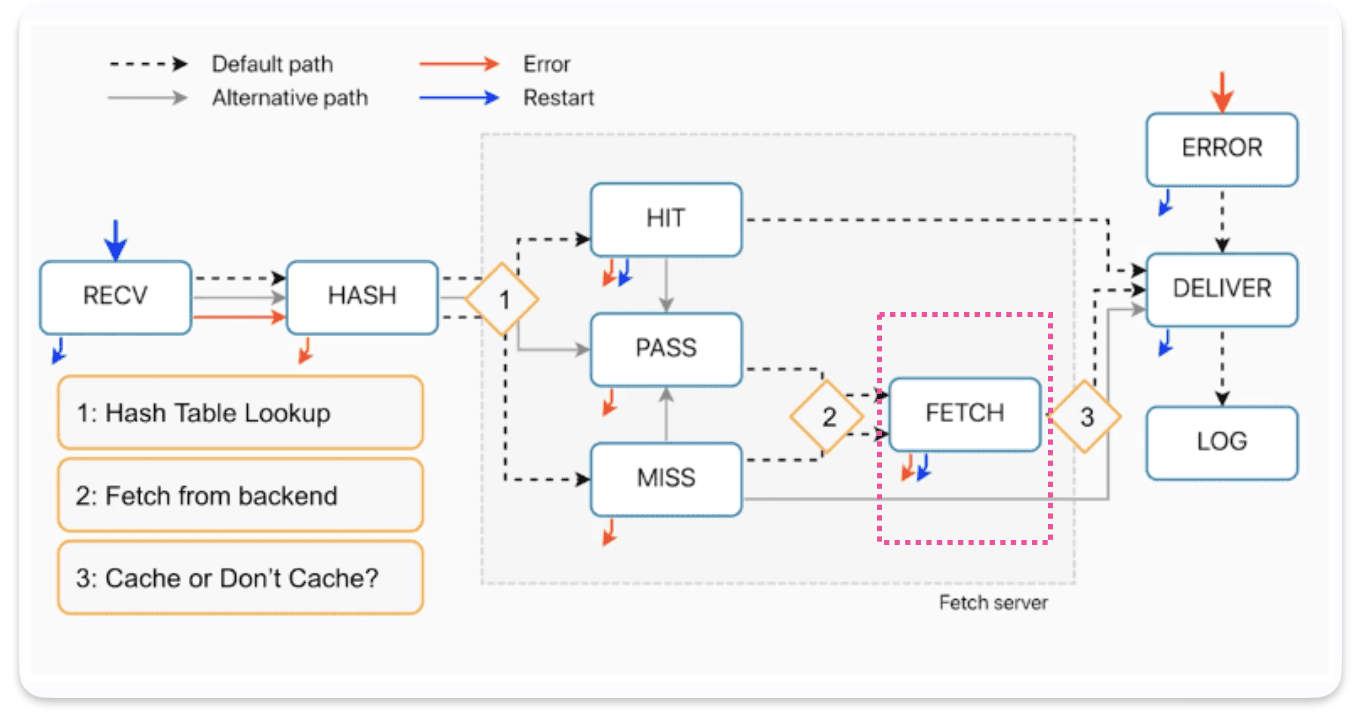

Varnish state machine flow

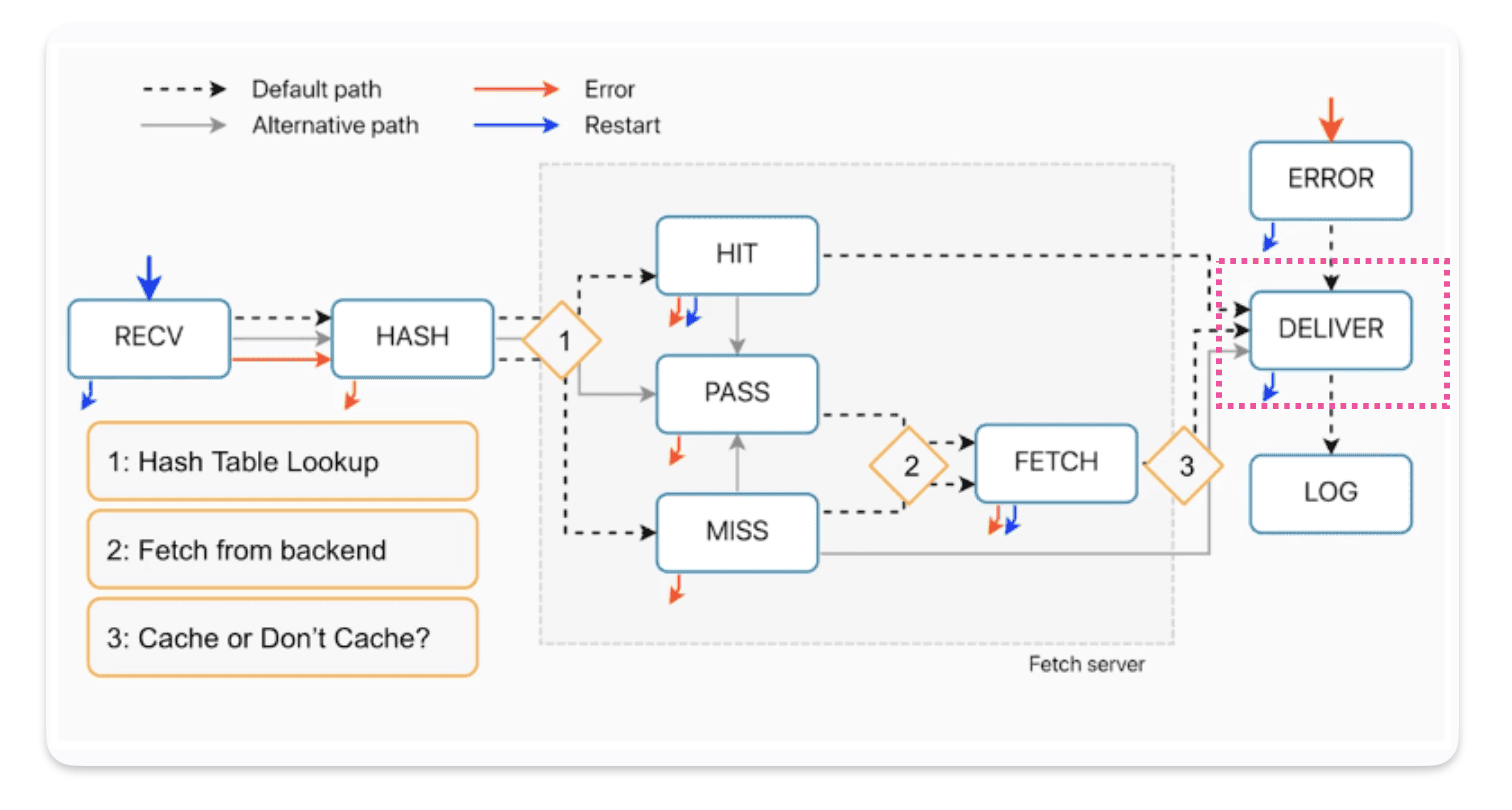

When a request comes into Fastly, a load balancer assigns it to one of the nodes in the POP, and that node acts as the delivery node.

Next comes a domain lookup: the value of the request’s Host header (say, example.com) is looked up in a large table on the node to find the service configuration that has a matching domain. That service configuration is then run, and what we’re looking at here are all the subroutines that make up that service configuration.

We assume the request is cacheable, so caching is being attempted. Starting on the left, when a request comes in, receive is the first subroutine to run. We can follow the dashed line as it goes from receive to hash.

After hash comes the hash table lookup on the node, to see whether the item is already cached.

The flow then splits into either a hit or a miss.

This becomes a decision point:

- If we already have the item, it’s a hit and the hit subroutine runs. Following the same dashed line from hit: the item is in cache, so it’s handed over to the deliver subroutine, deliver runs, and the response is handed off to the end user.

- If we don’t have the item (there’s no match in the hash table, so it isn’t in cache), it’s a miss and the miss subroutine runs. Then comes a second process, the fetch from the backend: since we don’t have the item, we need to request it from the backend, and this is where the object is fetched. After that, the fetch subroutine runs.

After fetch runs, there’s another process where the response object is actually cached or not cached. Either way, whatever comes out of fetch is handed off to deliver: the deliver subroutine runs, and the response is sent to the end user.
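The default transitions described above can be sketched as a partial custom VCL file. This is a simplified sketch modeled on Fastly’s custom VCL boilerplate, not a complete service: the `#FASTLY …` comments mark where Fastly injects its generated code, and vcl_pass, vcl_error and vcl_log, as well as the error and restart paths, are left out.

```vcl
# Simplified sketch of the default cacheable path:
# recv -> hash -> lookup -> hit or miss -> (fetch) -> deliver
sub vcl_recv {
  #FASTLY recv
  return(lookup);    # continue to vcl_hash, then the hash table (cache) lookup
}

sub vcl_hash {
  set req.hash += req.url;        # the cache key is built from the URL...
  set req.hash += req.http.host;  # ...and the Host header
  #FASTLY hash
  return(hash);                   # the lookup then decides between hit and miss
}

sub vcl_hit {
  #FASTLY hit
  return(deliver);   # object already in cache: go straight to vcl_deliver
}

sub vcl_miss {
  #FASTLY miss
  return(fetch);     # not in cache: fetch the object from the backend
}

sub vcl_fetch {
  #FASTLY fetch
  return(deliver);   # store the response (according to its TTL), then go to vcl_deliver
}

sub vcl_deliver {
  #FASTLY deliver
  return(deliver);   # send the response to the end user
}
```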

VCL_recv

This is our first subroutine, the first point of contact. It’s used for:

- Normalizing client input:
  - normalizing headers
  - normalizing the request URL
  - stripping some query parameters
- Switching between backends (assigning a specific backend to a request)
- Applying authentication (checking JWTs, API keys, geolocation, IPs, paywalls…)
- Triggering synthetic responses for known paths
- Passing requests that should not be cached

VCL_recv is the best place to make these changes and standardize client input. It’s very similar to Node.js Express middleware.
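A few of the uses listed above, sketched in Fastly VCL. Assumptions: `F_api` is a hypothetical backend name, `X-API-Key` a hypothetical header, 601 an arbitrary custom status used to trigger a synthetic response, and the query parameter names are only examples.

```vcl
sub vcl_recv {
  #FASTLY recv

  # Normalize the URL: drop tracking parameters (example names), then sort the rest
  # so equivalent URLs end up with the same cache key.
  set req.url = querystring.filter(req.url, "utm_source" + querystring.filtersep() + "utm_medium");
  set req.url = querystring.sort(req.url);

  # Authentication at the edge: reject API calls without a key (hypothetical header).
  if (req.url.path ~ "^/api/" && !req.http.X-API-Key) {
    error 403 "Forbidden";
  }

  # Switch backends: send API traffic to a dedicated backend (hypothetical name).
  if (req.url.path ~ "^/api/") {
    set req.backend = F_api;
  }

  # Synthetic response for a known path; the body is built in vcl_error.
  if (req.url.path == "/robots.txt") {
    error 601 "robots";
  }

  # Don't cache requests that carry credentials.
  if (req.http.Authorization) {
    return(pass);
  }

  return(lookup);
}

sub vcl_error {
  #FASTLY error
  # Turn the custom 601 status into a plain-text 200 response.
  if (obj.status == 601) {
    set obj.status = 200;
    set obj.response = "OK";
    set obj.http.Content-Type = "text/plain";
    synthetic {"User-agent: *
Disallow:"};
    return(deliver);
  }
  return(deliver);
}
```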

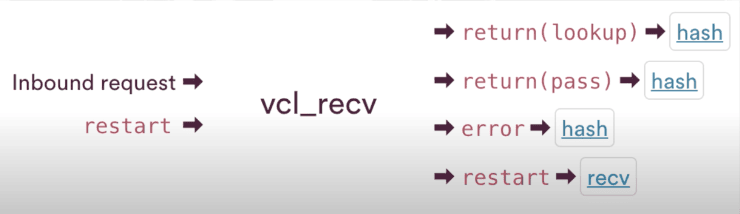

How do requests enter and exit the VCL_recv subroutine?

How do they enter VCL_recv?

- Inbound request: the first way requests enter VCL_recv.

- Restart: if restart is called, you leave whichever subroutine you’re in and return to the beginning of the VCL flow.

How do they exit VCL_recv?

- return(lookup): the default exit. It refers to a lookup in the hash table: you’re checking whether the object is already in cache.

- return(pass): used when you don’t want to cache.

- error: calling error takes you to the error subroutine.

- restart: as above, you leave the current subroutine and go back to the beginning of the VCL flow.

Where do requests go after VCL_recv?

After receive, all requests go to hash, but from there they end up in different places:

Default path (return(lookup)): hash, then the cache table lookup, then either a cache HIT or a cache MISS.

Calling pass in receive: hash still runs and generates the hash key, but the request then goes to pass instead of ending up as a hit or a miss.

Error: hash and then straight to error (as we don’t want to cache errors).

The VCL_recv subroutine in detail

```vcl
sub vcl_recv {
  #--FASTLY RECV BEGIN
  if (req.restarts == 0) {
    if (!req.http.X-Timer) {
      set req.http.X-Timer = "S" time.start.sec "." time.start.usec_frac;
    }
    set req.http.X-Timer = req.http.X-Timer ",VS0";
  }

  declare local var.fastly_req_do_shield BOOL;
  set var.fastly_req_do_shield = (req.restarts == 0);

  # default conditions
  # end default conditions

  #--FASTLY RECV END

  if (req.request != "HEAD" && req.request != "GET" && req.request != "FASTLYPURGE") {
    return(pass);
  }

  return(lookup);
}
```

Let’s analyze this code:

```vcl
if (req.restarts == 0) {
  if (!req.http.X-Timer) {
    set req.http.X-Timer = "S" time.start.sec "." time.start.usec_frac;
  }
  set req.http.X-Timer = req.http.X-Timer ",VS0";
}
```

Here a timer header, req.http.X-Timer, is added.

It’s used for timing metrics. It’s set at the very beginning, in receive, with the request start time (S followed by the seconds and the fractional part) plus the ,VS0 marker, and it’s completed later, at the end, in deliver. It’s only initialized when the number of restarts is zero, so a restarted request keeps its original value.

```vcl
declare local var.fastly_req_do_shield BOOL;
set var.fastly_req_do_shield = (req.restarts == 0);
```

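Then a shield variable, var.fastly_req_do_shield, is declared and set. It’s a local boolean that is only true on the first pass through the flow (when req.restarts is 0); as its name suggests, it’s used by Fastly’s generated shielding logic.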

```vcl
if (req.request != "HEAD" && req.request != "GET" && req.request != "FASTLYPURGE") {
  return(pass);
}
```

If the request method is not HEAD, not GET, and not a Fastly purge (FASTLYPURGE), it returns pass. In other words, if the request is none of these three things, we are not going to cache it: we call the pass behavior.

```vcl
return(lookup);
```

Then, at the end of the receive subroutine, we have our default transition call, return(lookup).

VCL_fetch

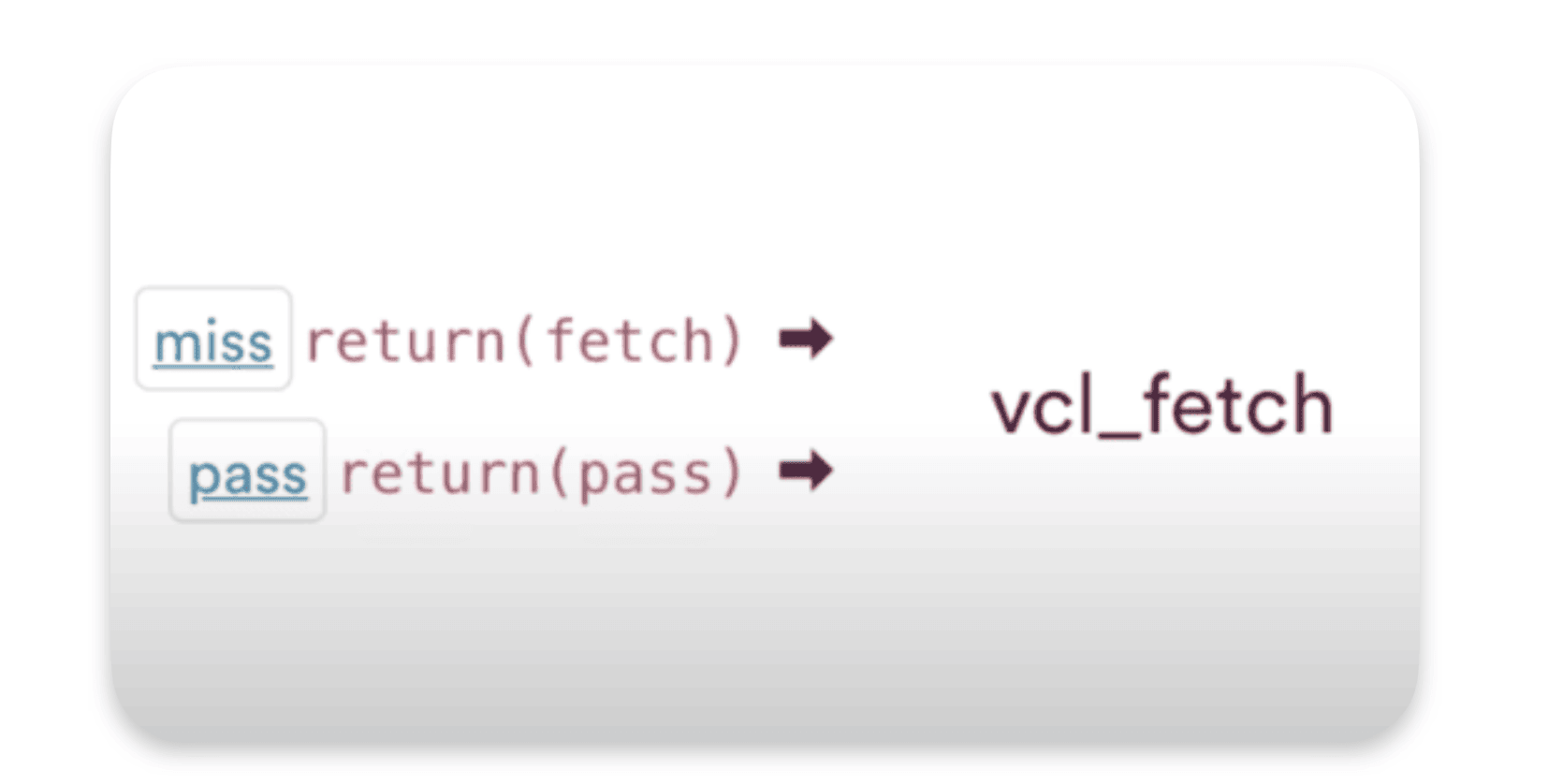

How do requests enter VCL_fetch?

We go to fetch after a PASS or a MISS. On a PASS, we get the data from our backend (without caching it). The same goes for a MISS: as we don’t have the object in cache, we grab it from our backend and then store it in the cache.

VCL_fetch is used to:

- Set a default TTL.

- Set specific TTLs for content based on things like file path, content type or other relevant variables.

- Modify or remove response headers set by the origin, or add entirely new ones.

- Configure rules for serving stale content, using `beresp.stale_while_revalidate` and/or `beresp.stale_if_error`.

When the backend response arrives, before fetch runs, Varnish looks for any caching headers that Fastly considers valid (such as Surrogate-Control, Cache-Control or Expires). If it finds them with values it can use, it uses them to set the TTL for this particular response object (beresp.ttl).
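The uses listed above might look like this in vcl_fetch. This is a sketch with illustrative values: the file extensions, content type, TTLs and the `X-Origin-Fetched` header name are assumptions, not recommendations.

```vcl
sub vcl_fetch {
  #FASTLY fetch

  # Specific TTLs based on file extension or content type (illustrative values).
  if (req.url.ext ~ "^(?:css|js|woff2)$") {
    set beresp.ttl = 86400s;          # long-lived static assets: one day
  } else if (beresp.http.Content-Type ~ "^text/html") {
    set beresp.ttl = 60s;             # HTML changes more often: one minute
  }

  # Modify headers coming from the origin.
  unset beresp.http.Server;                    # hide origin software details
  set beresp.http.X-Origin-Fetched = "true";   # hypothetical new header

  # Serving stale: keep serving the old object for 60s while revalidating,
  # and for up to a day if the origin is failing.
  set beresp.stale_while_revalidate = 60s;
  set beresp.stale_if_error = 86400s;

  return(deliver);
}
```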

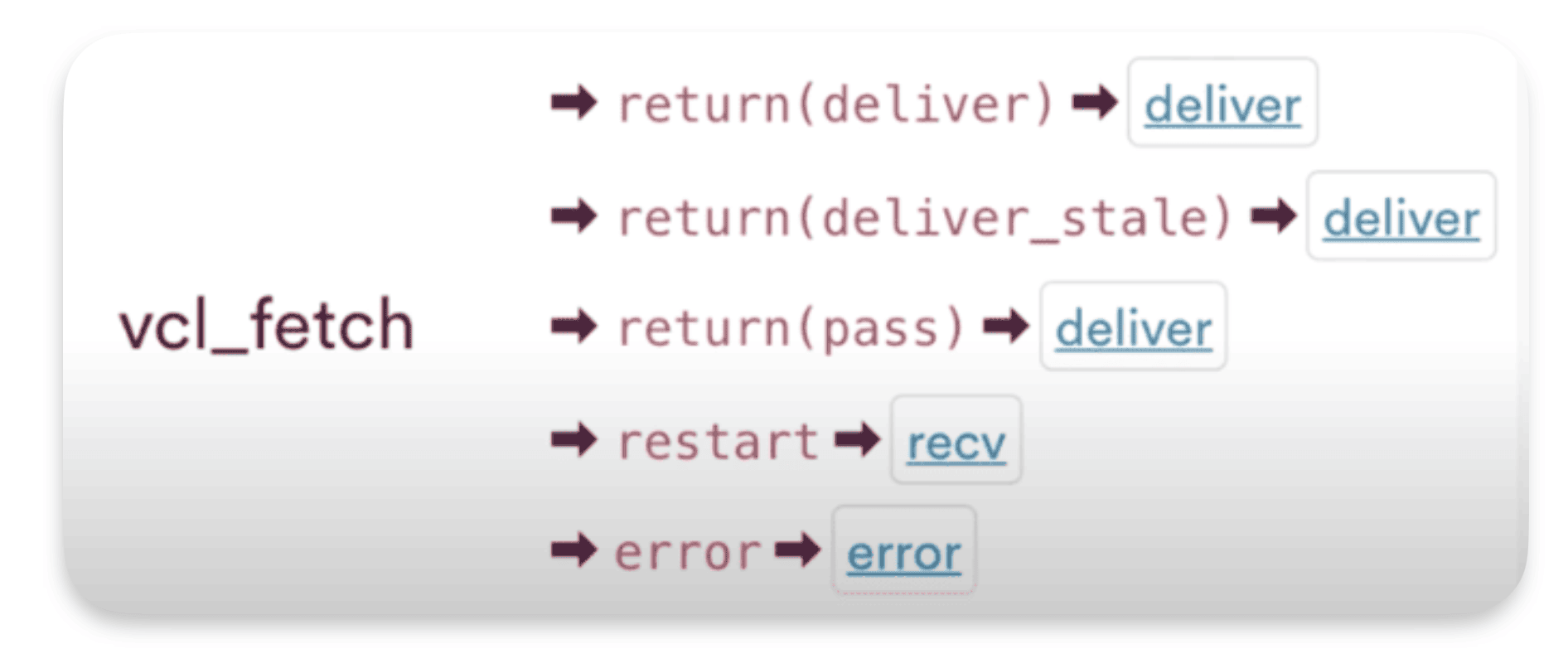

How do requests exit VCL_fetch?

The default transition to exit VCL_fetch is return(deliver).

- return(deliver)

- return(deliver_stale): related to the serving-stale options above; it delivers a stale copy of the object instead.

- return(pass): the response is not cached, but it still goes on to deliver.

- restart: goes back to receive.

- error: goes straight to the error subroutine.

return(deliver) is the default because we normally expect to cache the responses we get from the backend.
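To illustrate these exits, here is a sketch that serves a stale copy when the origin returns a 5xx and passes on responses that must not be stored. It assumes Fastly’s `stale.exists` variable (true when a stale object is available) is usable in vcl_fetch; the `no-store` check is just one example of a pass condition.

```vcl
sub vcl_fetch {
  #FASTLY fetch

  # Origin error: serve the stale copy if one exists, otherwise go to vcl_error.
  if (beresp.status >= 500 && beresp.status < 600) {
    if (stale.exists) {
      return(deliver_stale);
    }
    error 503 "Backend unavailable";
  }

  # Response must not be stored: don't cache it, but still deliver it.
  if (beresp.http.Cache-Control ~ "no-store") {
    return(pass);
  }

  return(deliver);   # default: cache and deliver
}
```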

The VCL_fetch subroutine in detail

```vcl
sub vcl_fetch {
  declare local var.fastly_disable_restart_on_error BOOL;

  #--FASTLY FETCH BEGIN

  # record which cache ran vcl_fetch for this object and when
  set beresp.http.Fastly-Debug-Path = "(F " server.identity " " now.sec ") " if(beresp.http.Fastly-Debug-Path, beresp.http.Fastly-Debug-Path, "");

  # generic mechanism to vary on something
  if (req.http.Fastly-Vary-String) {
    if (beresp.http.Vary) {
      set beresp.http.Vary = "Fastly-Vary-String, " beresp.http.Vary;
    } else {
      set beresp.http.Vary = "Fastly-Vary-String, ";
    }
  }

  #--FASTLY FETCH END

  if (!var.fastly_disable_restart_on_error) {
    if ((beresp.status == 500 || beresp.status == 503) && req.restarts < 1 && (req.request == "GET" || req.request == "HEAD")) {
      restart;
    }
  }

  if(req.restarts > 0 ) {
    set beresp.http.Fastly-Restarts = req.restarts;
  }

  if (beresp.http.Set-Cookie) {
    set req.http.Fastly-Cachetype = "SETCOOKIE";
    return (pass);
  }

  if (beresp.http.Cache-Control ~ "private") {
    set req.http.Fastly-Cachetype = "PRIVATE";
    return (pass);
  }

  if (beresp.status == 500 || beresp.status == 503) {
    set req.http.Fastly-Cachetype = "ERROR";
    set beresp.ttl = 1s;
    set beresp.grace = 5s;
    return (deliver);
  }

  if (beresp.http.Expires || beresp.http.Surrogate-Control ~ "max-age" || beresp.http.Cache-Control ~"(?:s-maxage|max-age)") {
    # keep the ttl here
  } else {
    # apply the default ttl
    set beresp.ttl = 3600s;
  }

  return(deliver);
}
```

Let’s explore the basic code in VCL_fetch:

```vcl
set beresp.http.Fastly-Debug-Path = "(F " server.identity " " now.sec ") " if(beresp.http.Fastly-Debug-Path, beresp.http.Fastly-Debug-Path, "");
```

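This records which cache node ran vcl_fetch for this object and when: it prepends (F, the node identity and the current time in seconds) to any existing Fastly-Debug-Path value. Fastly debug headers like this one collect that information and expose it as additional response headers when you send the matching request header.

To get this header in your response, request one of your URLs with the header Fastly-Debug: 1.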

```vcl
if (req.http.Fastly-Vary-String) {
  if (beresp.http.Vary) {
    set beresp.http.Vary = "Fastly-Vary-String, " beresp.http.Vary;
  } else {
    set beresp.http.Vary = "Fastly-Vary-String, ";
  }
}
```

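This is a generic mechanism to vary on something. If the request carries a Fastly-Vary-String header, Fastly-Vary-String is prepended to the response’s Vary header (or Vary is created with it). The Vary header tells the cache to store a separate variant for each value of the listed request headers, just as a backend uses it to make a response vary on User-Agent, for example. The extra entry is removed again in VCL_deliver before the response reaches the client.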
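As an example of this mechanism, a sketch assuming the fetch code above is in place: vcl_recv sets Fastly-Vary-String to a small, normalized value, here a hypothetical mobile/desktop bucket derived from User-Agent, so the cache keeps one variant per bucket instead of one per raw User-Agent string.

```vcl
sub vcl_recv {
  #FASTLY recv

  # Coarse device bucket (hypothetical rule): since vcl_fetch adds
  # Fastly-Vary-String to Vary, the cache holds at most two variants per URL.
  if (req.http.User-Agent ~ "(?i)mobile") {
    set req.http.Fastly-Vary-String = "mobile";
  } else {
    set req.http.Fastly-Vary-String = "desktop";
  }

  return(lookup);
}
```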

```vcl
if (!var.fastly_disable_restart_on_error) {
  if ((beresp.status == 500 || beresp.status == 503) && req.restarts < 1 && (req.request == "GET" || req.request == "HEAD")) {
    restart;
  }
}
```

If the backend returns a 500 or a 503, the number of restarts is less than one, and the request method is GET or HEAD, a restart is triggered (unless var.fastly_disable_restart_on_error has been set to true).

This mechanism is triggered only once (req.restarts < 1).

```vcl
if(req.restarts > 0 ) {
  set beresp.http.Fastly-Restarts = req.restarts;
}
```

If the number of restarts is greater than zero (we’ve already restarted), we set a Fastly-Restarts backend response header equal to the number of restarts.

```vcl
if (beresp.http.Set-Cookie) {
  set req.http.Fastly-Cachetype = "SETCOOKIE";
  return (pass);
}
```

If the backend sets a Set-Cookie header, this response is unique to the user: it’s flagged as SETCOOKIE and passed, so we don’t cache it.

```vcl
if (beresp.http.Cache-Control ~ "private") {
  set req.http.Fastly-Cachetype = "PRIVATE";
  return (pass);
}
```

If the Cache-Control header contains private, the response is flagged as PRIVATE and we pass.

```vcl
if (beresp.status == 500 || beresp.status == 503) {
  set req.http.Fastly-Cachetype = "ERROR";
  set beresp.ttl = 1s;
  set beresp.grace = 5s;
  return (deliver);
}
```

If the response from the backend is an error (a 500 or a 503), we cache it for just one second (set beresp.ttl = 1s;), with a five-second grace period (set beresp.grace = 5s;).

This one-second window, during which the error is served from cache, helps offload the strain on the origin and gives it a chance to recover. That’s why this branch ends with return(deliver): we are caching the response, but only for one second.

```vcl
if (beresp.http.Expires || beresp.http.Surrogate-Control ~ "max-age" || beresp.http.Cache-Control ~"(?:s-maxage|max-age)") {
  # keep the ttl here
} else {
  # apply the default ttl
  set beresp.ttl = 3600s;
}
```

If there’s an Expires header, a Surrogate-Control header with max-age, or a Cache-Control header with either s-maxage or max-age, we can pull a TTL out of those values, so we keep the TTL that was already set. Otherwise, the default TTL of 3600s (one hour) is applied.

```vcl
return(deliver);
```

Finally, if none of the conditions above triggered a return(pass) or return(deliver), the subroutine always ends with the default, unconditional return(deliver).

VCL_deliver

Deliver is the last subroutine to run before the log subroutine. It runs before the first byte of the response is sent to the user, and it’s the last chance to modify the response and its headers.

Deliver runs on every single response, whether it comes from cache, from the backend, or from an error.

It’s an ideal place for adding debugging information (debug headers, for example) or user-specific session data that must not be shared with other users.
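A sketch of these uses in vcl_deliver. The header names `X-Debug-Restarts`, `X-Client-Country` and `X-Use-Fallback` are hypothetical, and the fallback logic that would read `X-Use-Fallback` in vcl_recv is not shown.

```vcl
sub vcl_deliver {
  #FASTLY deliver

  # Debugging information, only for requests that ask for it (hypothetical header).
  if (req.http.Fastly-Debug) {
    set resp.http.X-Debug-Restarts = req.restarts;
  }

  # Per-user data: set on every response after the cache, so each user gets their own value.
  set resp.http.X-Client-Country = client.geo.country_code;

  # Last chance to restart: retry once on a 5xx, flagging the request so that
  # vcl_recv (not shown) can pick a fallback backend. The flag name is hypothetical.
  if (resp.status >= 500 && req.restarts < 1) {
    set req.http.X-Use-Fallback = "1";
    restart;
  }

  return(deliver);
}
```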

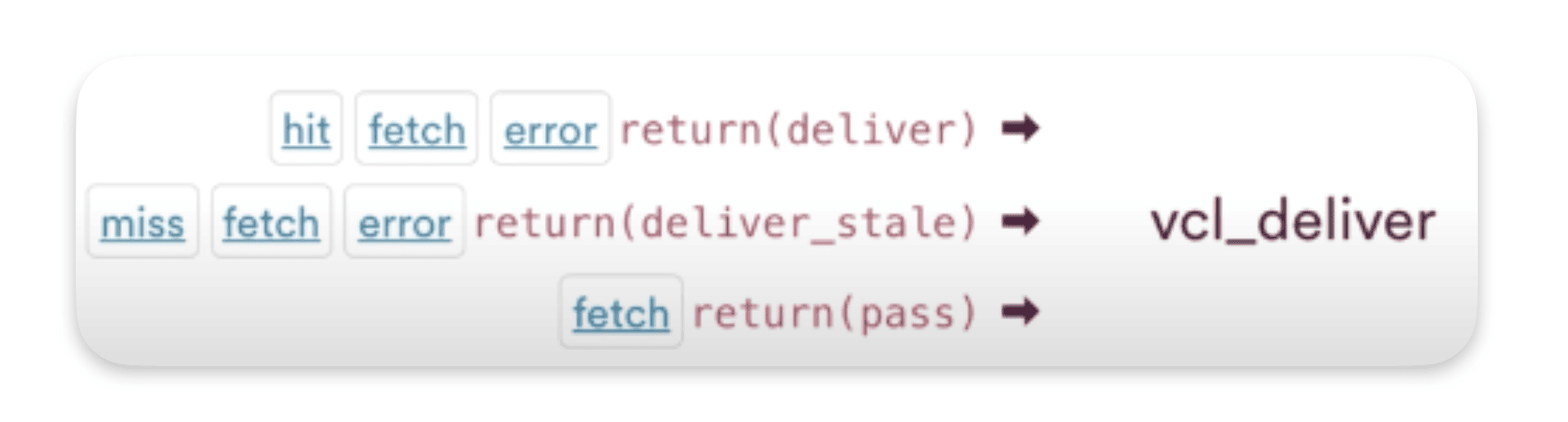

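How do requests enter VCL_deliver?

- From hit: a cache hit is handed straight to deliver.

- From fetch, after a miss: the backend response is handed to deliver once fetch has run.

- From fetch, with return(pass): from the fetch subroutine you can also call return(pass) to not cache the response and pass it off to VCL_deliver.

- From error: error responses are delivered too.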

How do requests exit VCL_deliver?

- return(deliver): the default transition to exit VCL_deliver; the response is sent to the end user.

- restart: goes back to the beginning, at receive.

Let’s explore the VCL for deliver:

```vcl
sub vcl_deliver {
  #--FASTLY DELIVER BEGIN

  # record the journey of the object, expose it only if req.http.Fastly-Debug.
  if (req.http.Fastly-Debug || req.http.Fastly-FF) {
    set resp.http.Fastly-Debug-Path = "(D " server.identity " " now.sec ") "
      if(resp.http.Fastly-Debug-Path, resp.http.Fastly-Debug-Path, "");

    set resp.http.Fastly-Debug-TTL = if(obj.hits > 0, "(H ", "(M ")
      server.identity
      if(req.http.Fastly-Tmp-Obj-TTL && req.http.Fastly-Tmp-Obj-Grace, " " req.http.Fastly-Tmp-Obj-TTL " " req.http.Fastly-Tmp-Obj-Grace " ", " - - ")
      if(resp.http.Age, resp.http.Age, "-")
      ") "
      if(resp.http.Fastly-Debug-TTL, resp.http.Fastly-Debug-TTL, "");

    set resp.http.Fastly-Debug-Digest = digest.hash_sha256(req.digest);
  } else {
    unset resp.http.Fastly-Debug-Path;
    unset resp.http.Fastly-Debug-TTL;
    unset resp.http.Fastly-Debug-Digest;
  }

  # add or append X-Served-By/X-Cache(-Hits)
  {
    if(!resp.http.X-Served-By) {
      set resp.http.X-Served-By = server.identity;
    } else {
      set resp.http.X-Served-By = resp.http.X-Served-By ", " server.identity;
    }

    set resp.http.X-Cache = if(resp.http.X-Cache, resp.http.X-Cache ", ","") if(fastly_info.state ~ "HIT(?:-|\z)", "HIT", "MISS");

    if(!resp.http.X-Cache-Hits) {
      set resp.http.X-Cache-Hits = obj.hits;
    } else {
      set resp.http.X-Cache-Hits = resp.http.X-Cache-Hits ", " obj.hits;
    }
  }

  if (req.http.X-Timer) {
    set resp.http.X-Timer = req.http.X-Timer ",VE" time.elapsed.msec;
  }

  # VARY FIXUP
  {
    # remove before sending to client
    set resp.http.Vary = regsub(resp.http.Vary, "Fastly-Vary-String, ", "");
    if (resp.http.Vary ~ "^\s*$") {
      unset resp.http.Vary;
    }
  }

  unset resp.http.X-Varnish;

  # Pop the surrogate headers into the request object so we can reference them later
  set req.http.Surrogate-Key = resp.http.Surrogate-Key;
  set req.http.Surrogate-Control = resp.http.Surrogate-Control;

  # If we are not forwarding or debugging unset the surrogate headers so they are not present in the response
  if (!req.http.Fastly-FF && !req.http.Fastly-Debug) {
    unset resp.http.Surrogate-Key;
    unset resp.http.Surrogate-Control;
  }

  if(resp.status == 550) {
    return(deliver);
  }

  #default response conditions

  #--FASTLY DELIVER END

  return(deliver);
}
```

Let’s explore the deliver subroutine in detail:

```vcl
if (req.http.Fastly-Debug || req.http.Fastly-FF) {
  set resp.http.Fastly-Debug-Path = "(D " server.identity " " now.sec ") "
    if(resp.http.Fastly-Debug-Path, resp.http.Fastly-Debug-Path, "");

  set resp.http.Fastly-Debug-TTL = if(obj.hits > 0, "(H ", "(M ")
    server.identity
    if(req.http.Fastly-Tmp-Obj-TTL && req.http.Fastly-Tmp-Obj-Grace, " " req.http.Fastly-Tmp-Obj-TTL " " req.http.Fastly-Tmp-Obj-Grace " ", " - - ")
    if(resp.http.Age, resp.http.Age, "-")
    ") "
    if(resp.http.Fastly-Debug-TTL, resp.http.Fastly-Debug-TTL, "");

  set resp.http.Fastly-Debug-Digest = digest.hash_sha256(req.digest);
} else {
  unset resp.http.Fastly-Debug-Path;
  unset resp.http.Fastly-Debug-TTL;
  unset resp.http.Fastly-Debug-Digest;
}
```

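Here we set three Fastly debug headers, but only when the request carries Fastly-Debug or Fastly-FF (the latter is present when the request was forwarded by another Fastly node, for example when shielding is on); otherwise they are removed from the response:

resp.http.Fastly-Debug-Path: shows which nodes the request went through (the delivery and fetch nodes).

resp.http.Fastly-Debug-TTL: records, for each node, whether it was a hit (H) or a miss (M), along with the TTL and grace values (when available) and the Age header.

resp.http.Fastly-Debug-Digest: a SHA-256 hash of the request’s cache key digest (req.digest).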

```vcl
# add or append X-Served-By/X-Cache(-Hits)
{
  if(!resp.http.X-Served-By) {
    set resp.http.X-Served-By = server.identity;
  } else {
    set resp.http.X-Served-By = resp.http.X-Served-By ", " server.identity;
  }

  set resp.http.X-Cache = if(resp.http.X-Cache, resp.http.X-Cache ", ","") if(fastly_info.state ~ "HIT(?:-|\z)", "HIT", "MISS");

  if(!resp.http.X-Cache-Hits) {
    set resp.http.X-Cache-Hits = obj.hits;
  } else {
    set resp.http.X-Cache-Hits = resp.http.X-Cache-Hits ", " obj.hits;
  }
}
```

`X-Served-By` tells you which node(s) the request passed through; each node appends its own identity. `X-Cache` tells you whether the request was a hit or a miss: when we looked in cache, did we already have the object (HIT) or not (MISS)?

NB: X-Cache only ever reports HIT or MISS. Even when the pass behavior is called, it’s reported as a MISS in this header.

Keep in mind: a MISS might be hiding a PASS!

X-Cache-Hits: the delivery node reports how many times it has served a hit for this particular object from its cache. Keep in mind that requests are load balanced across the delivery nodes of the POP, so X-Cache-Hits can differ from one request to the next.
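Since X-Cache collapses a PASS into MISS, one way to tell them apart is to expose the raw `fastly_info.state` value, which distinguishes states such as HIT, MISS and PASS (Fastly’s documentation lists the exact values). A minimal sketch; the `X-Cache-State` header name is hypothetical, and it’s only added for debug requests.

```vcl
sub vcl_deliver {
  #FASTLY deliver

  # Expose the detailed cache state only when debugging is requested,
  # so a PASS is not hidden behind "MISS" (hypothetical header name).
  if (req.http.Fastly-Debug) {
    set resp.http.X-Cache-State = fastly_info.state;
  }

  return(deliver);
}
```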

```vcl
if (req.http.X-Timer) {
  set resp.http.X-Timer = req.http.X-Timer ",VE" time.elapsed.msec;
}
```

This takes the initial X-Timer value (set in VCL_recv) and appends ,VE followed by the elapsed time in milliseconds.

```vcl
# VARY FIXUP
{
  # remove before sending to client
  set resp.http.Vary = regsub(resp.http.Vary, "Fastly-Vary-String, ", "");
  if (resp.http.Vary ~ "^\s*$") {
    unset resp.http.Vary;
  }
}
```

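The "Fastly-Vary-String, " prefix added in VCL_fetch is removed before the response is sent to the client. Then if (resp.http.Vary ~ "^\s*$") checks whether the Vary header is now empty (or only whitespace), in which case it’s removed entirely.

```vcl
unset resp.http.X-Varnish;
```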

This is an internal diagnostic header, so it’s just being unset

```vcl
# Pop the surrogate headers into the request object so we can reference them later
set req.http.Surrogate-Key = resp.http.Surrogate-Key;
set req.http.Surrogate-Control = resp.http.Surrogate-Control;

# If we are not forwarding or debugging unset the surrogate headers so they are not present in the response
if (!req.http.Fastly-FF && !req.http.Fastly-Debug) {
  unset resp.http.Surrogate-Key;
  unset resp.http.Surrogate-Control;
}
```

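Here Surrogate-Key and Surrogate-Control are copied from the response (resp) to the request, so they can still be referenced later (for example, when logging) even after they have been removed from the response.

They are removed from the response unless the request was forwarded by another Fastly node (Fastly-FF) or debugging was requested. When this POP is acting as a shield, the edge POP in front of it still needs these headers, so they are kept.

What the documentation says about Fastly-FF: this header serves as a simple mechanism to determine whether the current data center is acting as an edge or a shield.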
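Following the same append pattern as X-Served-By, here is a sketch that records, on each Fastly hop, whether the node handling the request was acting as a shield (the request was forwarded by another Fastly node, so Fastly-FF is present) or as an edge. The `X-Pop-Role` header is hypothetical.

```vcl
sub vcl_deliver {
  #FASTLY deliver

  # Append this hop's role: "shield" when the request came from another Fastly node,
  # "edge" otherwise. With shielding, the client typically sees "shield, edge".
  set resp.http.X-Pop-Role = if(resp.http.X-Pop-Role, resp.http.X-Pop-Role ", ", "")
    if(req.http.Fastly-FF, "shield", "edge");

  return(deliver);
}
```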

VCL_deliver conclusion:

To sum up, VCL_deliver completes entirely before the response is sent to the user, and it runs for each response separately. This is where you can add debugging details and user-specific session data that shouldn’t be shared with others. It’s also your final opportunity to restart. The key transition calls here are return(deliver) and restart.

Takeaways

- Receive:
  - Normalize input.
  - Select a backend.
  - Block at the edge.
  - Redirect at the edge.
  - Choose not to cache.
- Fetch:
  - Set custom TTL.
  - Modify headers from the backend.
  - Configure rules for serving stale.
  - Set default TTL.
- Deliver:
  - Runs on every response.
  - Adds debugging information.
  - Handles user-specific session data.
  - Last opportunity to restart.