12/03/2024

What’s request collapsing?

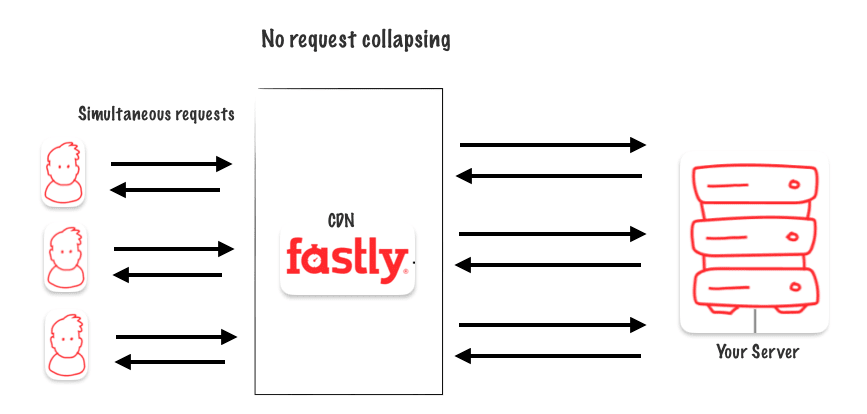

In a cache system, request collapsing applies when multiple identical requests for the same data arrive simultaneously or within a short period of time. Instead of processing each request separately, the cache collapses these identical requests into a single request to the backend, which reduces backend load and improves overall efficiency.

The goal is to guarantee that only one request for an object goes to the origin, instead of many. With Fastly, request collapsing is enabled by default for GET/HEAD requests and disabled for all other methods.

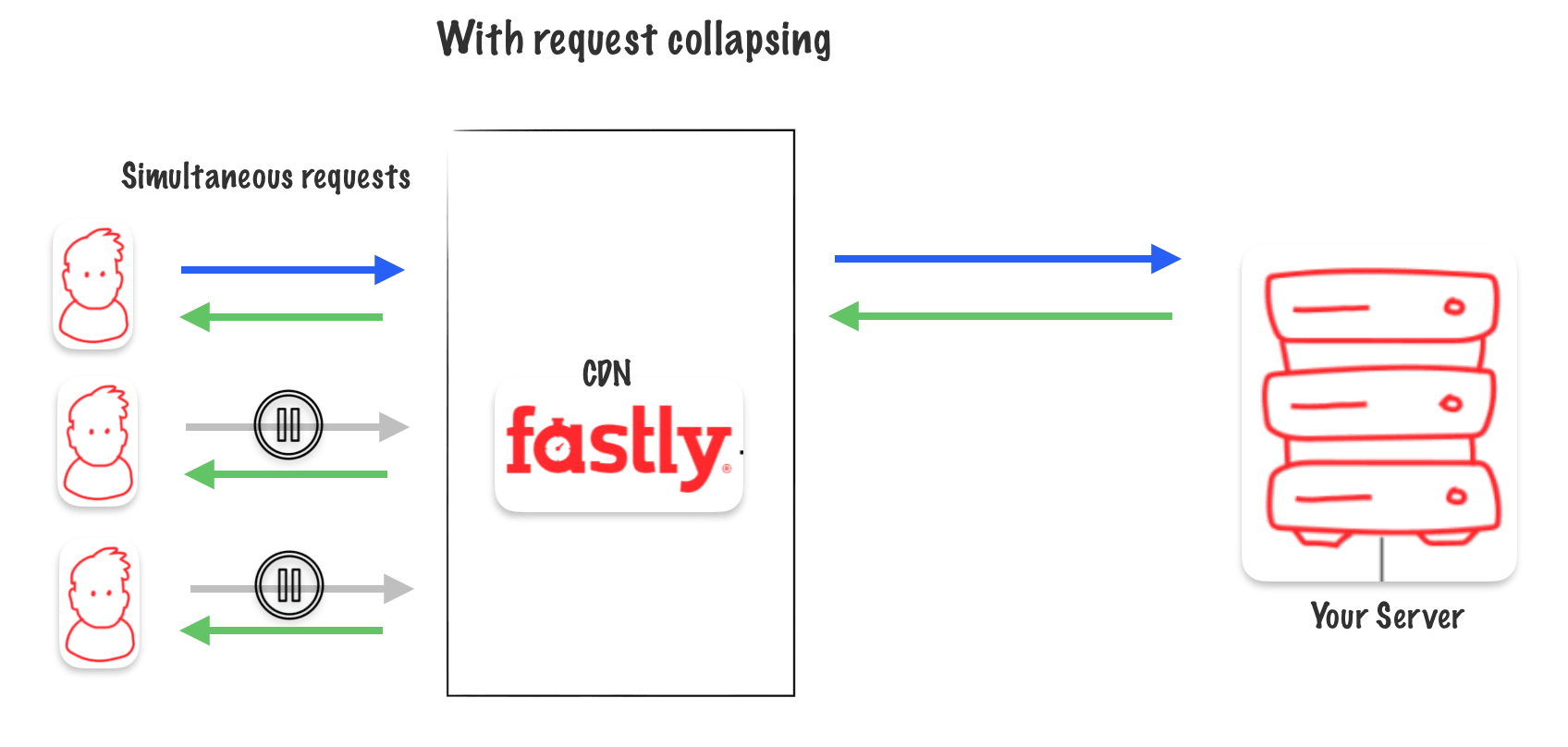

Request collapsing for a cacheable object

In request collapsing, when many requests for the same object come in at once, the system chooses one of them (called the “champion request”) to fetch the data from the origin and store it in the cache. The other requests are paused until the cache has been updated, and are then served from the cache. This way the origin receives one request instead of many.
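
The champion mechanism can be illustrated with a small Python simulation. This is only a sketch of the idea, not Fastly's implementation: the `CollapsingCache` class, the `slow_origin` function and the use of threads are all assumptions made for illustration, and the sketch assumes the origin fetch always succeeds.

```python
import threading
import time


class CollapsingCache:
    """Toy cache that collapses concurrent misses for the same key (illustrative only)."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin   # hypothetical callable standing in for the origin
        self._lock = threading.Lock()
        self._store = {}                  # key -> cached value
        self._in_flight = {}              # key -> Event set once the champion has finished
        self.origin_calls = 0

    def get(self, key):
        with self._lock:
            if key in self._store:
                return self._store[key]   # cache hit, no origin call
            event = self._in_flight.get(key)
            champion = event is None
            if champion:
                # First miss for this key: this request becomes the champion.
                event = threading.Event()
                self._in_flight[key] = event

        if champion:
            value = self._fetch(key)      # the only request that reaches the origin
            with self._lock:
                self.origin_calls += 1
                self._store[key] = value
                del self._in_flight[key]
            event.set()                   # wake up the paused requests
            return value

        event.wait()                      # paused until the champion has filled the cache
        with self._lock:
            return self._store[key]


def slow_origin(key):
    time.sleep(0.1)                       # simulate a slow backend
    return f"body of {key}"


cache = CollapsingCache(slow_origin)
results = []
threads = [
    threading.Thread(target=lambda: results.append(cache.get("/index.html")))
    for _ in range(10)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(len(results), cache.origin_calls)  # 10 1
```

Ten concurrent requests are answered, but the simulated origin is called only once.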

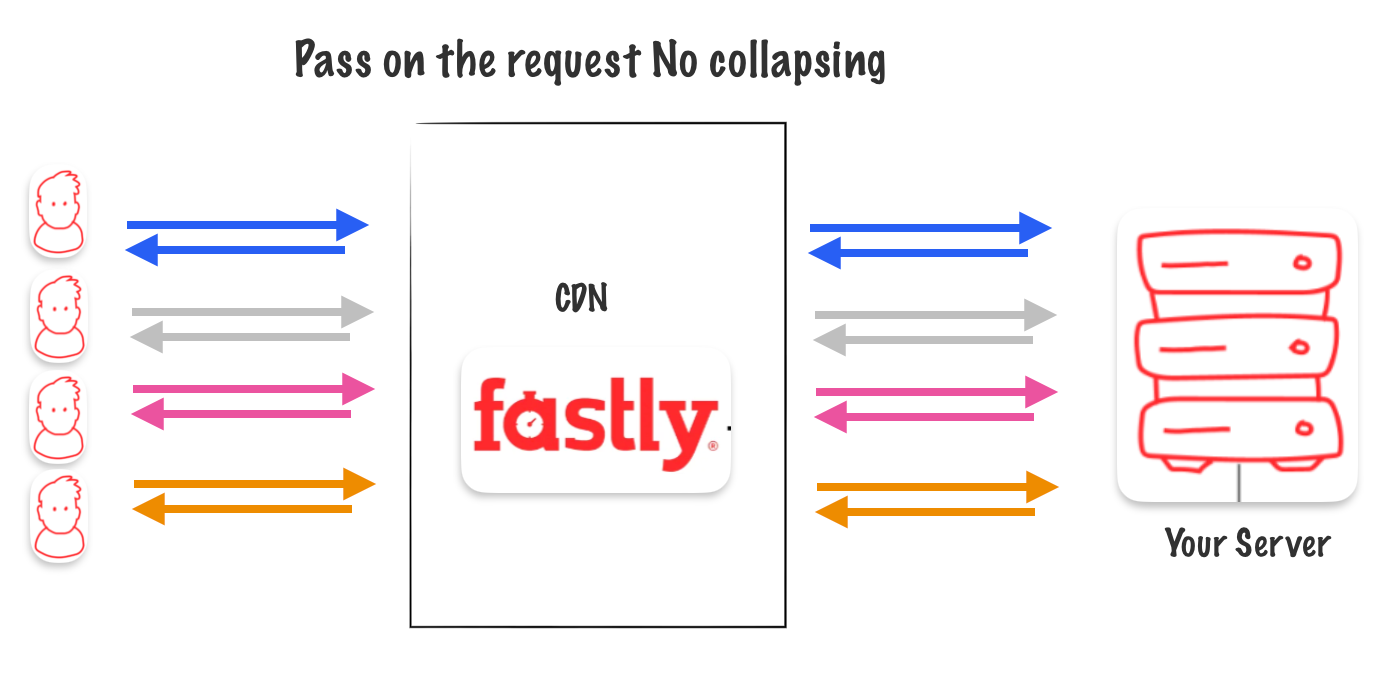

PASS Called on the request

You can turn off request collapsing for certain types of requests by calling PASS on the request. When this happens, each of those requests is sent directly to the origin instead of being collapsed with the others.
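
As a rough sketch, the decision described so far (collapsing applies to GET/HEAD by default, and a PASS on the request turns it off) can be written as a small predicate. The function name `should_collapse` and the `pass_on_request` flag are hypothetical names chosen for this example; they are not part of any Fastly API.

```python
# Methods for which collapsing is enabled by default, as stated in this article.
COLLAPSIBLE_METHODS = {"GET", "HEAD"}


def should_collapse(method: str, pass_on_request: bool = False) -> bool:
    """Return True if a request is eligible for request collapsing (illustrative rule)."""
    # A PASS on the request always disables collapsing for that request.
    return method.upper() in COLLAPSIBLE_METHODS and not pass_on_request


print(should_collapse("GET"))                         # True
print(should_collapse("POST"))                        # False
print(should_collapse("GET", pass_on_request=True))   # False
```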

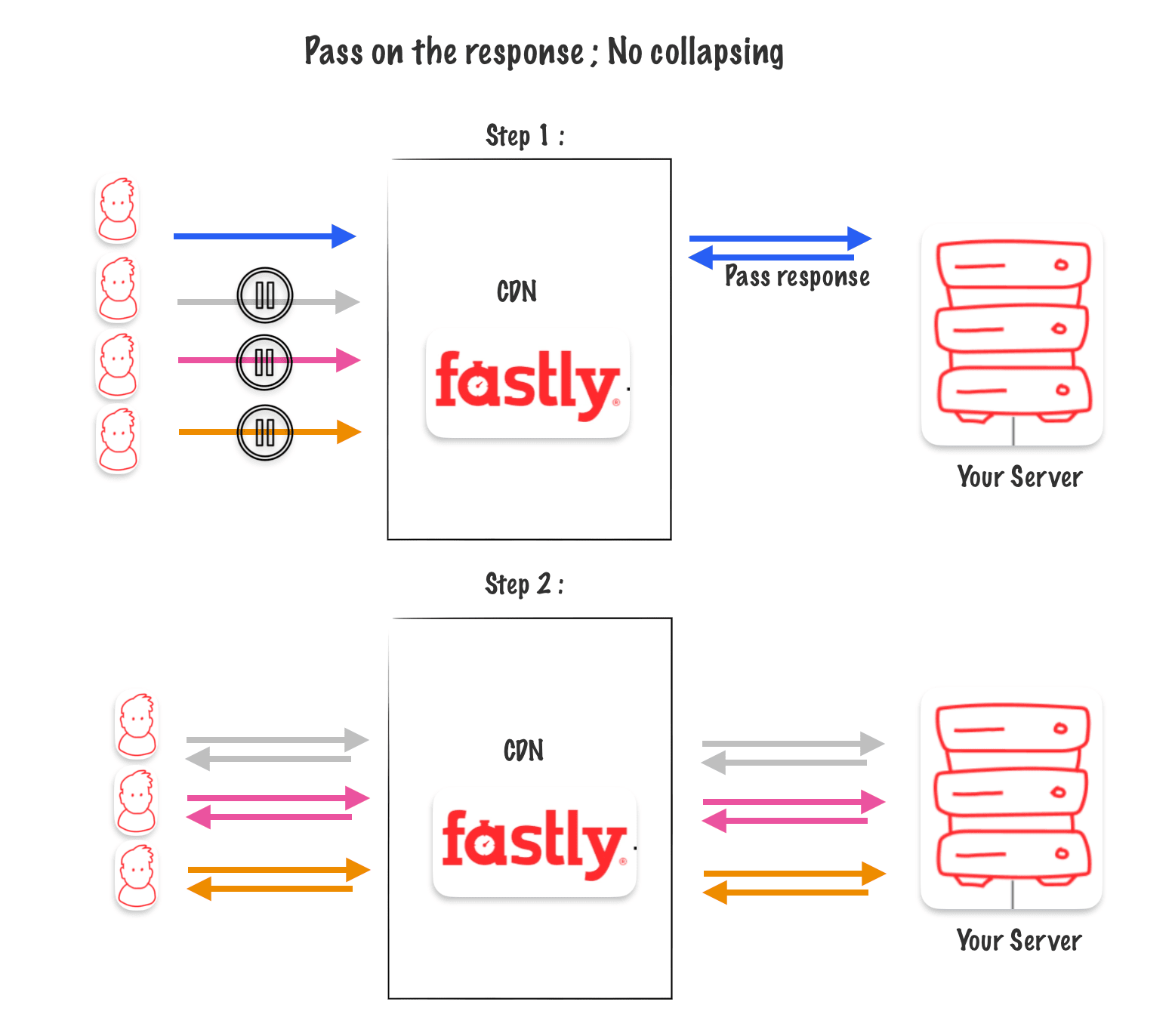

PASS Called on the response

If you choose to pass on the response (based on a header or a cookie being set, for example), the waiting requests are released after the first response arrives. However, this is less efficient than passing on the request, because the waiting requests have to wait for that first response before the cache knows they should go to the origin.

Hash key for pass on the response

When you pass on a response, the hash key for that request is marked with a “PASS” value for the next 120 seconds. During those 120 seconds, all requests with the same hash key also pass through the cache and go straight to the origin, without being collapsed.
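
To make the 120-second marker concrete, here is a minimal sketch of a table of PASS markers with an expiry time. The `PassMarkers` class and its method names are assumptions made for this example, and the injectable `clock` exists only so the behavior can be shown without actually waiting two minutes.

```python
import time

PASS_TTL_SECONDS = 120  # duration given in this article


class PassMarkers:
    """Remembers which hash keys are currently marked PASS (illustrative only)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._expires_at = {}             # hash key -> time at which the marker expires

    def mark_pass(self, hash_key):
        self._expires_at[hash_key] = self._clock() + PASS_TTL_SECONDS

    def is_pass(self, hash_key):
        expires = self._expires_at.get(hash_key)
        if expires is None:
            return False
        if self._clock() >= expires:
            del self._expires_at[hash_key]  # marker expired, collapsing applies again
            return False
        return True


# Usage with a fake clock so that time can be advanced by hand.
now = [0.0]
markers = PassMarkers(clock=lambda: now[0])
markers.mark_pass("example-hash-key")

now[0] = 60.0
print(markers.is_pass("example-hash-key"))   # True

now[0] = 121.0
print(markers.is_pass("example-hash-key"))   # False
```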

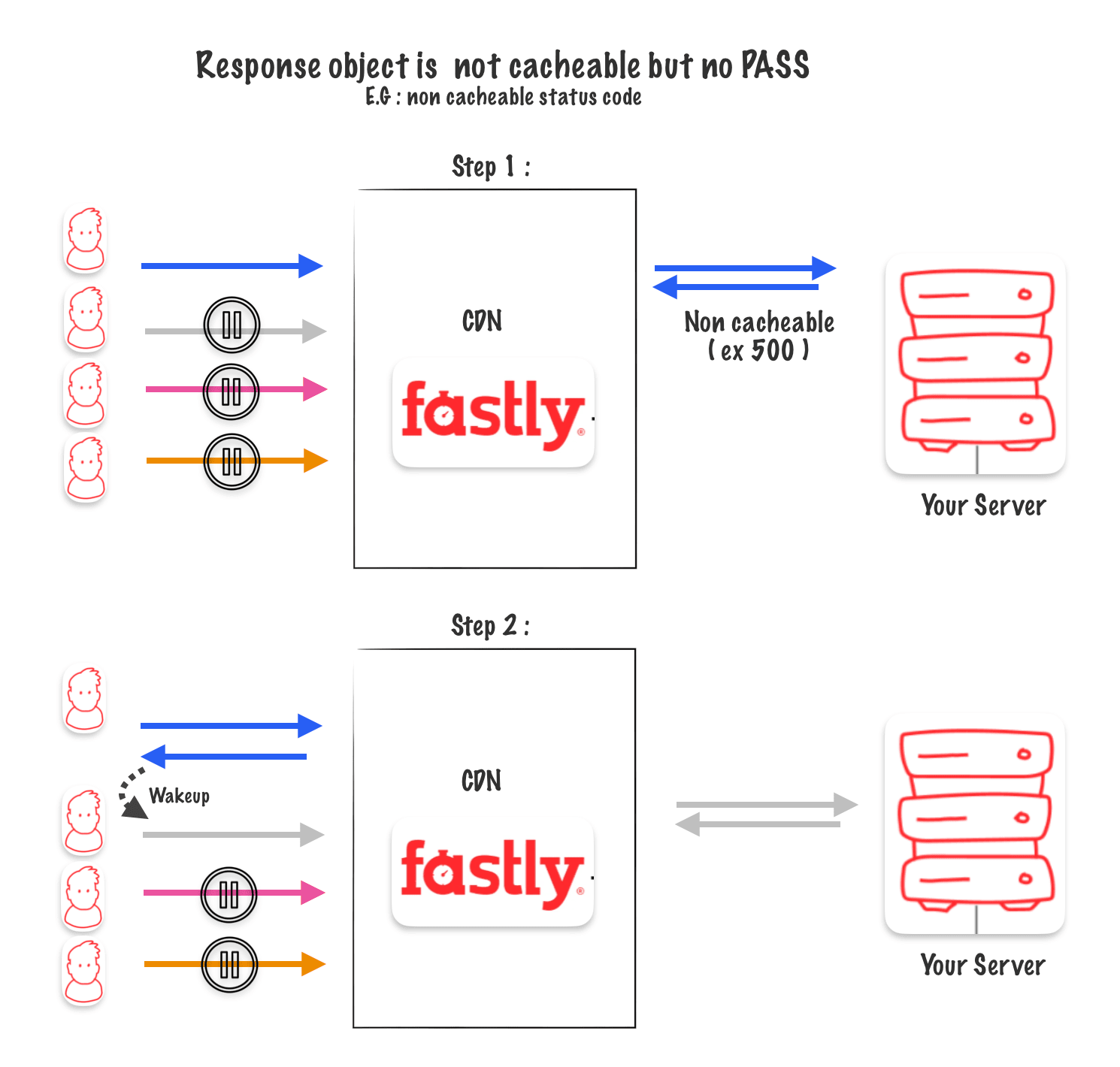

Response object is not cacheable but no PASS

Even if “PASS” is called on neither the request nor the response, the origin can still return an uncacheable response.

For example, your server crashes and sends back a 500 error. In this case, the champion request gets the 500 response, and then the next waiting request is sent to the origin. Hopefully, this second request receives a response from the origin that isn’t a 500 error.
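
The sequential wake-up can be sketched as a simple loop over the waiting requests. This is a deliberately simplified, single-threaded model; `serve_collapsed`, `fetch_from_origin` and `is_cacheable` are hypothetical names, and responses are reduced to bare status codes.

```python
def serve_collapsed(waiting, fetch_from_origin, is_cacheable):
    """Serve queued requests one by one; returns (responses, origin_calls)."""
    responses = {}
    origin_calls = 0
    cached = None
    for request_id in waiting:
        if cached is not None:
            # A cacheable response was stored: everyone else is served from cache.
            responses[request_id] = cached
            continue
        # No cached object yet: this request is woken up and goes to the origin.
        response = fetch_from_origin()
        origin_calls += 1
        responses[request_id] = response
        if is_cacheable(response):
            cached = response
    return responses, origin_calls


# Simulated origin: two 500 errors, then it recovers and answers 200.
statuses = iter([500, 500, 200])
responses, origin_calls = serve_collapsed(
    waiting=["r1", "r2", "r3", "r4", "r5"],
    fetch_from_origin=lambda: next(statuses),
    is_cacheable=lambda status: status == 200,
)

print(responses)     # {'r1': 500, 'r2': 500, 'r3': 200, 'r4': 200, 'r5': 200}
print(origin_calls)  # 3
```

In this run the first two requests each reach the origin and get an error; the third gets a cacheable response, and the remaining requests are served from the cache.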

Takeaways

- For cacheable objects, Request Collapsing collapses many backend requests into one.

- PASSing on the Request disables Request Collapsing.

- A non-cacheable response will sequentially awaken requests to the backend.

- A PASSed Response awakens all pending requests and sends them to the backend.

- A PASSed Response triggers a