25/02/2024

What is edge computing, and how do its performance and advantages compare with the more traditional approach of deploying web apps on conventional cloud platforms?

In a typical project, your web app is served from a single location, often the region you selected when you registered the project with your cloud provider, be it London, Paris, or the West Coast of the USA.

However, if your app has users worldwide, serving it from just one location is suboptimal. Even with fast networks like 5G, data cannot travel faster than the speed of light, so every extra kilometer between the user and the server adds latency. Serving your data from locations closer to your users will always be an advantage!

Distribute your app logic around the world

Developers have long been familiar with the concept of getting closer to users by utilizing Content Delivery Networks (CDNs) to efficiently serve static files like images, JavaScript, and CSS…

However, distributing app logic poses a different challenge, as it typically remains hosted where the backend of the application resides. Consequently, for users located farther from this backend, the user experience may suffer from increased latency.

This is where edge computing emerges as a potential game changer. With edge computing, developers can execute crucial application logic at the edge of the network. Thus, beyond merely serving static files, critical aspects of application logic can be accessed and executed much faster, significantly enhancing the overall user experience.

How does edge computing work?

To deploy your app logic at the edge, you utilize serverless functions, which are deployed and hosted at multiple points across the globe, ready to execute when needed.

If you’ve ever worked with serverless functions, you’re likely familiar with the cold start challenge. One of the key benefits of serverless functions is that you only pay for the time they’re running. However, this also means that when a function isn’t actively running, it’s “cold” and needs to be reactivated when triggered, leading to longer initial response times for users.
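The cold/warm distinction can be illustrated with a small conceptual sketch. This is not how any particular platform manages its instances; `createInstance` and `initialize` are hypothetical names, and the "expensive" startup work is only a stand-in.

```javascript
// Conceptual model of one serverless instance: state created on the first
// invocation is reused by later invocations for as long as the instance lives.
function createInstance() {
  let state = null; // nothing is loaded until the first request arrives

  function initialize() {
    // Stand-in for expensive startup work (loading code, opening connections...).
    return { initializedAt: Date.now() };
  }

  return function handler() {
    const coldStart = state === null;
    if (coldStart) {
      state = initialize(); // only the first request pays this cost
    }
    return { coldStart };
  };
}

const handler = createInstance();
console.log(handler().coldStart); // first call: cold
console.log(handler().coldStart); // second call: warm
```

When the platform discards an idle instance, the next request starts from a fresh one and pays the initialization cost again.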

Given that the primary goal of edge computing is to deliver rapid response times, dealing with cold starts becomes a critical concern. To address this issue, edge platforms avoid starting a full container or virtual machine for each function. Some run code in lightweight isolates of V8, the JavaScript engine that powers Chrome and can also execute JavaScript outside of a browser, which is what makes server-side scripting possible. Others, like Fastly, compile code to WebAssembly and run it in a sandboxed runtime to achieve low-latency execution. WebAssembly is a compact binary format that is closer to machine code than JavaScript source, so it requires less parsing and interpretation, leading to faster startup and execution.

Limitation

However, there’s a limitation: edge platforms cap the size of the compiled code you deploy, for example at 1 MB, to keep startup fast. This constraint means you can’t simply install every npm dependency without consideration. Often, you’ll need to bundle and minify your code and its dependencies with a tool like Webpack to stay under the limit.
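As a rough sketch of what that bundling step can look like, here is a minimal webpack configuration that fails the build when the output exceeds a 1 MB budget. It assumes webpack 5; the entry path and output file name are hypothetical, and the exact limit (and whether it applies to compressed or uncompressed code) depends on your platform.

```javascript
// webpack.config.js (sketch; file paths are placeholders)
const ONE_MB = 1024 * 1024; // size budget taken from the text, in bytes

const config = {
  mode: "production",     // enables minification and tree shaking
  target: "webworker",    // no Node.js built-ins, closer to an edge runtime
  entry: "./src/index.js",
  output: { filename: "index.js" },
  performance: {
    hints: "error",       // turn size warnings into build errors
    maxAssetSize: ONE_MB, // per emitted file
    maxEntrypointSize: ONE_MB,
  },
};

module.exports = config;
```

Note that webpack measures the emitted, uncompressed files here, so this check is a conservative guard rather than an exact mirror of a platform's own limit.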

Fortunately, modern JavaScript frameworks like Nuxt and Next.js can run parts of their logic at the edge. This trend is making more and more applications compatible with edge computing, paving the way for improved performance and responsiveness across diverse use cases.

How performant is edge computing?

In this section of the article, we’ll compare a Node.js application hosted in France with OVH against the same application deployed on the Fastly Compute platform.

The code for the application is relatively straightforward: it returns a random quote from a professional golfer.

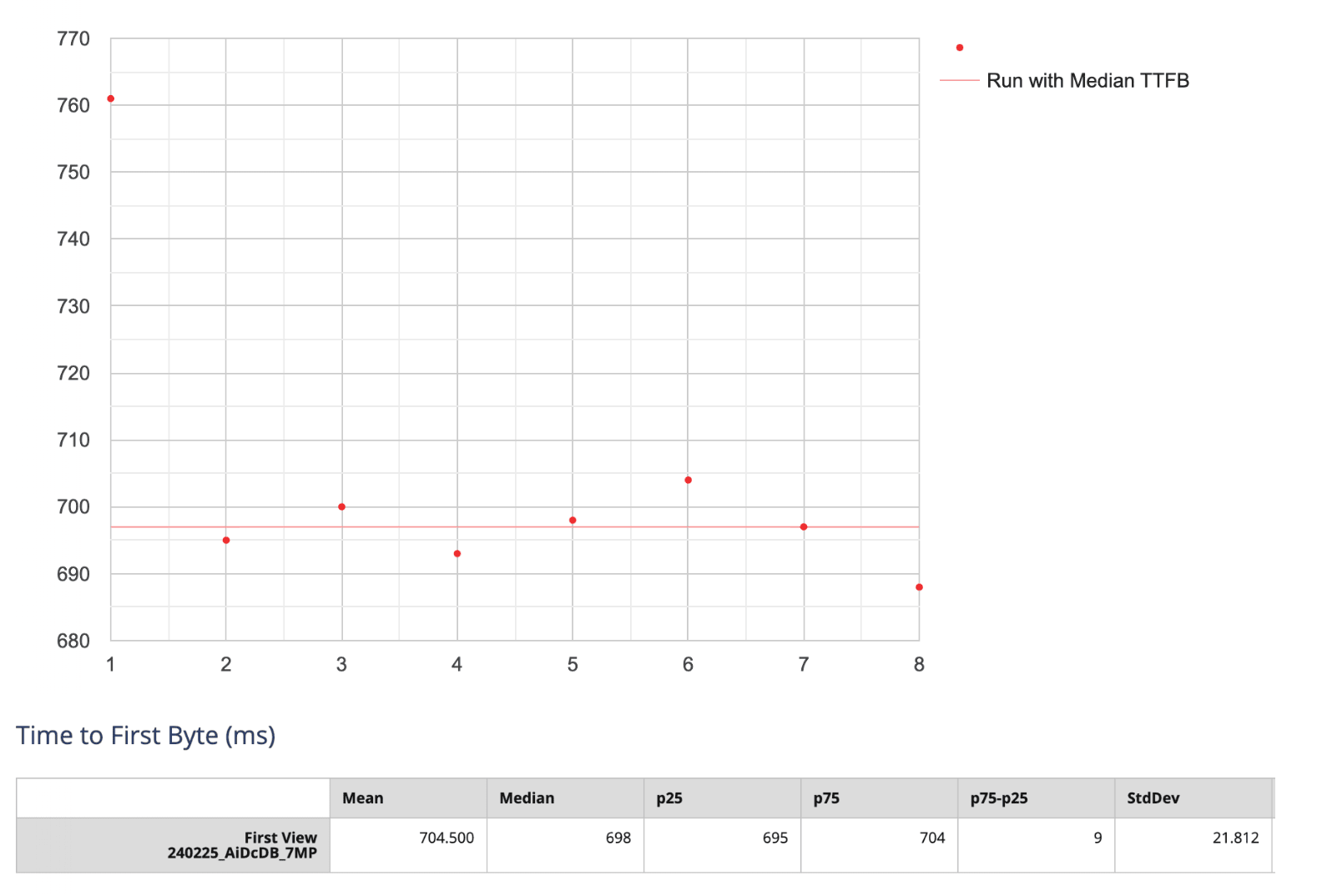

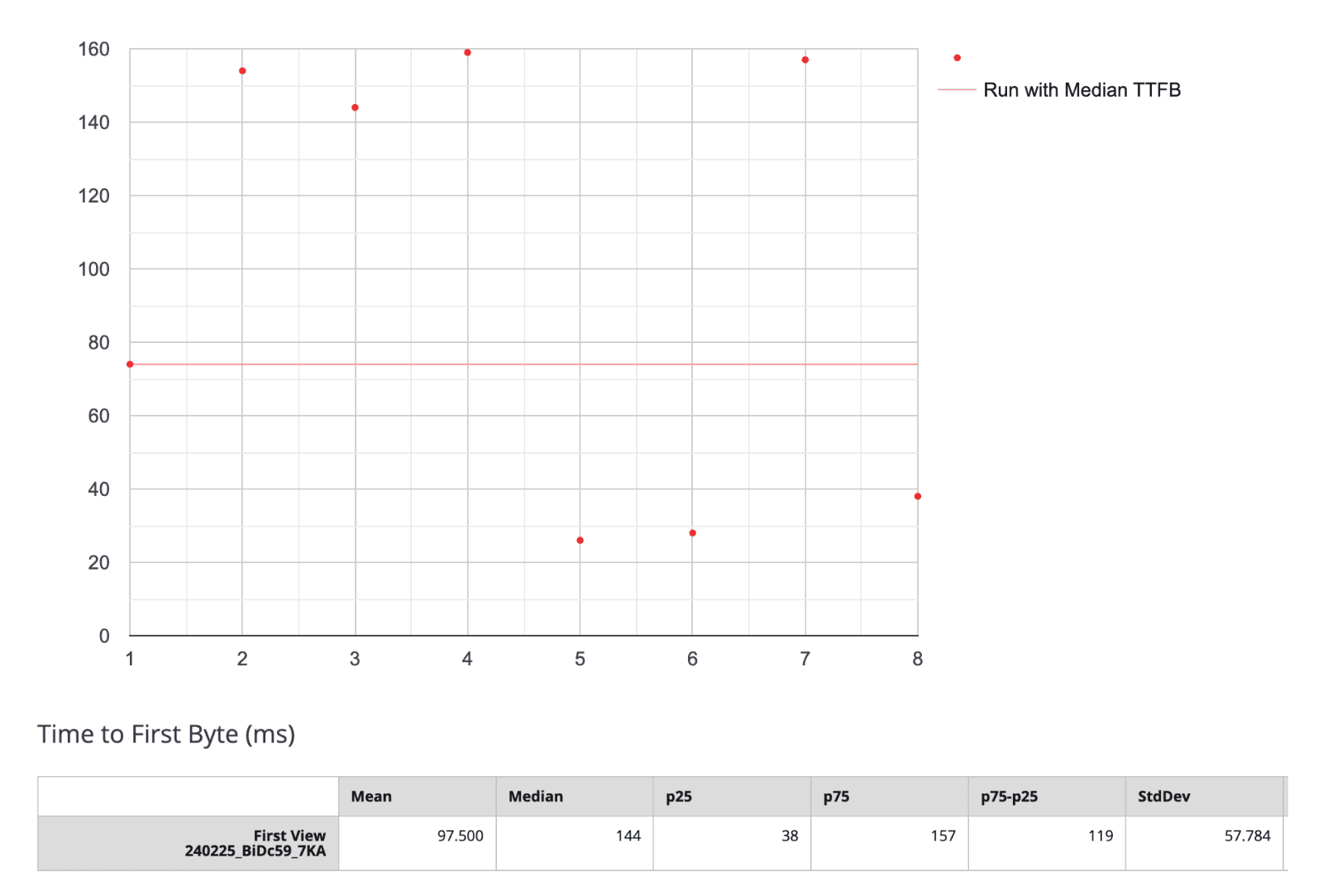
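For readers who cannot see the screenshots, here is a minimal sketch of what such an app could look like. It is not the author's actual code: the quote list is placeholder data, and `pickRandomQuote` and `handleRequest` are hypothetical names.

```javascript
// Placeholder data: the real app's quotes are not reproduced here.
const QUOTES = [
  { author: "Golfer A", text: "Placeholder quote one." },
  { author: "Golfer B", text: "Placeholder quote two." },
  { author: "Golfer C", text: "Placeholder quote three." },
];

// Pick one entry uniformly at random. The random source is injectable
// so the function can be tested deterministically.
function pickRandomQuote(quotes, random = Math.random) {
  const index = Math.floor(random() * quotes.length);
  return quotes[index];
}

// Platform-neutral handler: returns a plain description of the response.
function handleRequest() {
  const quote = pickRandomQuote(QUOTES);
  return {
    status: 200,
    headers: { "content-type": "application/json" },
    body: JSON.stringify(quote),
  };
}

console.log(handleRequest().body);
```

Keeping the logic in a plain function like this makes it easy to wire into either environment: a Node.js HTTP server on one side, or a fetch event listener on an edge platform on the other.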

Let’s analyze the speed of both applications. For testing, I utilized WebPageTest and conducted 8 consecutive tests from an EC2 instance located in Osaka, Japan, without any traffic shaping applied.

The metric chosen to evaluate the performance of the applications is the Time To First Byte (TTFB). TTFB measures the time between sending the request and receiving the first byte of the response. It therefore includes both the network round trips and the time the server spends executing the application logic.
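To make the metric concrete, here is a rough sketch of how TTFB can be approximated from a script. It is not what WebPageTest does internally. It assumes a runtime with a global `fetch` and `performance` (Node.js 18+ or a browser) and treats the moment `fetch` resolves, that is, when the response headers arrive, as the first byte. The names `measureTtfb` and `median` are illustrative.

```javascript
// Approximate TTFB: time from issuing the request until response headers arrive.
// fetchImpl and now are injectable so the function can be exercised offline.
async function measureTtfb(url, fetchImpl = fetch, now = () => performance.now()) {
  const start = now();
  const response = await fetchImpl(url);
  const elapsedMs = now() - start;
  // The body is not needed for this measurement, so release it if possible.
  if (response.body && typeof response.body.cancel === "function") {
    await response.body.cancel();
  }
  return elapsedMs;
}

// Median of a list of samples, less sensitive to outliers than the mean.
function median(values) {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0
    ? (sorted[mid - 1] + sorted[mid]) / 2
    : sorted[mid];
}

// Possible usage (performs real network requests, so it is left commented out):
// const samples = [];
// for (let i = 0; i < 8; i++) samples.push(await measureTtfb("https://example.com/"));
// console.log(median(samples));
```

Because connection reuse can make later requests faster than the first, a dedicated tool that controls connection state gives more comparable numbers than a loop like this.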

The Node.js app hosted in France with OVH (Gravelines, northern France)

The median TTFB in this test is 698 ms, which is unsurprising given the geographical distance between northern France and Osaka, approximately 9,200 kilometers. The latency introduced by this distance naturally contributes to the observed TTFB.
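A quick back-of-the-envelope calculation shows how much of that delay is imposed by physics alone. The sketch below assumes that light in optical fiber travels at roughly 200,000 km/s (about two thirds of its speed in a vacuum) and that the cable follows the 9,200 km straight-line distance, which real routes never do. `minRoundTripMs` is an illustrative name.

```javascript
// Assumption: signal speed in optical fiber, roughly 2/3 of c.
const FIBER_SPEED_KM_PER_S = 200000;

// Lower bound for one network round trip over a given distance, in milliseconds.
function minRoundTripMs(distanceKm, speedKmPerS = FIBER_SPEED_KM_PER_S) {
  const oneWaySeconds = distanceKm / speedKmPerS;
  return 2 * oneWaySeconds * 1000;
}

console.log(minRoundTripMs(9200).toFixed(0) + " ms"); // prints "92 ms"
```

A first HTTPS request usually needs several round trips before any response byte arrives (TCP handshake, TLS handshake, then the HTTP request itself), so a TTFB of several hundred milliseconds over this distance is consistent with that lower bound once routing detours and server time are added.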

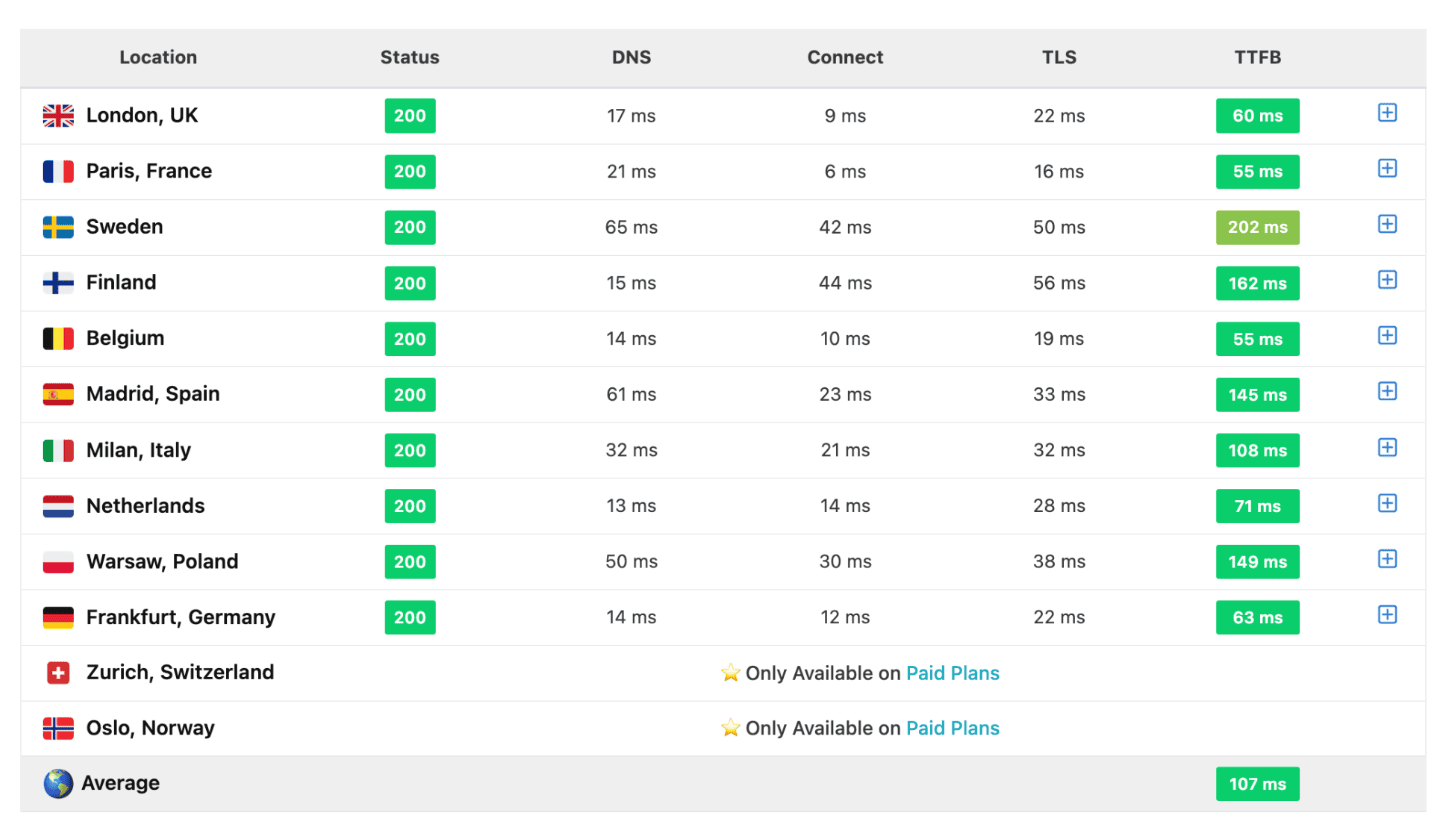

My code, hosted in France, didn’t perform well in Japan. Let’s explore its response time across various locations worldwide.

To conduct the tests, I used speedvitals.com, which measures TTFB from different regions worldwide. We should expect performance to deteriorate as we move farther away from Europe.

Europe

Average Time To First Byte: 107 ms

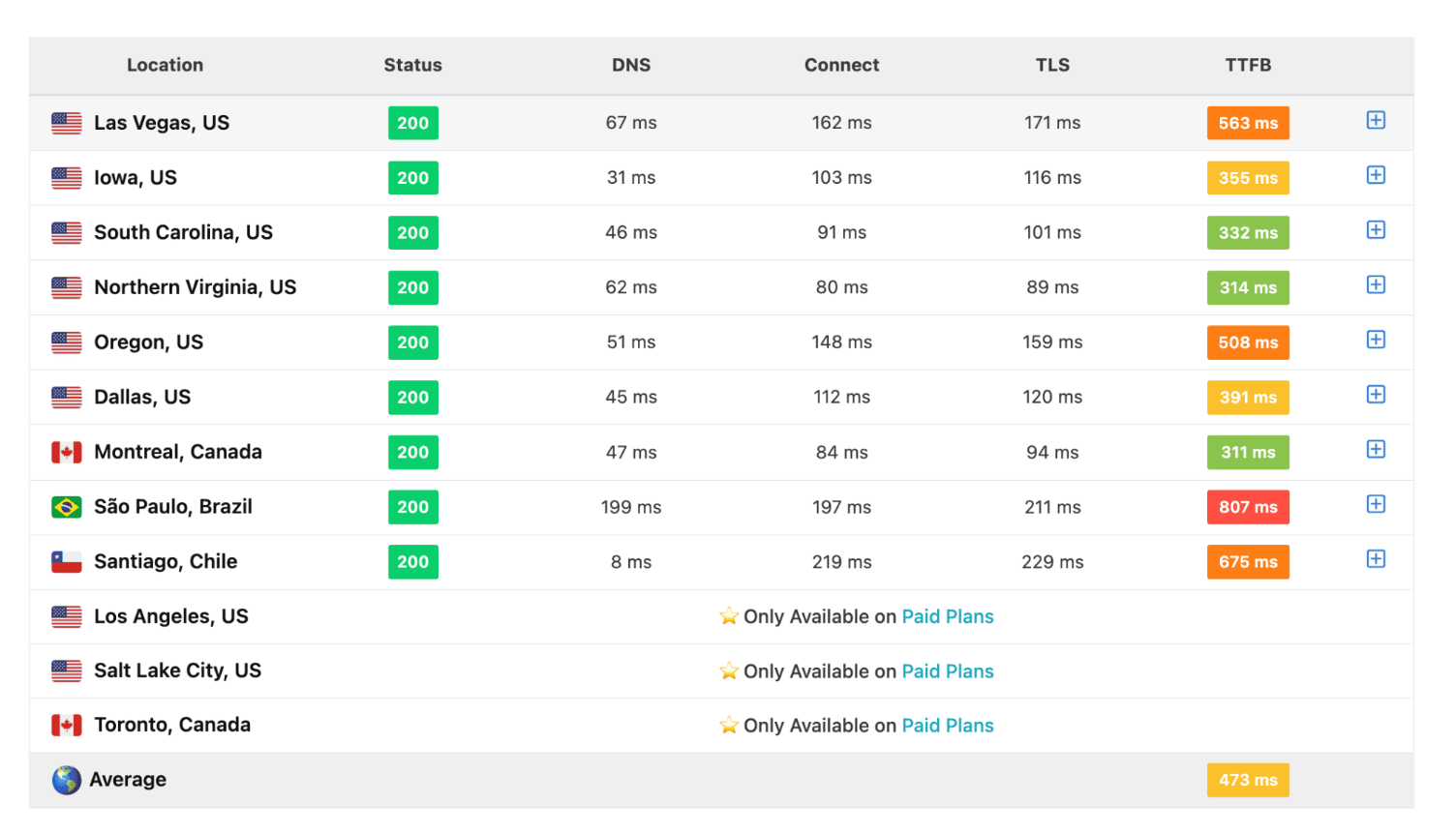

Americas

Average Time To First Byte: 473 ms

Asia

Average Time To First Byte: 859 ms

In Sydney, for example, the response took over a second to arrive. From a user’s perspective that is very slow, and delays of this size are likely to hurt a site’s revenue.

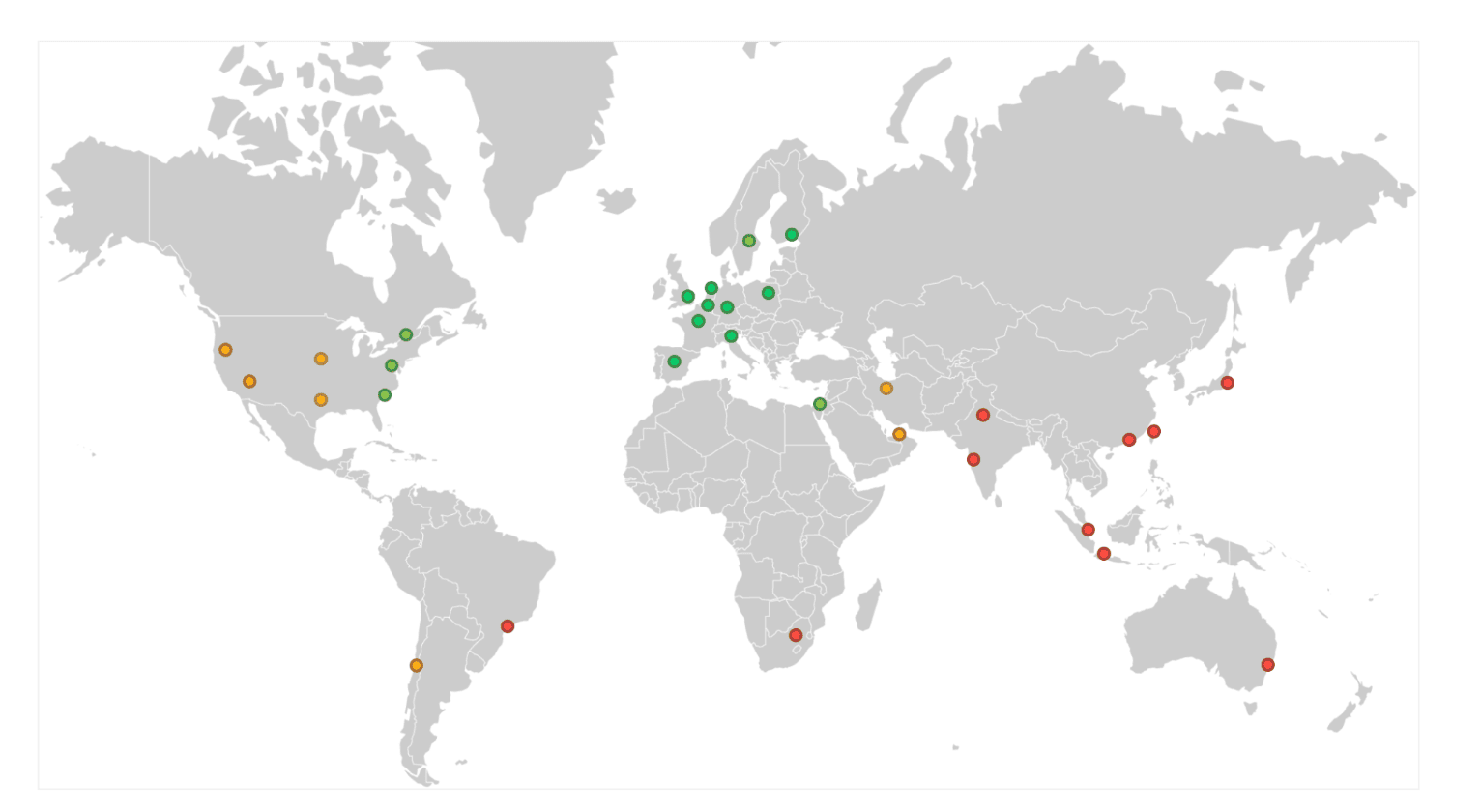

Performance map

Unsurprisingly, as we moved farther from Europe, the performance continued to degrade.

The Node.js app hosted at the edge with Fastly Compute

Now, let’s examine how the same code performs at the edge. I deployed it using Fastly Compute to distribute it worldwide. Let’s review the obtained metrics. The application deployed at the edge has a median TTFB of 144 ms, which is roughly 4.8 times quicker than the one hosted in France when accessed from Osaka, Japan.

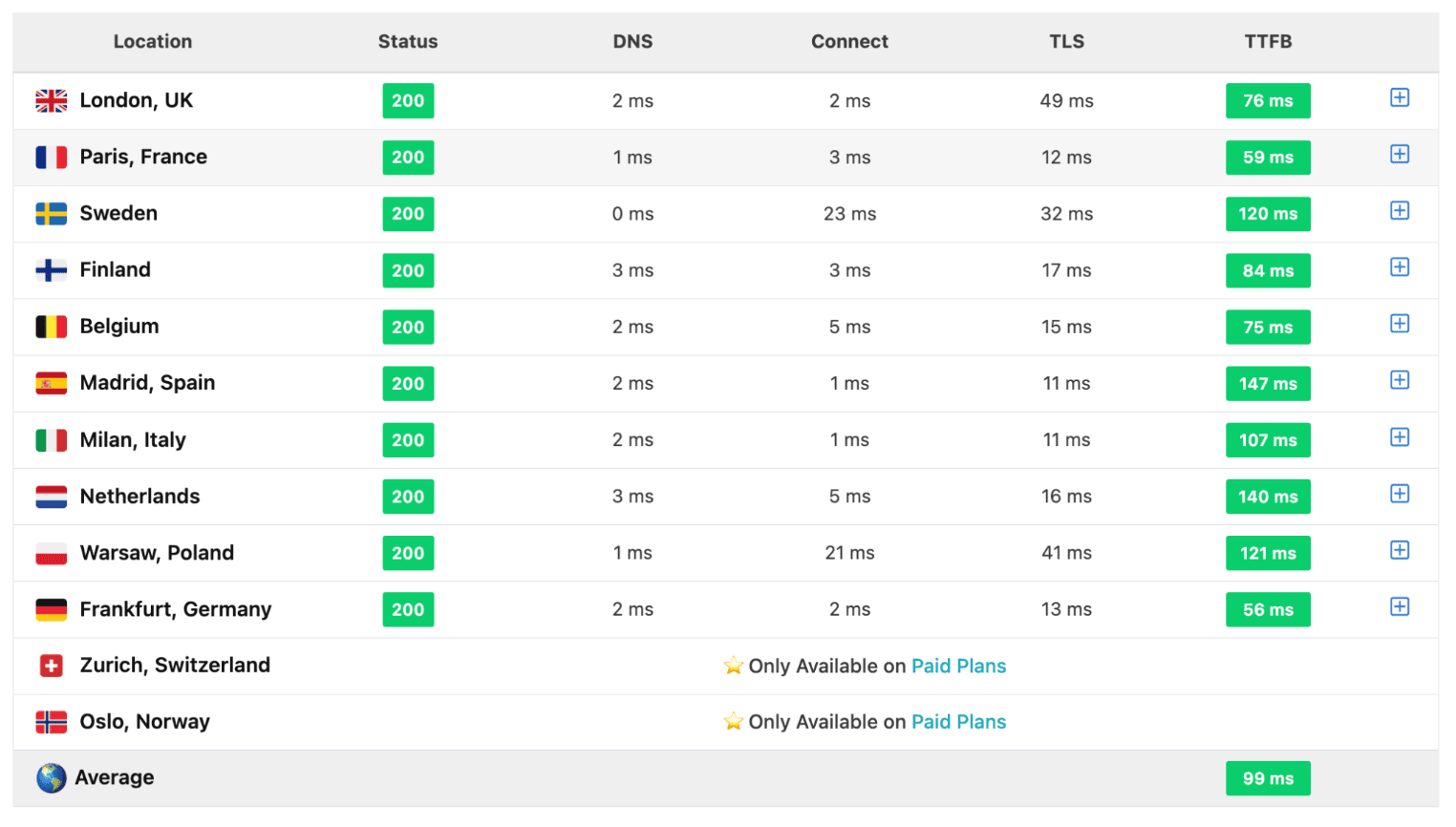

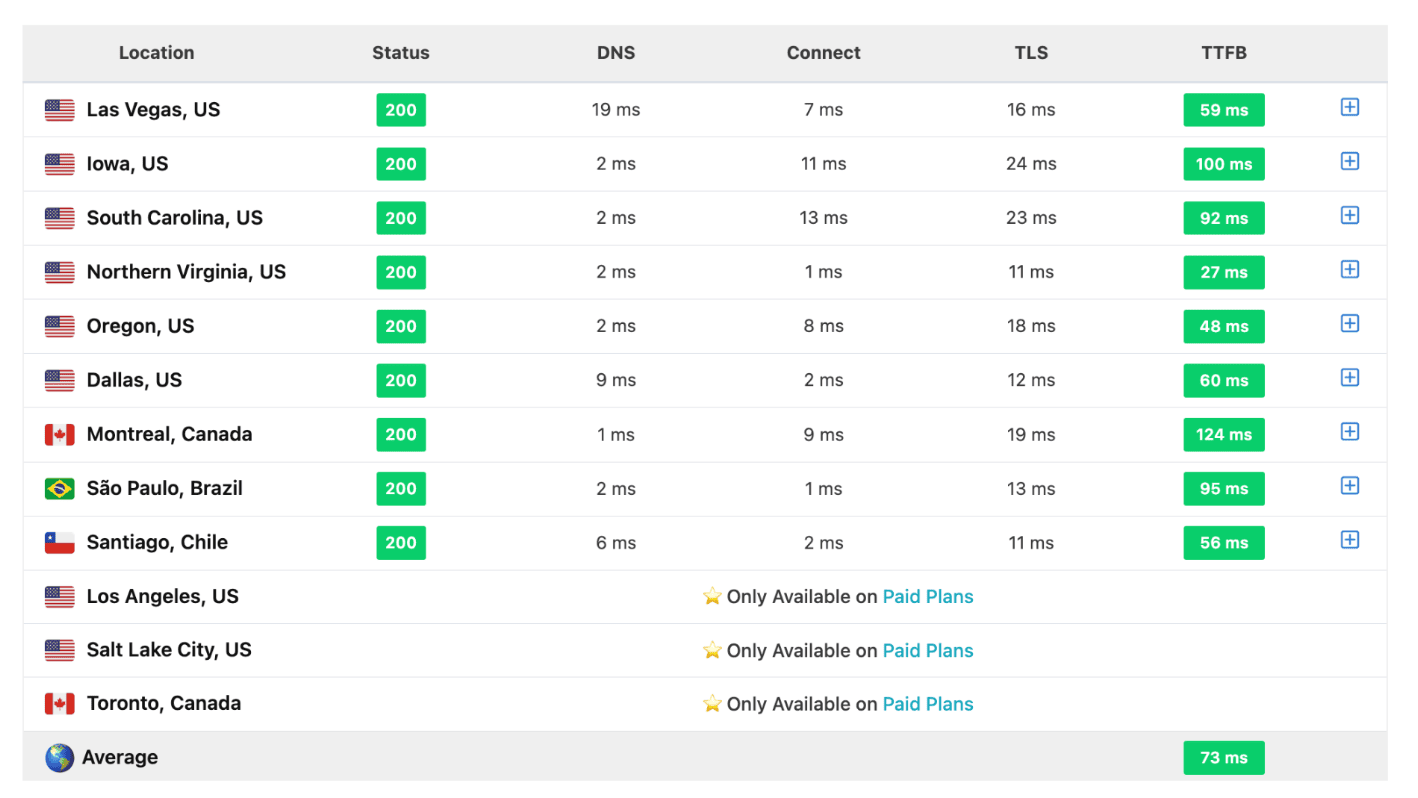

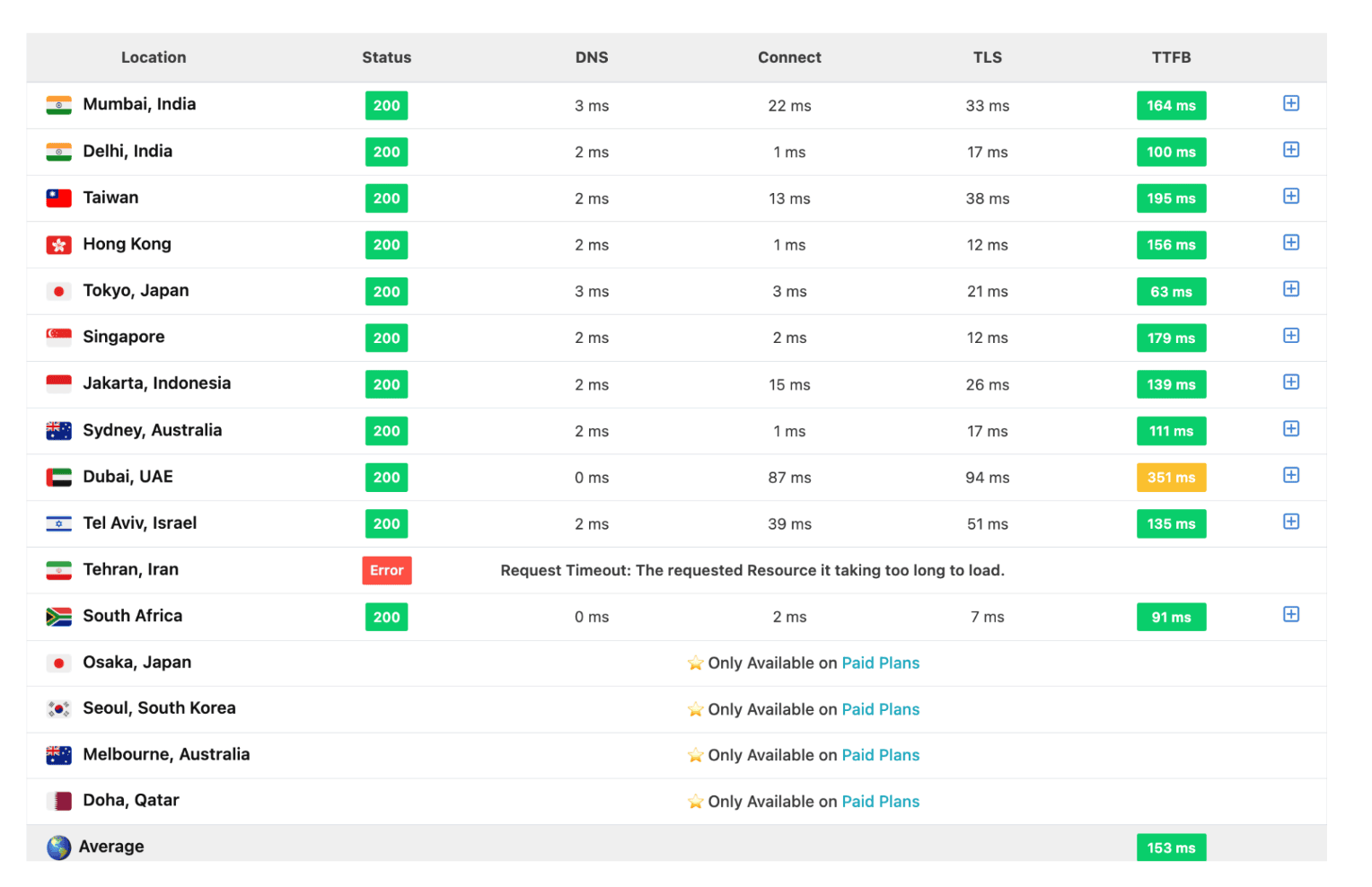

Now, let’s examine the response times across the globe for the application deployed on Fastly Compute.

Europe

In Europe, TTFB with Fastly Compute is about 7% lower than with OVH (99 ms vs 107 ms).

Americas

In the Americas, TTFB with Fastly Compute is about 85% lower (73 ms vs 473 ms).

Asia

In Asia, TTFB with Fastly Compute is about 82% lower (153 ms vs 859 ms).
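The comparisons above rest on two simple formulas, sketched below with the rounded averages quoted in this article. Because the inputs are rounded to the millisecond, the results may differ in the last decimal from figures computed on the raw measurements. `percentReduction` and `speedup` are illustrative names.

```javascript
// How much lower the new TTFB is, as a percentage of the baseline.
function percentReduction(baselineMs, newMs) {
  return ((baselineMs - newMs) / baselineMs) * 100;
}

// How many times quicker the new setup responds.
function speedup(baselineMs, newMs) {
  return baselineMs / newMs;
}

// Average TTFB per region, in milliseconds, as reported in the article.
const results = [
  { region: "Europe", ovh: 107, fastly: 99 },
  { region: "Americas", ovh: 473, fastly: 73 },
  { region: "Asia", ovh: 859, fastly: 153 },
];

for (const { region, ovh, fastly } of results) {
  const reduction = percentReduction(ovh, fastly).toFixed(1);
  const factor = speedup(ovh, fastly).toFixed(1);
  console.log(`${region}: ${reduction}% lower TTFB, ${factor}x quicker`);
}

// Median TTFB measured from Osaka: 698 ms (OVH) vs 144 ms (Fastly Compute).
console.log(`Osaka: ${speedup(698, 144).toFixed(1)}x quicker`);
```

Expressing the gain both ways helps avoid ambiguity: an 85% reduction in TTFB and a response that is about 6.5 times quicker describe the same improvement.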

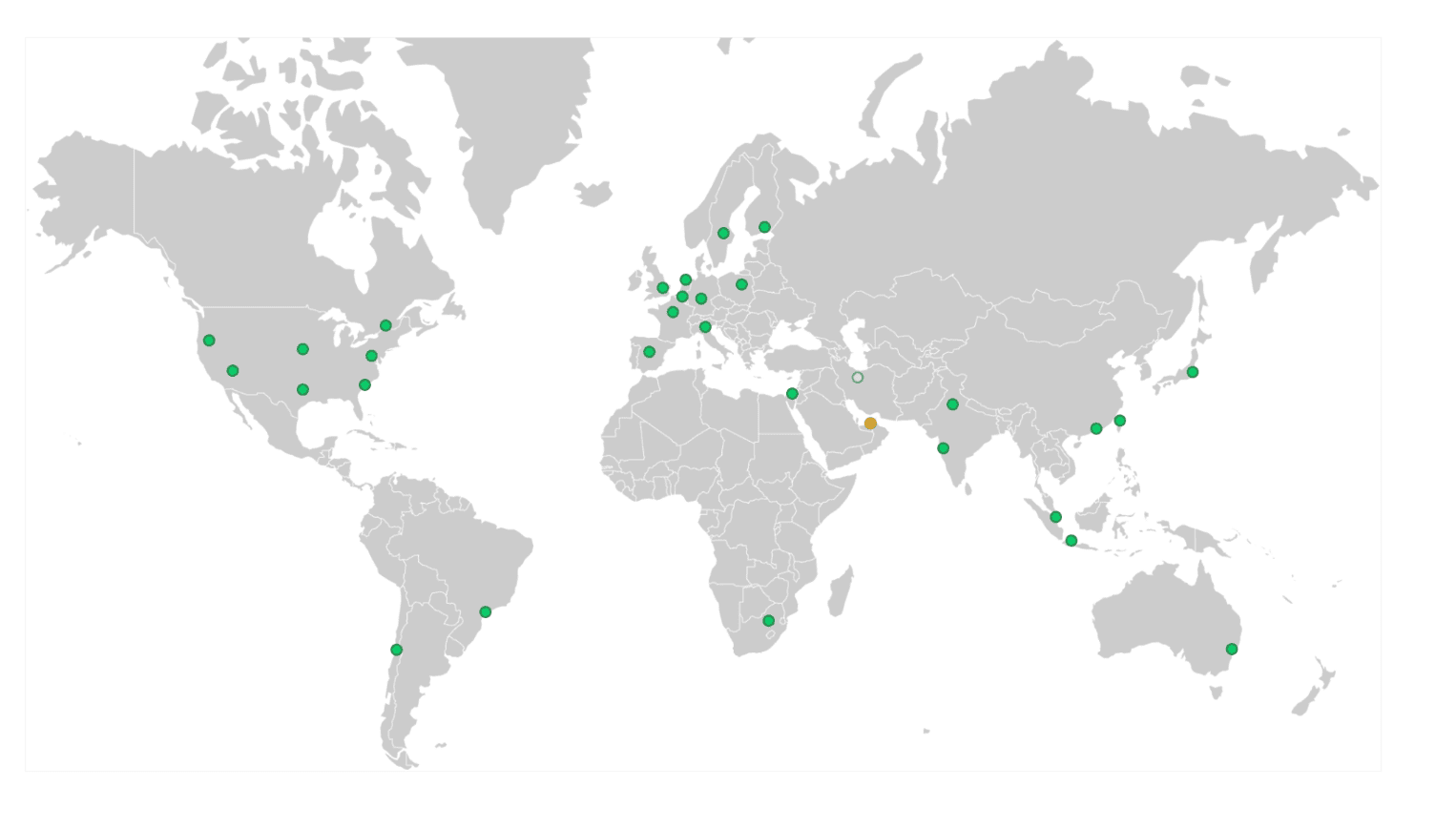

Performance map

As evident from the map, the performance of the application deployed on Fastly Compute is exceptional across the globe.

Conclusion

To sum up, it’s clear that where you host your web app matters a lot, especially if you have users all around the world. Serving your app from just one place can make it slow for people who are far away. But with edge computing, things can change for the better.

Edge computing lets us run important parts of our app closer to where users are. This means faster loading times and happier users, no matter where they are. In our comparison between hosting in France with OVH and using Fastly Compute, edge computing makes a big difference: the app responds much more quickly, especially for users in far-off places like Asia.

So, in simple terms, edge computing is like a superpower for web developers. It helps us make our apps faster for everyone, no matter where they are in the world. And as more developers start using it, we can expect even more improvements in how our apps perform.