17/12/2024

This article gives step-by-step instructions for deploying, exposing, and managing Kubernetes workloads, handling connectivity issues, and keeping deployments self-healing with K3s. These notes are the result of my personal experiments with K3s.

Install K3s

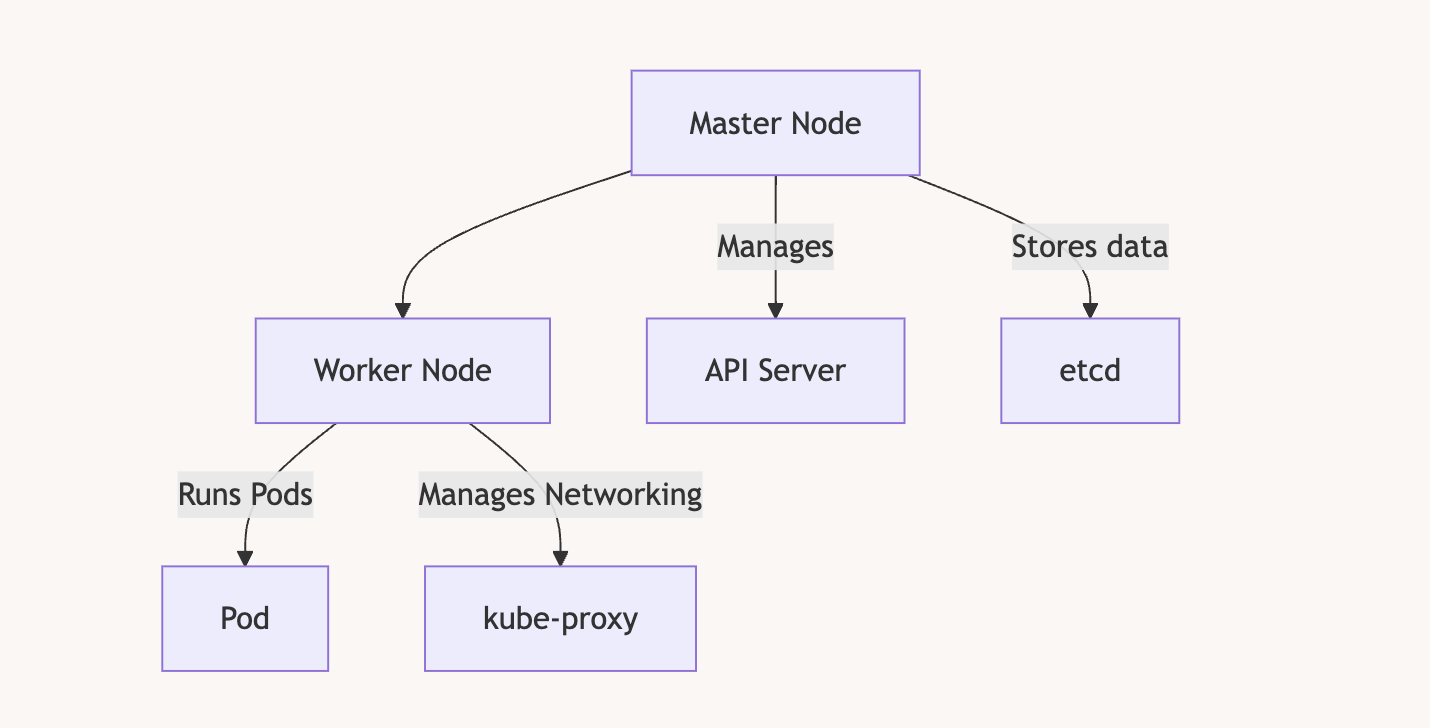

To follow this setup, you need at least two VMs. In my case, I used Debian VMs on Proxmox. The first VM is the master node and the second is the worker node. The master node orchestrates the cluster and distributes the load across one or more worker nodes. As your application grows and requires more machines, you can simply add more worker nodes to the cluster.

Install the master node

curl -sfL https://get.k3s.io | sh -

Get the token

cat /var/lib/rancher/k3s/server/token

It will return something like:

root@k8masterNode:~# cat /var/lib/rancher/k3s/server/token

K10ee9c18dac933cab0bdbd1c64ebece61a4fa7ad60fce2515a5fcfe19032edd707::server:64ee8a3fec9c3d1db6c2ab0fc40f8996

Get the IP of the machine

root@k8masterNode:~# ip addr show | grep 192

inet 192.168.1.67/24 brd 192.168.1.255 scope global dynamic ens18

Join the cluster from the worker node

NB: Make sure the worker node has a unique hostname, otherwise it will not be able to join the cluster.

If the VM has been cloned, run: sudo hostnamectl set-hostname <new-hostname>

curl -sfL https://get.k3s.io | K3S_URL=https://<server-ip>:6443 K3S_TOKEN=<token> sh -

curl -sfL https://get.k3s.io | K3S_URL=https://192.168.1.67:6443 K3S_TOKEN="K10ee9c18dac933cab0bdbd1c64ebece61a4fa7ad60fce2515a5fcfe19032edd707::server:64ee8a3fec9c3d1db6c2ab0fc40f8996" sh -
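A truncated token is an easy copy-paste mistake. As a minimal sketch, assuming the full token shape shown above (K10<hash>::<user>:<password>), a quick check like this could be run before joining. The function name looks_like_k3s_token is hypothetical and not part of K3s, and K3s may accept other token forms, so treat a failure as a hint rather than proof.

```shell
# Hypothetical helper (not part of K3s): returns 0 when the argument has
# the shape K10<hash>::<user>:<password>, as in the sample token above.
looks_like_k3s_token() {
  case "$1" in
    K10?*::?*:?*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example usage (K3S_TOKEN is assumed to hold your own token):
# looks_like_k3s_token "$K3S_TOKEN" || echo "token looks truncated" >&2
```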

Creating and Exposing Deployments

Create a Deployment

kubectl create deployment nginx --image=nginx

Expose the Deployment

kubectl expose deployment nginx --type=NodePort --port=80

Verify the service:

kubectl get svc

Expected output:

NAME TYPE CLUSTER-IP PORT(S) AGE

nginx NodePort 10.43.212.89 80:30548/TCP 6s

Verify the pods:

kubectl get pods -o wide

Example:

NAME READY STATUS IP NODE

nginx-bf5d5cf98-knd5t 1/1 Running 10.42.1.3 workernode

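Access the Nginx App:

From a cluster node, through the service's cluster IP:

curl http://<CLUSTER-IP>

From any machine that can reach a node, through the NodePort:

curl http://<MASTER-IP>:<NODEPORT>

Example with the values from the output above (run on a cluster node):

curl http://10.43.212.89

Or with the port stated explicitly (the service was exposed on port 80):

curl http://10.43.212.89:80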
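Rather than reading the NodePort off the table by eye, it can be extracted in a script. The sketch below parses a PORT(S) value such as 80:30548/TCP with plain shell parameter expansion. The function name node_port_from_ports is made up for this example, and it assumes a single port mapping. On a live cluster, kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}' should give the same number directly.

```shell
# Hypothetical helper: extract the NodePort from a PORT(S) value
# like "80:30548/TCP" (assumes exactly one port mapping).
node_port_from_ports() {
  ports=${1#*:}                  # drop the service port and colon -> "30548/TCP"
  printf '%s\n' "${ports%%/*}"   # drop the protocol suffix -> "30548"
}

# Example with the value from the kubectl get svc output:
node_port_from_ports "80:30548/TCP"   # prints 30548
```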

Managing a Deployment

Remove a Deployment

Delete the service:

kubectl delete service nginx

Delete the deployment:

kubectl delete deployment nginx

Confirm deletion:

kubectl get deployments

kubectl get svc

Building and Pushing Custom Docker Images

Build the Docker Image

Ensure your app is ready for deployment:

docker build -t node-express-app .

Login to DockerHub

docker login

Tag and Push the Image

docker tag node-express-app antoinebr/node-express-app:v1

docker push antoinebr/node-express-app:v1
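Since the same image name appears in the build, tag, and push steps, it can help to derive all three from a few variables. The following is only a sketch: the function name release_cmds and its dry-run approach (printing the commands instead of running them) are assumptions for illustration, not something the Docker CLI provides.

```shell
# Hypothetical helper: print the build/tag/push commands for an image.
# Arguments: DockerHub user, app name, tag.
release_cmds() {
  printf 'docker build -t %s .\n' "$2"
  printf 'docker tag %s %s/%s:%s\n' "$2" "$1" "$2" "$3"
  printf 'docker push %s/%s:%s\n' "$1" "$2" "$3"
}

# Print the commands for the image used in this article:
release_cmds antoinebr node-express-app v1
```

Bumping the version then only means changing the last argument. The printed commands can be reviewed first and run by hand.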

Deploying Custom Applications

Create a Deployment YAML

Save as node-express-deployment.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: node-express-app
spec:
  replicas: 6
  selector:
    matchLabels:
      app: node-express-app
  template:
    metadata:
      labels:
        app: node-express-app
    spec:
      containers:
        - name: node-express-app
          image: antoinebr/node-express-app:v1
          ports:
            - containerPort: 3000

Apply the Deployment

kubectl apply -f node-express-deployment.yaml
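After applying the manifest, the pods take a moment to start. kubectl rollout status deployment/node-express-app blocks until the rollout finishes. For scripts that instead read the READY column of kubectl get deployment (for example 6/6), a small check could look like the sketch below. The name all_replicas_ready is hypothetical.

```shell
# Hypothetical helper: succeed when a READY value such as "6/6"
# shows as many ready replicas as desired ones (and at least one).
all_replicas_ready() {
  ready=${1%%/*}     # part before the slash
  desired=${1##*/}   # part after the slash
  [ "$desired" -gt 0 ] && [ "$ready" -eq "$desired" ]
}

# Example values:
all_replicas_ready "6/6" && echo "rollout complete"
all_replicas_ready "4/6" || echo "still rolling out"
```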

Expose the Deployment

kubectl expose deployment node-express-app --port=80 --target-port=3000 --type=NodePort

Get App IP

kubectl get svc node-express-app

You will get something like:

root@k8masterNode:~# kubectl get svc node-express-app

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

node-express-app NodePort 10.43.239.152 <none> 80:31738/TCP 3m33s

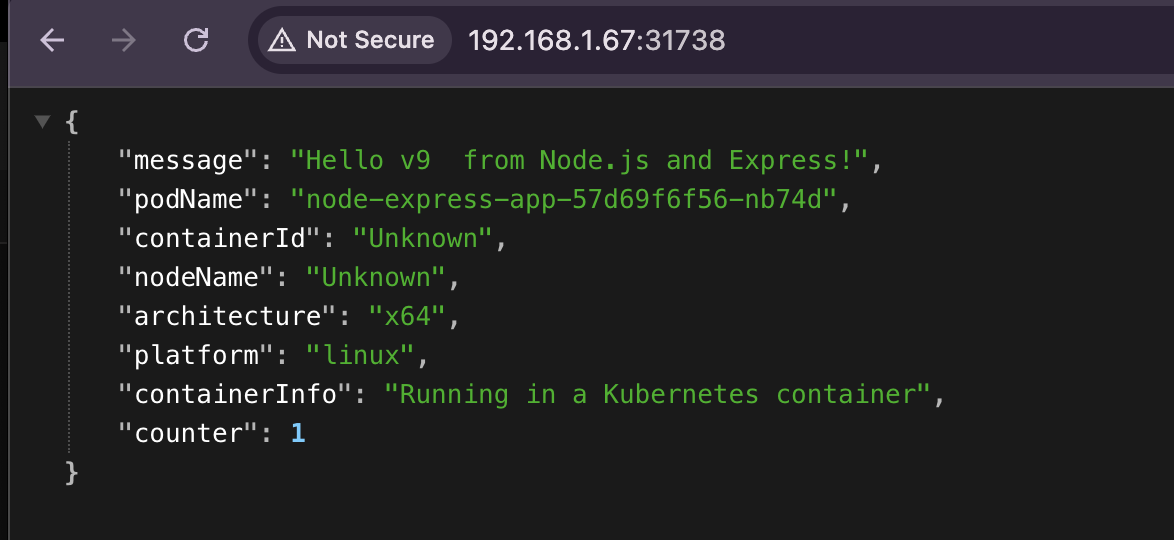

Access the App:

curl http://<MASTER-IP>:<NODEPORT>

In my case:

curl http://192.168.1.67:31738

NB: To remove that deployment, run kubectl delete deployment node-express-app

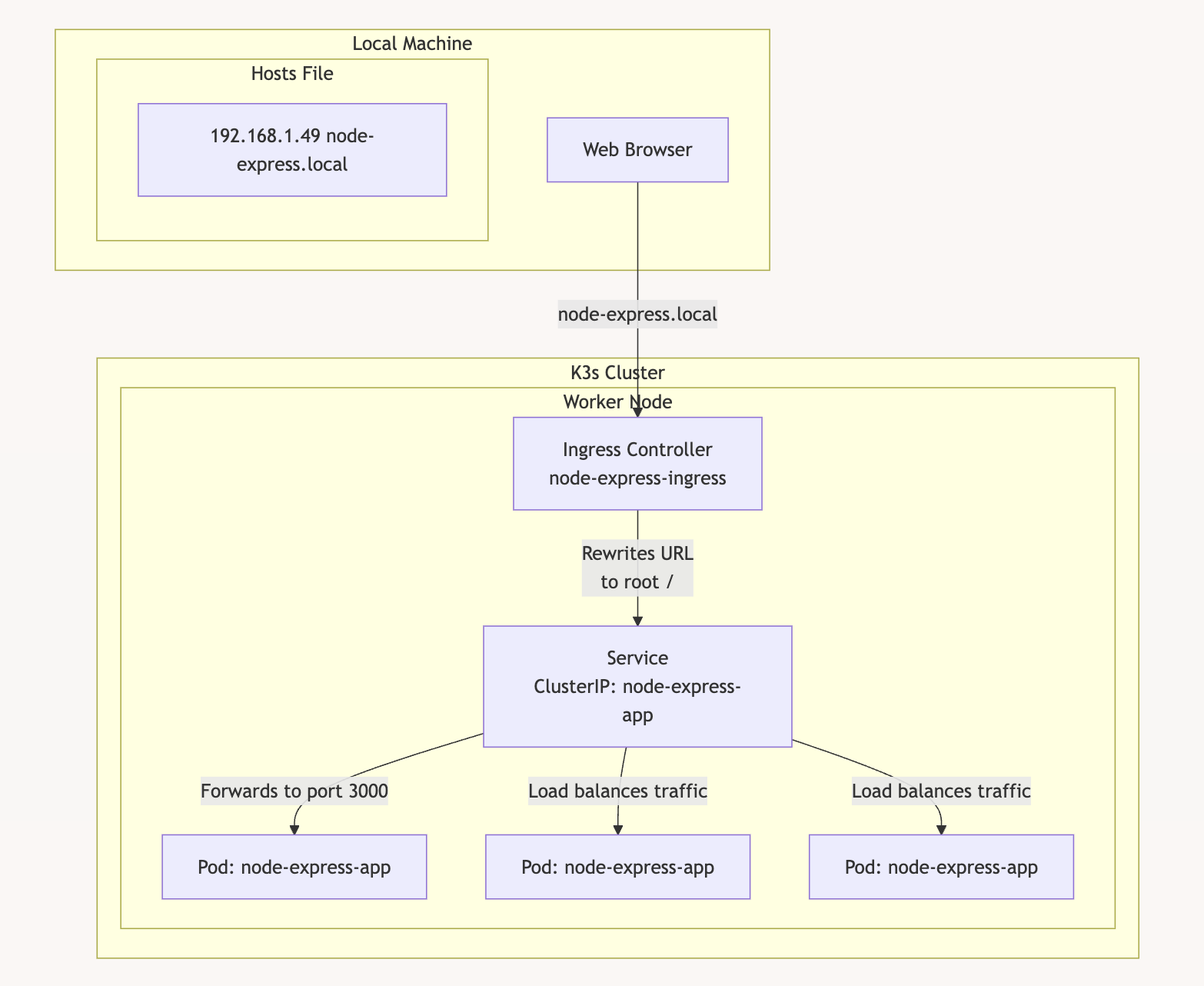

Using an Ingress Controller

Deployment and Service Configurations

Create deployment.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: node-express-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: node-express-app
  template:
    metadata:
      labels:
        app: node-express-app
    spec:
      containers:
        - name: node-express-app
          image: antoinebr/node-express-app:v1
          ports:
            - containerPort: 3000

Create service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: node-express-app
spec:
  selector:
    app: node-express-app
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
  type: ClusterIP

Create ingress.yaml:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: node-express-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: node-express.local
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: node-express-app
                port:
                  number: 80

Apply Configurations

kubectl apply -f deployment.yaml

kubectl apply -f service.yaml

kubectl apply -f ingress.yaml

Set Hostname in Local System

Add to /etc/hosts:

192.168.1.49 node-express.local
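Editing /etc/hosts by hand makes it easy to add the same line twice. As a sketch, the helper below appends an entry only when the hostname is not already listed. add_host_entry is a hypothetical function, and it takes the file path as an argument so it can be tried on a scratch file before touching /etc/hosts (which needs root). To test the Ingress without changing /etc/hosts at all, curl -H "Host: node-express.local" http://192.168.1.49 sends the same Host header.

```shell
# Hypothetical helper: append "<ip> <hostname>" to a hosts file unless
# a non-comment line already lists that hostname.
add_host_entry() {
  file=$1 ip=$2 name=$3
  if awk -v n="$name" '
       $1 ~ /^#/ { next }                                   # skip comment lines
       { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } # exact hostname match
       END { exit !found }
     ' "$file" 2>/dev/null; then
    echo "$name already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
    echo "added $name to $file"
  fi
}

# Try it on a scratch file first; the second call should change nothing.
scratch=$(mktemp)
add_host_entry "$scratch" 192.168.1.49 node-express.local
add_host_entry "$scratch" 192.168.1.49 node-express.local
cat "$scratch"
rm -f "$scratch"
```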

Here’s a diagram of the setup

Handling Worker Node Connectivity Issues

Verify Node Status

kubectl get nodes

If workernode shows NotReady, check connectivity:

curl -k https://<MASTER-IP>:6443

Expected output (a 401 is fine here: it shows the API server is reachable from the worker):

{
  "status": "Failure",
  "message": "Unauthorized",
  "reason": "Unauthorized",
  "code": 401
}
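The same check can be scripted by looking only at the HTTP status code. With curl -k -s -o /dev/null -w '%{http_code}', curl prints just the code, and prints 000 when it got no HTTP response at all. The sketch below maps that code to a verdict. describe_api_code is a hypothetical helper, and the message wording is an assumption for illustration.

```shell
# Hypothetical helper: interpret the status code obtained with
#   curl -k -s -o /dev/null -w '%{http_code}' https://<MASTER-IP>:6443
describe_api_code() {
  case "$1" in
    000) echo "unreachable: no HTTP response (network, firewall, or k3s down)" ;;
    401|403) echo "reachable: API server answered, authentication required" ;;
    2??) echo "reachable: API server answered" ;;
    *) echo "unexpected: HTTP $1" ;;
  esac
}

# Examples:
describe_api_code 401
describe_api_code 000
```

If the verdict is unreachable, the problem is on the network path or the k3s service rather than the token.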

Troubleshoot Worker Token

Verify the token (on the master node):

cat /var/lib/rancher/k3s/server/token

Re-set the token (on the worker node):

echo "THE_TOKEN" | tee /var/lib/rancher/k3s/agent/token

Restart K3s Agent

sudo systemctl daemon-reload

sudo systemctl restart k3s-agent.service

Check Nodes

kubectl get nodes
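To spot problem nodes in a script, the output of kubectl get nodes --no-headers can be filtered on its STATUS column. A minimal sketch, where not_ready_nodes is a hypothetical name and the sample lines are illustrative placeholders, not captured from a real cluster:

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on
# stdin and print the names of nodes whose STATUS is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Illustrative input (placeholder values, not real cluster output):
printf '%s\n' \
  'k8masternode Ready control-plane,master 10d <version>' \
  'workernode NotReady <none> 10d <version>' |
  not_ready_nodes
# prints: workernode
```

On a live cluster this would be used as kubectl get nodes --no-headers | not_ready_nodes. An empty result means every node reports Ready.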

Restarting K3s Service

Common Errors

- Port 6444 already in use:

sudo lsof -i :6444

kill -9 <PID>

- Invalid server token:

Ensure the correct token is in:

/var/lib/rancher/k3s/server/token

Restart K3s

sudo systemctl restart k3s

Kubernetes Crash Handling

Kubernetes automatically restarts failed pods.

Check Pod Status:

kubectl get pods

Example output:

NAME READY STATUS RESTARTS AGE

node-express-app-8477686cf7-6fmvz 1/1 Running 0 30m

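If a container crashes, the kubelet restarts it in place and the RESTARTS count goes up. If a pod is deleted or its node is lost, the Deployment creates a replacement to maintain the desired replica count.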
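To see which pods have actually been restarted, the RESTARTS column can be filtered. The sketch below reads kubectl get pods --no-headers output from stdin. pods_with_restarts is a hypothetical helper, and the second sample line is invented for illustration.

```shell
# Hypothetical helper: print "<pod> <restarts>" for every pod whose
# RESTARTS count (4th column) is greater than zero.
pods_with_restarts() {
  awk '$4 + 0 > 0 { print $1, $4 }'
}

# The first line comes from the output above; the second is an invented example.
printf '%s\n' \
  'node-express-app-8477686cf7-6fmvz 1/1 Running 0 30m' \
  'node-express-app-8477686cf7-abcde 1/1 Running 2 30m' |
  pods_with_restarts
# prints: node-express-app-8477686cf7-abcde 2
```

On a live cluster: kubectl get pods --no-headers | pods_with_restarts. Deleting a pod with kubectl delete pod <pod-name> and running kubectl get pods again is a simple way to watch the Deployment create a replacement.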