11/03/2024

Imagine if your web applications could run faster and smoother without much effort. That's where caching comes into play. Among the various caching solutions available, Varnish-style caching stands out as a powerful tool for optimizing web application performance. In this guide, we'll take a simple approach to understanding how caching works behind the scenes, and how Varnish can turbocharge your web applications. Whether you're new to the concept of caching or looking to enhance your knowledge, this guide will equip you with the essentials.

What’s caching?

In a web application, caching works like a high-speed memory that stores frequently accessed data or content. When a user requests information from the application, instead of fetching it from the original source every time, the application checks if it’s already stored in the cache. If it is, the data is retrieved quickly from the cache, significantly reducing the time needed to load the page or fulfill the request. This enhances the application’s speed and responsiveness, ultimately providing a smoother user experience.
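Here’s a minimal sketch of that idea in JavaScript (the language used for the axios example later in this guide): an in-memory Map placed in front of a slow data source. The fetchFromOrigin function and the "/home" key are hypothetical placeholders. A real cache like Varnish also handles expiry and memory limits, which this sketch leaves out.

```javascript
// Minimal in-memory cache-aside sketch: check the cache first and
// fall back to the (slow) original source only when the key is missing.
const cache = new Map();
let originCalls = 0; // counts how often the original source is reached

// Hypothetical stand-in for a slow database or API call
function fetchFromOrigin(key) {
  originCalls += 1;
  return `content for ${key}`;
}

function getWithCache(key) {
  if (cache.has(key)) {
    return cache.get(key); // served from memory, no origin call
  }
  const value = fetchFromOrigin(key);
  cache.set(key, value); // keep a copy for the next request
  return value;
}

getWithCache('/home'); // first request: fetched from the origin
getWithCache('/home'); // second request: served from the cache
console.log(originCalls); // 1
```

The second call never reaches fetchFromOrigin, and that is where the time saving comes from.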

Cache Terminology Explained

Understanding key terms like HIT, MISS, and PASS is essential to grasp how caching systems operate:

HIT:

When a requested object is found in the cache, it results in a HIT. In simpler terms, this means the cache already has the data stored and can swiftly deliver it to the user, bypassing the need to retrieve it from the original source.

MISS:

Conversely, a MISS occurs when the requested object is not present in the cache. In this scenario, the caching system must retrieve the object from the origin server, leading to slightly longer response times. Objects that consistently result in MISSes may be deemed uncacheable due to their dynamic or infrequent nature.

PASS:

In certain cases, a caching system such as Fastly (a CDN built on Varnish) may bypass the cache altogether for specific objects. When an object is marked for PASS, the cache always fetches it directly from the origin server and never tries to store it. This guarantees that the user always gets the freshest version of the object, but it gives up the benefits of caching.
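To compare the three outcomes directly, here’s a toy model of a cache choosing between HIT, MISS and PASS. It is a simplified sketch and does not reflect how Fastly is implemented. The names handle, fetchOrigin and the pass option are hypothetical, and the URL stands in for the real cache key.

```javascript
// Toy model of a cache classifying each request as HIT, MISS or PASS
const store = new Map();

// Hypothetical origin fetch, used on MISS and PASS
function fetchOrigin(url) {
  return `fresh body of ${url}`;
}

function handle(url, { pass = false } = {}) {
  if (pass) {
    // PASS: skip the cache entirely and never store the result
    return { status: 'PASS', body: fetchOrigin(url) };
  }
  if (store.has(url)) {
    // HIT: the object is already stored
    return { status: 'HIT', body: store.get(url) };
  }
  // MISS: fetch from the origin and keep a copy for next time
  const body = fetchOrigin(url);
  store.set(url, body);
  return { status: 'MISS', body };
}

console.log(handle('/logo.png').status);                // MISS
console.log(handle('/logo.png').status);                // HIT
console.log(handle('/account', { pass: true }).status); // PASS
```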

Cache Metrics: Understanding Cache Hit Ratio and Cache Coverage

Cache Hit Ratio

Cache Hit Ratio: It’s the proportion of requests successfully served from the cache compared to the total requests, calculated as the number of cache hits divided by the sum of cache hits and misses. A higher ratio indicates more efficient cache usage, while a lower ratio suggests room for improvement in caching effectiveness.

HIT / (HIT + MISS)

Cache Coverage

Cache Coverage: This metric assesses the extent of the cache’s utilization by considering the total number of requests that either resulted in a cache hit or miss, divided by the sum of all requests, including those passed through without caching. In simpler terms, it measures how much of the overall workload is handled by the cache, indicating its effectiveness in caching content. A higher cache coverage implies a larger portion of requests being managed by the cache, thus maximizing its impact on performance optimization.

(HIT + MISS) / (HIT + MISS + PASS)
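Here’s a worked example with hypothetical numbers. With 800 hits, 200 misses and 1,000 passes, the hit ratio is 800 / 1000 = 0.8, but the coverage is only 1000 / 2000 = 0.5. The cache serves most of the requests it handles, yet half of the traffic never goes through it. The sketch below computes both metrics. The function names are illustrative.

```javascript
// Cache hit ratio: HIT / (HIT + MISS)
function cacheHitRatio({ hits, misses }) {
  const handled = hits + misses;
  return handled === 0 ? 0 : hits / handled;
}

// Cache coverage: (HIT + MISS) / (HIT + MISS + PASS)
function cacheCoverage({ hits, misses, passes }) {
  const total = hits + misses + passes;
  return total === 0 ? 0 : (hits + misses) / total;
}

// Hypothetical request counts, for illustration only
const counts = { hits: 800, misses: 200, passes: 1000 };
console.log(cacheHitRatio(counts)); // 0.8
console.log(cacheCoverage(counts)); // 0.5
```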

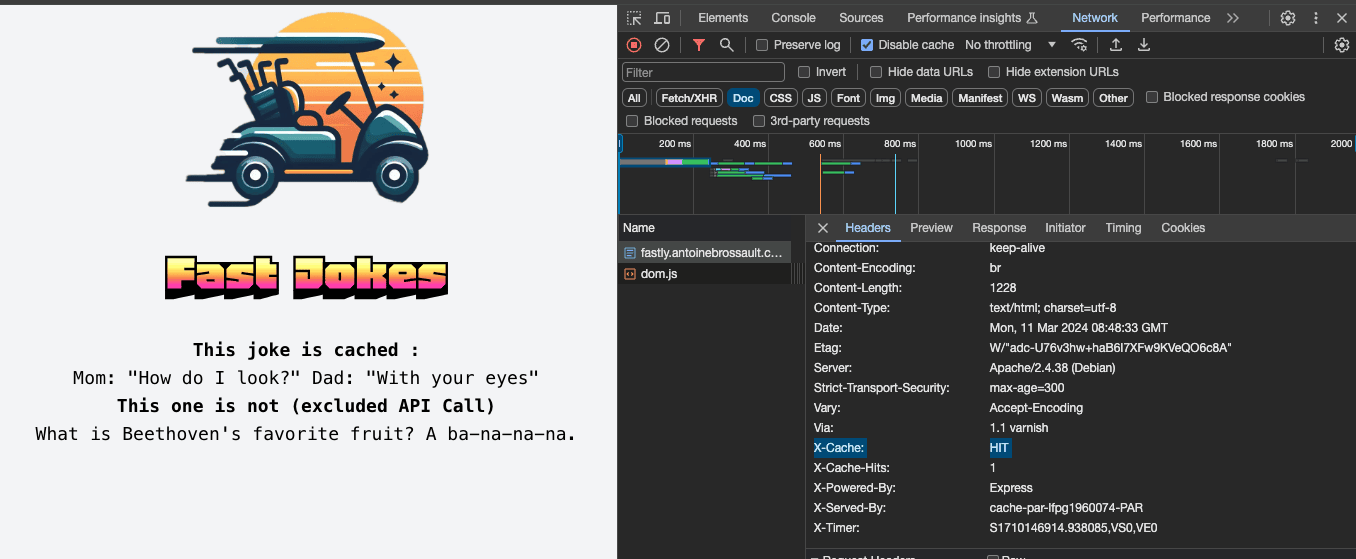

How to check if a response came from the cache?

To find out whether a request hit or missed the cache, check the response headers. In my example, an "X-Cache" header showing "HIT" means the response was served from the cache. "MISS" means it was fetched from the origin.
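If you want to script this check, here’s a small sketch for Node.js 18 or later, which ships a global fetch and Headers. It assumes the cache reports its status in an X-Cache header, as Fastly does. When the header holds several comma-separated values (one per cache layer the request went through), the sketch counts any value starting with HIT as "served from a cache". checkUrl and cacheStatusFromHeaders are hypothetical helper names.

```javascript
// Read the cache status from a response's X-Cache header (Node.js 18+)
function cacheStatusFromHeaders(headers) {
  // Headers.get() is case-insensitive and returns null when the header is absent
  const raw = headers.get('x-cache');
  if (raw === null) return { values: [], fromCache: false };
  // One value per cache layer, e.g. "MISS, HIT"
  const values = raw.split(',').map((value) => value.trim().toUpperCase());
  return { values, fromCache: values.some((value) => value.startsWith('HIT')) };
}

async function checkUrl(url) {
  const response = await fetch(url, { method: 'HEAD' });
  return cacheStatusFromHeaders(response.headers);
}

// Usage (needs network access; use a URL served through your CDN):
// checkUrl('https://example.com/').then((status) => console.log(status));
```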

Manage the cache duration

The cache duration is defined by a Time-To-Live (TTL), expressed in seconds.

The TTL is not a header itself. The cache computes it from the caching headers on the response.

The Age header gives the number of seconds the object has been in the cache, and it keeps increasing (you can see it in the gif). Once the Age value reaches the TTL, the cached object expires: it is now stale, and the next request goes back to the origin for a fresh copy.
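Here’s a sketch of that rule. It assumes you already know the TTL (say 3600 seconds, from a max-age=3600 directive). The remaining freshness is then simply TTL minus Age, and the object stays fresh only while Age is below the TTL. The function names are hypothetical. The sketch also ignores the fact that real caches can be told to keep serving a stale object briefly (for example with stale-while-revalidate).

```javascript
// Remaining freshness in seconds: TTL minus the Age header value
function secondsUntilExpiry(ttlSeconds, ageHeader) {
  // A missing or malformed Age header is treated as 0 (just cached)
  const age = Number.parseInt(ageHeader ?? '0', 10) || 0;
  return ttlSeconds - age;
}

// The object stops being fresh once Age reaches the TTL
function isStale(ttlSeconds, ageHeader) {
  return secondsUntilExpiry(ttlSeconds, ageHeader) <= 0;
}

console.log(secondsUntilExpiry(3600, '120')); // 3480
console.log(isStale(3600, '3601'));           // true
```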

How to keep track of the objects stored in the cache?

How does a CDN like Fastly keep track of what’s in the cache? Well, on each node (and there are multiple nodes on a POP – Point of Presence), there’s a hash table that does the job.

A key is generated for every request by hashing the host and URL. Whenever a response is cacheable, it’s added to this table under that key. So if you’re looking for something in the cache and its key isn’t in this table, it isn’t in the cache.

So the host and URL are hashed into an entry like this:

{
  "5678ab57f5aaad6c57ea5f0f0c49149976ed8d364108459f7b9c5fb6de3853d6": "somewhere/in/the/cache.html"
}
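Here’s a simplified model of that hash table in Node.js. Fastly’s exact hash inputs and hash function aren’t covered here. Hashing host + URL with SHA-256 is an assumption, chosen because the key in the example above is 64 hex characters long. storeObject and lookup are hypothetical names.

```javascript
const crypto = require('crypto');

// Simplified hash table: key = SHA-256 of host + URL, value = where the object is stored
const hashTable = new Map();

function cacheKey(host, url) {
  return crypto.createHash('sha256').update(host + url).digest('hex');
}

function storeObject(host, url, location) {
  hashTable.set(cacheKey(host, url), location);
}

function lookup(host, url) {
  // No entry for the key means the object is not in the cache
  return hashTable.get(cacheKey(host, url)) ?? 'MISS';
}

storeObject('example.com', '/index.html', 'somewhere/in/the/cache.html');
console.log(lookup('example.com', '/index.html')); // somewhere/in/the/cache.html
console.log(lookup('example.com', '/other.html')); // MISS
```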

How to use headers to control the cache

You’ve got various headers to control caching in your response, determining whether to cache it and for how long.

Cache-Control:

This header is respected by all caches, including browsers. Here are some Cache-Control values you can use:

To make these directives concrete, imagine your web server is a store and your visitors are customers. The headers are your instructions about how fresh their groceries need to be:

Cache-Control: max-age= (seconds)

This is like saying "keep these groceries fresh for X seconds." Browsers will use the stored version for that long before checking for a new one.

Cache-Control: s-maxage= (seconds)

This is for the big warehouses (shared caches, such as a CDN) that store groceries for many stores. It tells those warehouses how long to keep things fresh, overriding the regular max-age for them.

Cache-Control: private

This is like saying "these groceries are for this customer only, don’t share them with others." Browsers may store the response, but shared caches such as a CDN must not.

Cache-Control: no-cache

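This is like saying "you can keep these groceries, but check with the store that they’re still fresh before every use." Caches, including browsers, may store the response, but they must revalidate it with the origin before serving it.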

Cache-Control: no-store

This is stricter than no-cache. It’s like saying "don’t even keep a shopping list for these groceries, always get new ones." No cache, browser or shared, may store any part of this response.
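To connect the grocery analogy to actual behavior, here’s a simplified sketch that parses a Cache-Control header and summarizes what a browser and a shared cache (such as a CDN) may do with the response. The parser is deliberately minimal and skips many edge cases of the HTTP specification. parseCacheControl and describeCacheControl are hypothetical names.

```javascript
// Parse a Cache-Control header into a { directive: value } object
function parseCacheControl(header) {
  const directives = {};
  for (const part of (header || '').split(',')) {
    const [name, value] = part.trim().split('=');
    if (!name) continue; // skip empty parts, e.g. from a trailing comma
    directives[name.toLowerCase()] = value === undefined ? true : value.replace(/"/g, '');
  }
  return directives;
}

// Summarize what browsers and shared caches may do with the response
function describeCacheControl(header) {
  const d = parseCacheControl(header);
  const maxAge = d['max-age'] !== undefined ? Number(d['max-age']) : null;
  const sMaxAge = d['s-maxage'] !== undefined ? Number(d['s-maxage']) : null;
  return {
    browserMayStore: !d['no-store'],
    sharedCacheMayStore: !d['no-store'] && !d['private'],
    mustRevalidate: Boolean(d['no-cache']),
    browserTtl: maxAge,
    sharedTtl: sMaxAge ?? maxAge, // s-maxage overrides max-age for shared caches
  };
}

console.log(describeCacheControl('max-age=60, s-maxage=3600').sharedTtl); // 3600
console.log(describeCacheControl('private, max-age=600').sharedCacheMayStore); // false
```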

Surrogate-Control:

Another header you can use to control the cache is the Surrogate-Control.

This header is respected by caching servers but not browsers.

The values you can set for Surrogate-Control include:

max-age= (seconds)

The caching server will use the stored version for that many seconds before checking for a new one.

Expires

Expires is a header that any cache, including browsers, respects. It’s only used if neither Cache-Control nor Surrogate-Control headers are present in the response. An example of the Expires header would be:

Expires: Wed, 21 Oct 2026 07:28:00 GMT

This indicates that the cached response will expire on the specified date and time.
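Here’s a sketch of how a shared cache could combine these headers to pick a TTL. It assumes the order of preference Fastly documents: Surrogate-Control max-age first, then Cache-Control s-maxage, then Cache-Control max-age, and finally Expires. Headers are passed as a plain object with lowercase names, and computeTtl and directiveSeconds are hypothetical helpers. In this sketch, Expires is only consulted when no max-age-style directive is found.

```javascript
// Extract "name=<seconds>" from a header value, or null if absent
function directiveSeconds(header, name) {
  if (!header) return null;
  const match = header.match(new RegExp(`(?:^|,)\\s*${name}=(\\d+)`, 'i'));
  return match ? Number(match[1]) : null;
}

// Surrogate-Control max-age > Cache-Control s-maxage > Cache-Control max-age > Expires
function computeTtl(headers, now = Date.now()) {
  const surrogate = directiveSeconds(headers['surrogate-control'], 'max-age');
  if (surrogate !== null) return surrogate;

  const sMaxAge = directiveSeconds(headers['cache-control'], 's-maxage');
  if (sMaxAge !== null) return sMaxAge;

  const maxAge = directiveSeconds(headers['cache-control'], 'max-age');
  if (maxAge !== null) return maxAge;

  if (headers['expires']) {
    const expiresAt = Date.parse(headers['expires']);
    if (!Number.isNaN(expiresAt)) {
      return Math.max(0, Math.floor((expiresAt - now) / 1000));
    }
  }
  return null; // no explicit TTL: the cache falls back to its configured default
}

console.log(computeTtl({ 'cache-control': 'max-age=60, s-maxage=3600' })); // 3600
```

The now parameter makes the Expires branch testable. With now set one minute before the Expires date from the example above, computeTtl returns 60.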

How to avoid caching (PASS)?

When you don’t want to cache something, you can use the Pass behavior. You can choose to pass on either the request or the response.

If it’s a PASS, the response won’t be stored as a cached object in the hash table we talked about earlier.

Default request that will PASS

By default, any request that isn’t a GET or HEAD method will pass.

The HEAD method is similar to the GET method, except that it requests only the headers of a resource, not its content. It’s often used to check whether a resource exists without downloading it. Here’s a HEAD request made with axios:

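const axios = require('axios');

// HEAD asks for the status and headers only; the server sends no body
async function makeHeadRequest() {
  const response = await axios.head('https://example.com');
  console.log('Status:', response.status);
  console.log('Headers:', response.headers);
}

makeHeadRequest().catch((error) => console.error('HEAD request failed:', error.message));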

With Fastly, you have the option to pass on either the request or the response. Passing on the request is faster because you declare up front that the request should PASS, so no cache lookup is needed. When you pass on the response instead, the decision can only be made after the origin’s response comes back.

When you pass on the request, the response won’t be stored in the hash table, because the request is marked as non-cacheable before the cache is even consulted.
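In Fastly, this request-level decision is made in VCL (in the vcl_recv subroutine). The JavaScript below only mirrors that logic as a sketch. It passes every method other than GET and HEAD. It also adds a hypothetical custom rule that passes anything under /account, the kind of decision you can make on the request before any cache lookup. requestDecision and PASS_PREFIXES are illustrative names.

```javascript
// Request-level PASS decision, made before any cache lookup
const CACHEABLE_METHODS = new Set(['GET', 'HEAD']);
const PASS_PREFIXES = ['/account']; // hypothetical custom rule

function requestDecision(method, path) {
  if (!CACHEABLE_METHODS.has(method.toUpperCase())) return 'PASS';
  if (PASS_PREFIXES.some((prefix) => path.startsWith(prefix))) return 'PASS';
  return 'LOOKUP'; // look the request up in the hash table
}

console.log(requestDecision('GET', '/home'));           // LOOKUP
console.log(requestDecision('POST', '/home'));          // PASS
console.log(requestDecision('GET', '/account/orders')); // PASS
```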

Default response that will PASS

By default, responses are cached, although some status codes are not cached by default.

Responses will not be cached if your origin server adds a Cache-Control: private header or a Set-Cookie header to the response.

A PASS response is stored in the hash table as PASS

When a response is set to PASS, it isn’t stored in the cache like other responses. However, Fastly still keeps track of it by adding an entry to the hash table with a special "PASS" value. This lets Fastly recognize later requests for the same object and send them straight to the origin instead of going through the usual caching mechanisms.

// https://fastly.antoinebrossault.com/pass
{
  "978032bc707b66394c02b71d0fa70918c2906634c0d26d22c8f19e7754285054": "PASS"
}
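Here’s a toy model of that behavior. It mirrors the two default rules described above: a Cache-Control: private header or a Set-Cookie header makes the response PASS. Headers are a plain object with lowercase names. The PASS marker is a Symbol so it can’t collide with a real response body, and storeResponse and lookup are hypothetical names. In Fastly itself this decision lives in VCL (vcl_fetch), not in JavaScript, and hashing host + URL with SHA-256 is an assumption.

```javascript
const crypto = require('crypto');

const PASS_MARKER = Symbol('PASS'); // stands in for the "PASS" value in the hash table
const hashTable = new Map();

function keyFor(host, url) {
  return crypto.createHash('sha256').update(host + url).digest('hex');
}

// Response-level PASS: Cache-Control: private or a Set-Cookie header
function shouldPassResponse(headers) {
  const cacheControl = (headers['cache-control'] || '').toLowerCase();
  return cacheControl.includes('private') || 'set-cookie' in headers;
}

function storeResponse(host, url, headers, body) {
  // A PASS response is remembered with a marker instead of its body
  hashTable.set(keyFor(host, url), shouldPassResponse(headers) ? PASS_MARKER : body);
}

function lookup(host, url) {
  const entry = hashTable.get(keyFor(host, url));
  if (entry === undefined) return 'MISS';
  if (entry === PASS_MARKER) return 'PASS'; // go straight to the origin
  return 'HIT';
}

storeResponse('fastly.antoinebrossault.com', '/pass', { 'set-cookie': 'session=abc' }, '<p>private page</p>');
console.log(lookup('fastly.antoinebrossault.com', '/pass')); // PASS
```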

Conclusion

- Fastly is another caching layer between the client and the content at origin.

- Each request generates a hash key.

- If the hash key matches with an object in the hash table, then it’s a HIT and will be served from cache.

- Some status codes will NOT cache by default.

- Caching headers (Surrogate-Control, Cache-Control, Expires) determine the TTL and whether an object is cached at all.

- Using PASS prevents a response from being cached.

- You can choose to pass on the request and/or the response.