14/06/2024

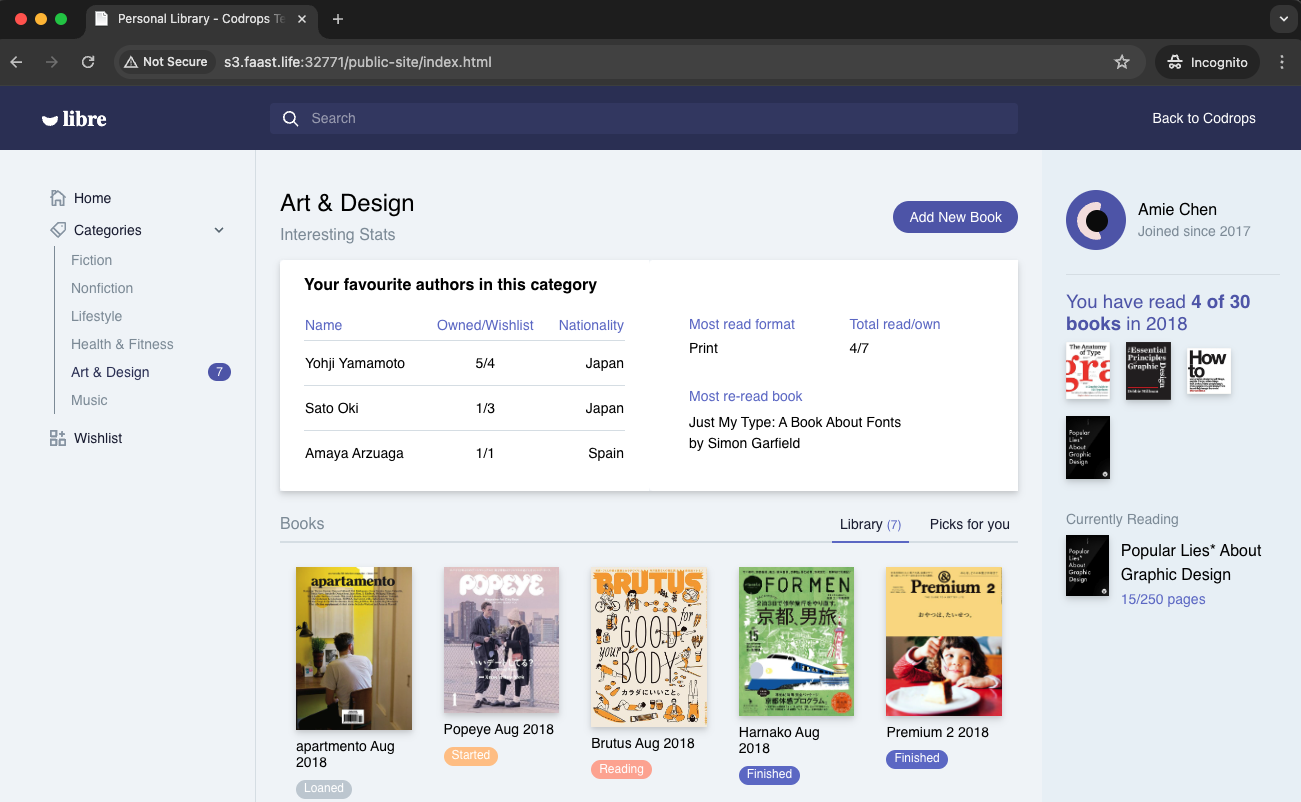

In this project, I will deploy a static website on Minio, an Amazon S3 alternative. My goal is to use this S3-compatible storage as the backend for my website. To ensure the app can scale globally and handle high traffic, I will use Fastly Compute to distribute and cache the content.

Upload Files to Minio S3

Uploading the site to a Minio bucket provides a public URL for accessing the stored content. However, since Minio is self-hosted, it may not handle a high volume of traffic efficiently on its own.

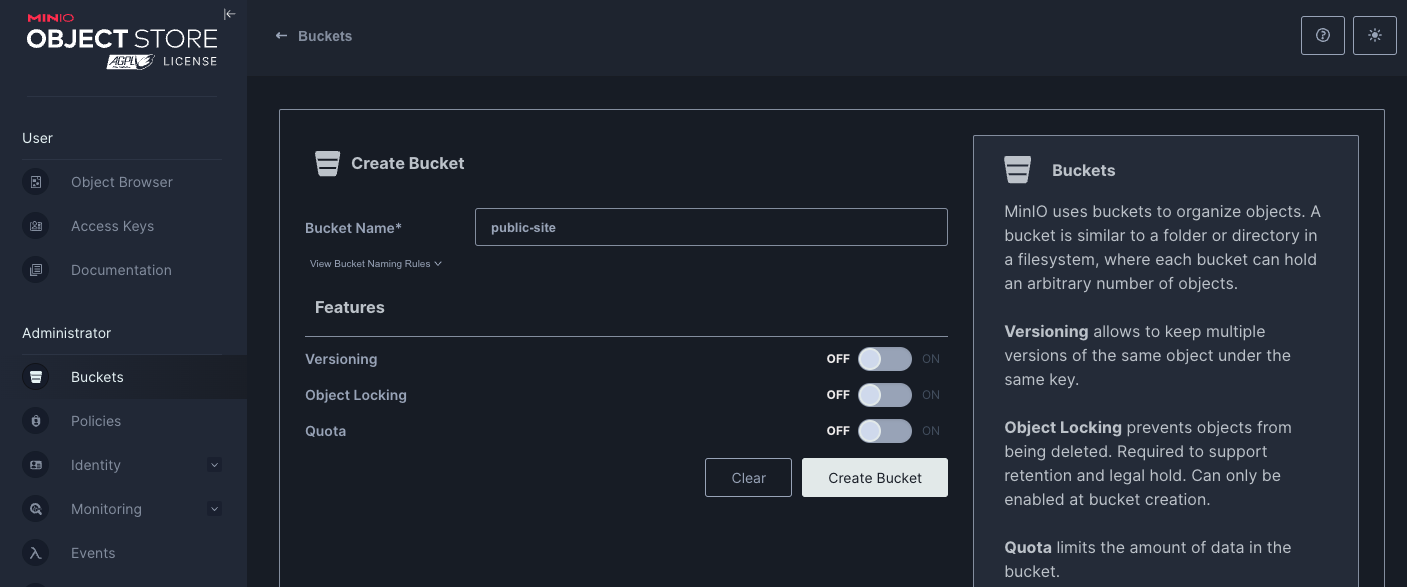

First, create a bucket in Minio and configure it to be publicly accessible for read-only operations.

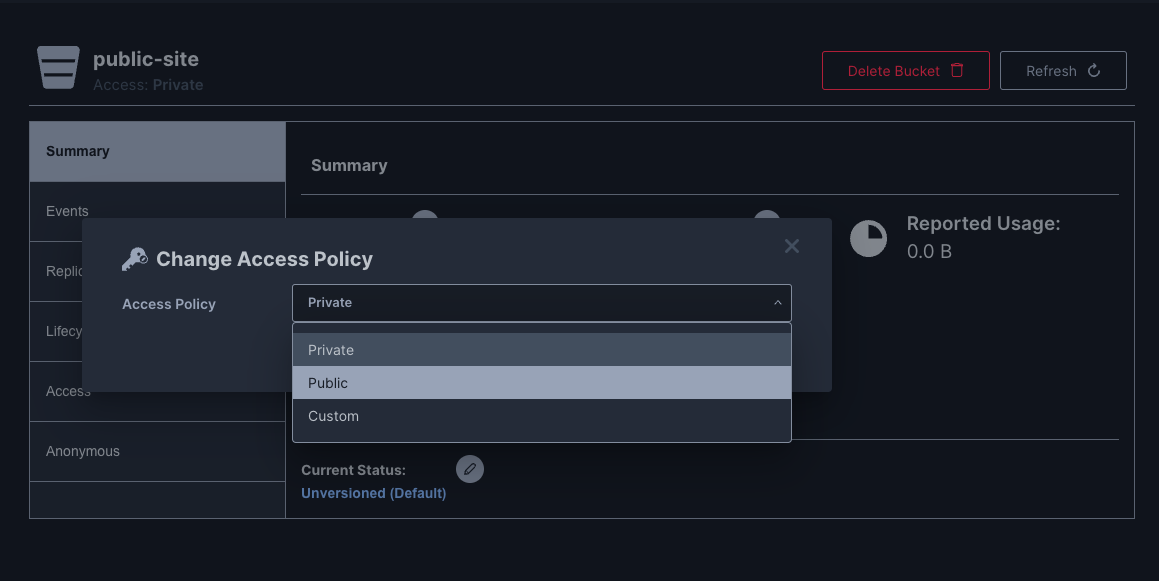

Then I change the Access Policy to be public.

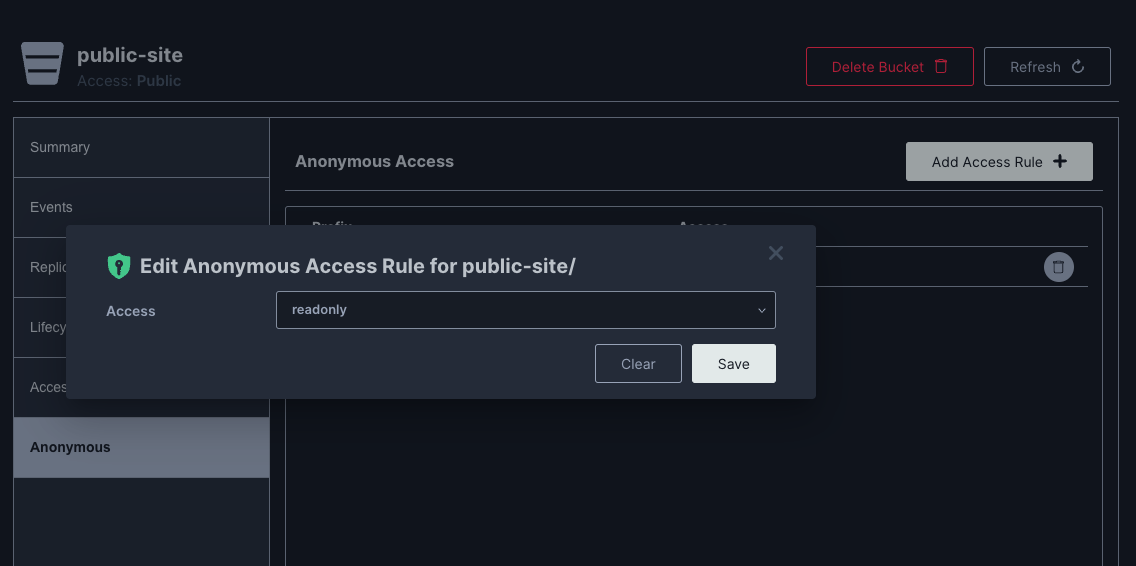

Then I set the anonymous Access Rule to readonly.

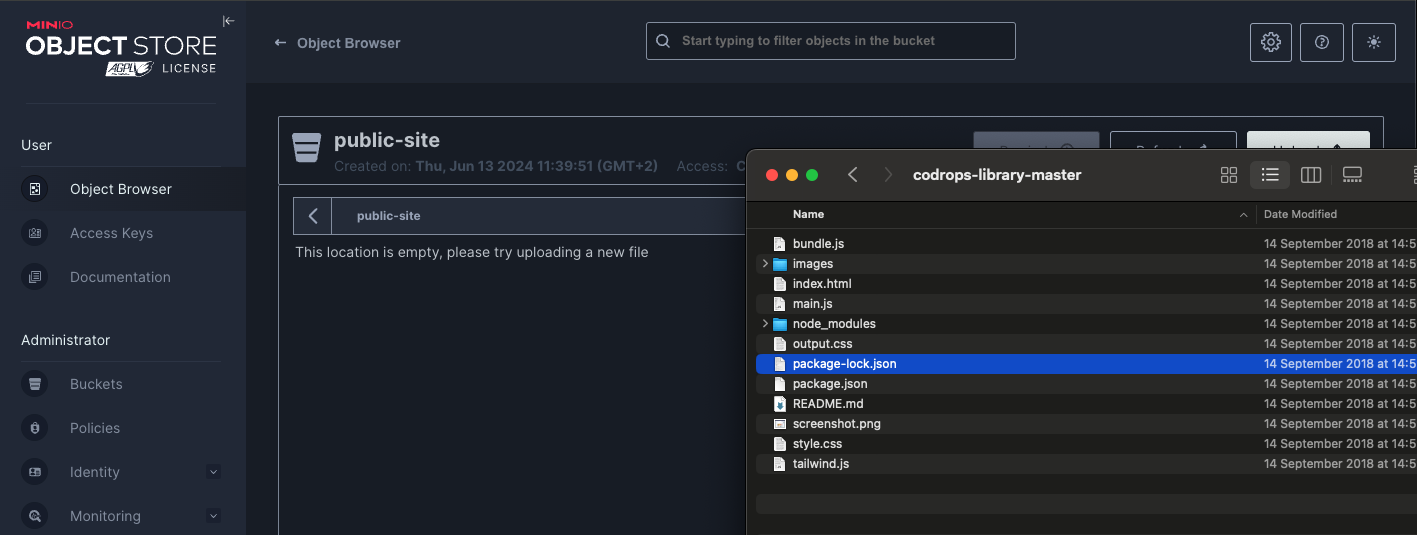

Then I upload the files.

I can now access my site on my S3 bucket:

http://s3.faast.life:32771/public-site/index.html

Use Fastly Compute to Serve the App Globally

The advantage of using Fastly Compute is that it enables global distribution and caching of the site hosted on Minio. By leveraging Fastly’s network, we can ensure that the content is served quickly to users around the world, effectively handling high traffic volumes and improving the site’s performance.

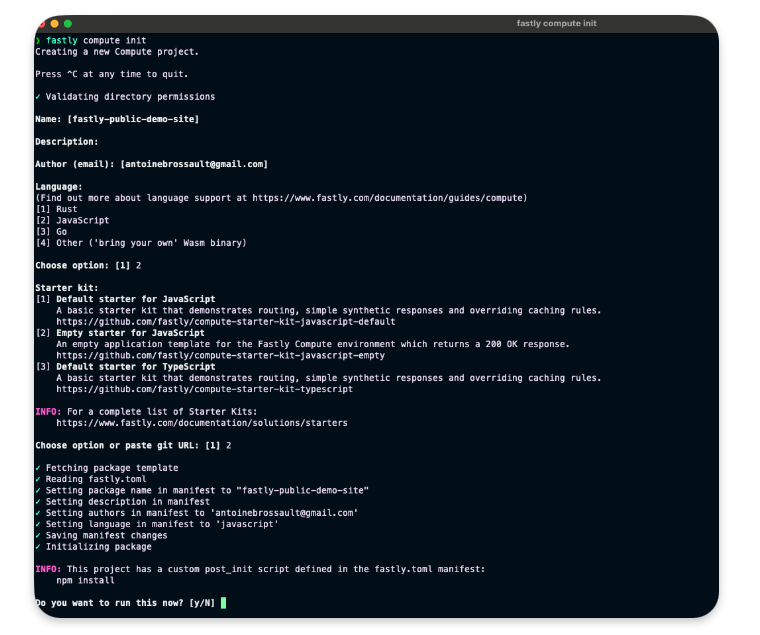

Init the compute project

In the folder of your choice, run:

fastly compute init

Then choose the following options:

Language:

[2] JavaScript

...

Starter kit:

[2] Empty starter for JavaScript

...

Do you want to run this now?

Yes

Adjustment to our setup

In your package.json, add this line to the scripts section:

"dev": "fastly compute serve --watch",

Run the project locally

Run the following command to start the local server:

npm run dev

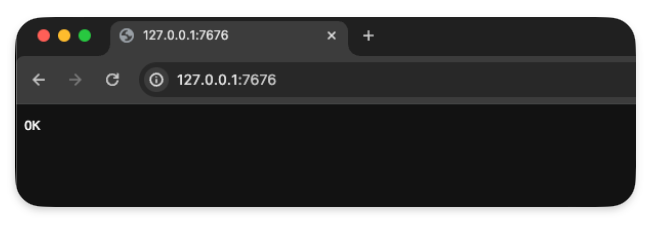

Now, if you navigate to:

http://localhost:7676/

You should see something like this:

Handle requests

To handle requests, a convenient option is the @fastly/expressly package, which gives us a router similar to Express to manage our routes.

npm install @fastly/expressly

Then use the following code:

    import { Router } from "@fastly/expressly";

    const router = new Router();

    router.get("/", async (req, res) => {
      res.send("Hello 👋");
    });

    router.listen();

This should return "Hello 👋" when you visit http://localhost:7676/

Connect our S3 backend

Now I want our Compute function to fetch http://s3.faast.life:32771/public-site/index.html from our S3 bucket whenever we visit http://localhost:7676/

Add the backend to the fastly.toml file:

    [local_server]
      [local_server.backends]
        [local_server.backends.s3_faast_life]
          override_host = "s3.faast.life"
          url = "http://s3.faast.life:32771"

Call your backend

    router.get("/", async (req, res) => {
      // Fetch index.html from the bucket through the backend declared in fastly.toml
      let beResp = await fetch(
        "http://s3.faast.life:32771/public-site/index.html",
        {
          backend: "s3_faast_life"
        }
      );
      res.send(beResp);
    });

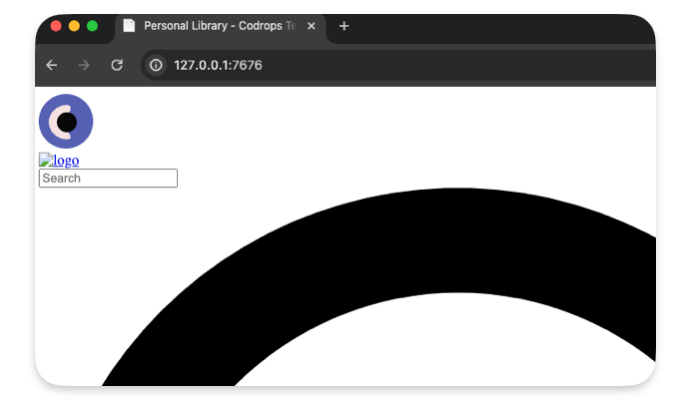

It should display a page with broken CSS and JavaScript. That’s normal, as we don’t handle the requests for CSS and JavaScript files yet.

Handle the JavaScript / CSS / images requests

To make our site work, we need to point the asset requests to the right location.

The following code gets the pathname of the request and maps it to the matching object in our S3 bucket:

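    router.get(/\.(jpe?g|png|gif|jpg|css|js|svg)$/, async (req, res) => {
      const pathname = new URL(req.url).pathname;
      // Stop here if there is no pathname; without the return, the fetch below would still run
      if (!pathname) return res.withStatus(500).json({ error: "no pathname" });
      // Fetch the matching object from the bucket through the backend
      let beResp = await fetch(
        `http://s3.faast.life:32771/public-site${pathname}`, {
          backend: "s3_faast_life"
        }
      );
      res.send(beResp);
    });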
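To make the path mapping easier to check, here is a minimal sketch of the same logic as a plain function that runs outside Fastly. The helper name toBucketUrl and the constants ORIGIN and BUCKET are my own, introduced for illustration; they are not part of expressly or the Fastly SDK.

```javascript
// Hypothetical helper (not part of expressly or the Fastly SDK):
// maps an incoming request URL to the matching object URL in the bucket.
const ORIGIN = "http://s3.faast.life:32771"; // Minio endpoint used in this article
const BUCKET = "public-site";                // bucket name used in this article

// Same extensions as the asset route (jpe?g already covers jpg).
const ASSET_PATTERN = /\.(jpe?g|png|gif|css|js|svg)$/;

function toBucketUrl(requestUrl) {
  // Only the pathname is used, so query strings are dropped.
  const { pathname } = new URL(requestUrl);
  if (!ASSET_PATTERN.test(pathname)) {
    return null; // not a static asset handled by this route
  }
  return `${ORIGIN}/${BUCKET}${pathname}`;
}

console.log(toBucketUrl("http://localhost:7676/css/style.css"));
// → http://s3.faast.life:32771/public-site/css/style.css
```

A request for /css/style.css on the Compute service therefore ends up at /public-site/css/style.css on the bucket, which is why the assets must keep the same folder layout in Minio as in the site.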

Deploy the project

It’s now time to deploy the project to the Fastly network. To do so, run the following command:

npm run deploy

    ❯ npm run deploy

    > deploy
    > fastly compute publish

    ✓ Verifying fastly.toml
    ✓ Identifying package name
    ✓ Identifying toolchain
    ✓ Running [scripts.build]
    ✓ Creating package archive

    SUCCESS: Built package (pkg/fastly-public-demo-site.tar.gz)

    ✓ Verifying fastly.toml

    INFO: Processing of the fastly.toml [setup] configuration happens only for a new service. Once a service is created, any further changes to the service or its resources must be made manually.

    Select a domain name

    Domain: [inherently-elegant-eft.edgecompute.app] publicSiteDemo.edgecompute.app

    ✓ Creating domain 'publicSiteDemo.edgecompute.app'
    ✓ Uploading package
    ✓ Activating service (version 1)

    Manage this service at:
    https://manage.fastly.com/configure/services/6lyvl2bwrC9smHn3coFbv3

    View this service at:
    https://publicSiteDemo.edgecompute.app

    SUCCESS: Deployed package (service 6lyvl2bwrC9smHn3coFbv3, version 1)

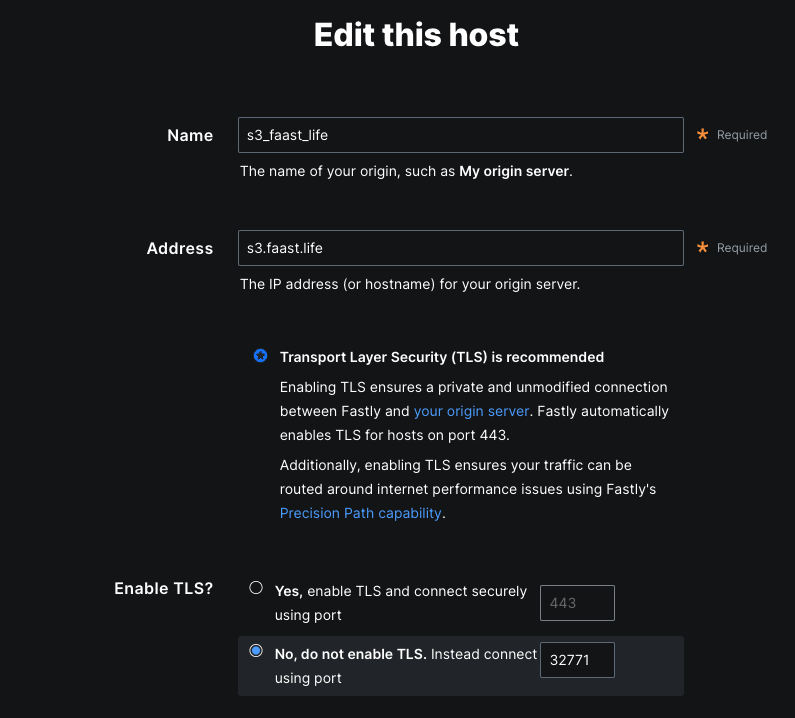

Edit the backend

We need to create a backend that will reflect the options we used with our local configuration.

Save the configuration

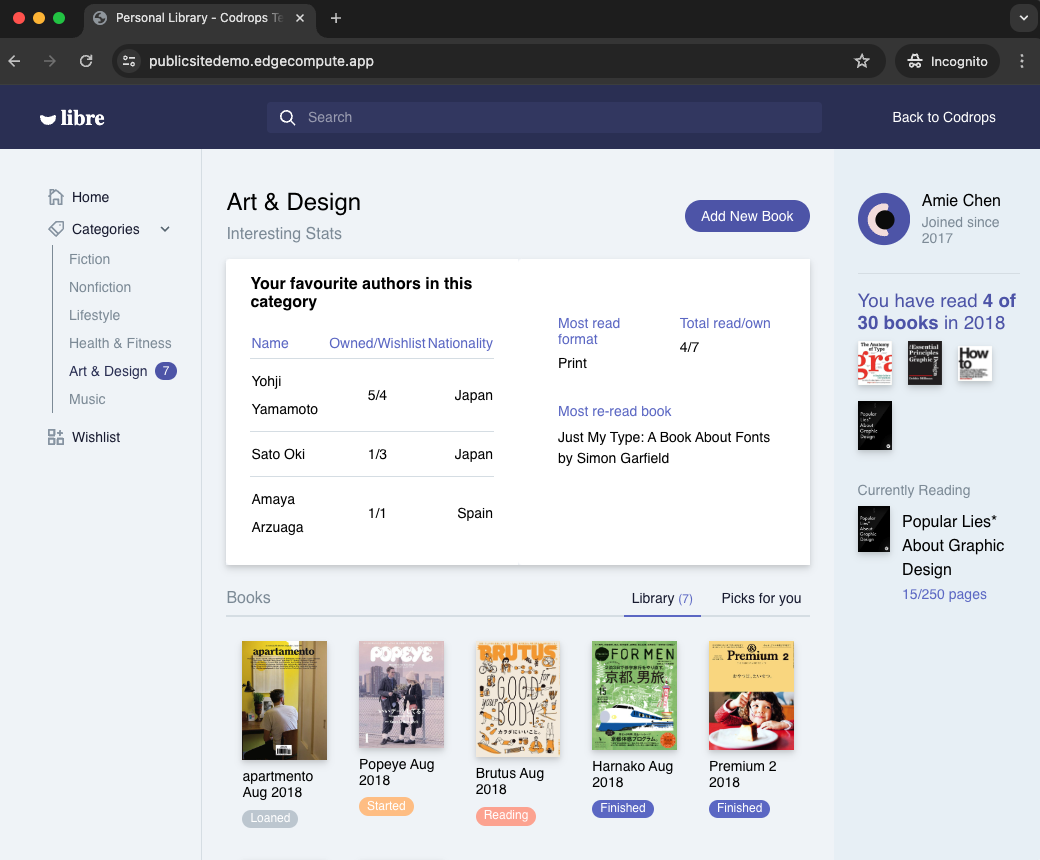

Test the deployment

Now we can visit our website to check the deployment:

https://publicsitedemo.edgecompute.app/

If everything worked as expected, we should see our site.

Use Fastly core cache to scale

So far, we only forward the requests to our S3 bucket, which doesn’t really help the site scale because every visit still reaches Minio. That’s why we need to add some caching by using the Fastly core cache.

Add caching

import { CacheOverride } from "fastly:cache-override";

Update our backend calls

Now let’s add caching with the CacheOverride object.

Keep index.html in cache for 10 minutes:

    router.get("/", async (req, res) => {
      let beResp = await fetch(
        "http://s3.faast.life:32771/public-site/index.html",
        {
          backend: "s3_faast_life",
          cacheOverride: new CacheOverride("override", {
            ttl: 60 * 10 // cache this request for 10min
          })
        },
      );
      res.send(beResp);
    });

And we do the same thing for the assets:

    router.get(/\.(jpe?g|png|gif|jpg|css|js|svg)$/, async (req, res) => {
      const pathname = new URL(req.url).pathname;
      // Stop here if there is no pathname; without the return, the fetch below would still run
      if (!pathname) return res.withStatus(500).json({ error: "no pathname" });
      let beResp = await fetch(
        `http://s3.faast.life:32771/public-site${pathname}`, {
          backend: "s3_faast_life",
          cacheOverride: new CacheOverride("override", {
            ttl: 60 * 10 // cache this request for 10min
          })
        }
      );
      res.send(beResp);
    });

Check whether our content is cached:

curl -sSL -D - "https://publicsitedemo.edgecompute.app/" -o /dev/null

This should return something like:

    HTTP/2 200
    accept-ranges: bytes
    x-served-by: cache-par-lfpg1960086-PAR
    content-type: text/html
    etag: "47b56ea2f1770dc224f2047b30c57d15"
    last-modified: Thu, 13 Jun 2024 09:44:52 GMT
    server: MinIO
    strict-transport-security: max-age=31536000; includeSubDomains
    vary: Origin, Accept-Encoding
    x-amz-id-2: dd9025bab4ad464b049177c95eb6ebf374d3b3fd1af9251148b658df7ac2e3e8
    x-amz-request-id: 17D899918B3C2797
    x-content-type-options: nosniff
    x-xss-protection: 1; mode=block
    date: Thu, 13 Jun 2024 15:21:48 GMT
    age: 3098
    x-cache: HIT
    x-cache-hits: 4
    content-length: 26217

The content is served from the Paris POP, and it comes from the cache (HIT):

    x-served-by: cache-par-lfpg1960086-PAR
    x-cache: HIT
    x-cache-hits: 4
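To read these headers from a script instead of by eye, a small helper can pull out the POP, the cache status and the age. This is only a sketch: summarizeCache is a hypothetical name of my own, and it assumes that when x-served-by or x-cache list several comma-separated values, the last one describes the cache node that answered the client.

```javascript
// Hypothetical helper: summarizes the cache-related headers of a response.
// Accepts any object with a get(name) method, such as a fetch Headers object.
function summarizeCache(headers) {
  // Assumption: with several comma-separated entries, the last one is the
  // node that answered the client.
  const lastEntry = (value) => {
    const parts = (value || "").split(",").map((s) => s.trim()).filter(Boolean);
    return parts.length > 0 ? parts[parts.length - 1] : "";
  };

  const node = lastEntry(headers.get("x-served-by")); // e.g. cache-par-lfpg1960086-PAR
  const state = lastEntry(headers.get("x-cache"));    // e.g. HIT or MISS

  return {
    pop: node.split("-").pop() || null, // trailing segment of the node name
    hit: state === "HIT",
    ageSeconds: Number(headers.get("age") || 0),
  };
}

// Values copied from the curl output in this article.
const sample = new Headers({
  "x-served-by": "cache-par-lfpg1960086-PAR",
  "x-cache": "HIT",
  "age": "3098",
});

const summary = summarizeCache(sample);
console.log(summary.pop, summary.hit, summary.ageSeconds); // PAR true 3098
```

The same function can be given the headers of a real response, for example after fetching https://publicsitedemo.edgecompute.app/ from Node. An age of 3098 seconds is longer than the 10 minute TTL set above, so this capture was probably taken with a different cache setting.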

Add compression to our static files

By default, our text-based files (HTML, CSS, JavaScript…) are not compressed by our S3 bucket. We can activate compression at the Compute level by simply adding an x-compress-hint header to the response. This reduces the amount of data sent to the browser and speeds up our website.

    router.use((req, res) => {
      // Activate compression on all requests
      res.headers.set("x-compress-hint", "on");
    });
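The middleware above sets the hint on every response, images included. Formats such as JPEG or PNG are already compressed, so a possible refinement is to set the header only for text-based files. The sketch below shows that check as a plain function; shouldCompress and TEXT_EXTENSIONS are hypothetical names of my own, and the extension list is an assumption to adapt to your site.

```javascript
// Hypothetical helper: decides whether a path points to a text-based file
// that is worth compressing. The extension list is an assumption.
const TEXT_EXTENSIONS = /\.(html?|css|js|svg|json|txt|xml)$/i;

function shouldCompress(pathname) {
  // "/" serves index.html in this article's setup, so treat it as HTML.
  return pathname === "/" || TEXT_EXTENSIONS.test(pathname);
}

console.log(shouldCompress("/"));              // true
console.log(shouldCompress("/css/style.css")); // true
console.log(shouldCompress("/img/logo.png"));  // false
```

Inside the middleware, the header would then be set only when shouldCompress(new URL(req.url).pathname) returns true.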